- Create a training collage of positive and negative example images.

- Learn the epitome of the training collage.

- Calculate the conditional probability of each epitome patch being mapped into an image given that the image is a positive (or negative) example.

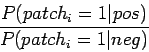

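- Select the epitome patches that maximize the ratio of their probability of mapping into a positive example image to their probability of mapping into a negative example image:

  $$\frac{P(m_i = 1 \mid +)}{P(m_i = 1 \mid -)} \tag{1}$$

  where $m_i = 1$ means that epitome patch $e_i$ maps into the image, and $+$ and $-$ denote positive and negative examples.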

- These patches, and their conditional probabilities of being mapped into positive and negative example images, can then be used to classify new images.

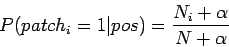
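The last two steps can be sketched as follows, assuming the per-patch probabilities $P(m_i = 1 \mid +)$ and $P(m_i = 1 \mid -)$ have already been estimated and stored in NumPy arrays `p_pos` and `p_neg`. The function names, the parameter `k` (how many patches to keep), and `prior_pos` are hypothetical. The source does not say how the selected probabilities are combined to classify a new image; this sketch *assumes* a naive-Bayes-style log-odds rule, treating each selected patch's mapping event as independent given the class.

```python
import numpy as np

def select_patches(p_pos, p_neg, k):
    """Indices of the k epitome patches with the largest ratio (1).

    The pseudocount keeps p_neg strictly positive, so the division is safe.
    """
    ratio = p_pos / p_neg
    return np.argsort(ratio)[::-1][:k]  # largest ratios first

def classify(mapped, p_pos, p_neg, selected, prior_pos=0.5):
    """Assumed naive-Bayes decision: True means 'positive example'.

    mapped[i] is True if epitome patch i maps into the new image.
    Each selected patch contributes the log-likelihood ratio of its
    observed mapping event (mapped or not mapped) under the two classes.
    """
    log_odds = np.log(prior_pos) - np.log(1.0 - prior_pos)
    for i in selected:
        if mapped[i]:
            log_odds += np.log(p_pos[i]) - np.log(p_neg[i])
        else:
            # With a pseudocount, probabilities stay below 1, so 1 - p > 0.
            log_odds += np.log(1.0 - p_pos[i]) - np.log(1.0 - p_neg[i])
    return bool(log_odds > 0)

p_pos = np.array([0.75, 0.5, 0.25])
p_neg = np.array([0.25, 0.5, 0.75])
chosen = select_patches(p_pos, p_neg, k=1)
print(list(chosen), classify(np.array([True, False, False]), p_pos, p_neg, chosen))
```

Only the ratio in (1) comes from the text; a different combination rule (for example, a learned threshold on the log-odds) would fit the description equally well.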

The conditional probability of a patch mapping into an image, given that the image is a positive example, is simply calculated as

$$P(m_i = 1 \mid +) = \frac{n_i^{+} + c}{N^{+} + 2c} \tag{2}$$

where $m_i = 1$ means that epitome patch $e_i$ does map into the example image, $n_i^{+}$ is the number of positive example images that patch $e_i$ maps into, $N^{+}$ is the total number of positive example images, and $c$ is a pseudocount to avoid getting zero probabilities. We say that patch $e_i$ from the epitome maps into an image if, for at least one image patch, $e_i$ has the highest posterior probability among all epitome patches of mapping into that image patch. The patch mapping probability for negative example images is calculated in a similar fashion.
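The mapping rule and the pseudocount estimate can be sketched in a few lines of NumPy. This assumes that, for each example image, the learned epitome has already produced a hypothetical array `posteriors` of shape `(num_image_patches, num_epitome_patches)`, where `posteriors[j, i]` is the posterior probability that epitome patch $e_i$ generated image patch $j$. It also assumes the smoothed estimate takes the form $(n_i + c)/(N + 2c)$; the function names are illustrative only.

```python
import numpy as np

def maps_into(posteriors):
    """Boolean vector over epitome patches for one image.

    Epitome patch i maps into the image if it is the most probable
    epitome patch (argmax) for at least one image patch.
    """
    winners = posteriors.argmax(axis=1)  # best epitome patch per image patch
    mapped = np.zeros(posteriors.shape[1], dtype=bool)
    mapped[winners] = True
    return mapped

def mapping_probability(class_posteriors, c=1.0):
    """Estimate P(m_i = 1 | class) for every epitome patch i.

    class_posteriors: list of posterior arrays, one per example image of a
    single class (all positive or all negative examples).
    Assumed smoothing: (n_i + c) / (N + 2c).
    """
    hits = np.stack([maps_into(p) for p in class_posteriors])
    counts = hits.sum(axis=0)      # n_i: images of this class patch i maps into
    total = len(class_posteriors)  # N: number of images of this class
    return (counts + c) / (total + 2 * c)

img1 = np.array([[0.9, 0.1, 0.0], [0.2, 0.7, 0.1]])  # patches 0 and 1 win
img2 = np.array([[0.8, 0.1, 0.1], [0.6, 0.3, 0.1]])  # only patch 0 wins
print(mapping_probability([img1, img2]))  # counts [2, 1, 0], N = 2, c = 1
```

Calling `mapping_probability` once on the positive examples and once on the negative examples yields the two arrays that the ratio (1) compares; because $c > 0$, every estimate lies strictly between 0 and 1.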

David Andrzejewski

2005-12-19