|

My UW

|

UW Search

Computer Science Home Page

> CS 534

> HW #5 Projects

General Info

Final Grades

Syllabus

Getting Started

Readings

Homework

Midterm Exam

Lecture Slides

Apps, Code, Data

Comp Photog in the News

Other Reading

Other Courses

Links

|

|

|

|

CS 534: Computational Photography

Fall 2016

|

|

HW #5 Projects HW #5 Projects

- James Babb

Reconstructing Shredded Documents through Computational Photography Techniques

This project is based off of the DARPA Shredder Challenge where a shredded

document is given to a user and it must be reconstructed back into the

original form. My project will be looking at various techniques we can use to

reconstruct a shredded document ranging from edge matching, to edge detection,

and to feature point matching. I will be looking at the pros and cons of

various techniques with experiments to demonstrate their effectiveness (or

lack of) on reconstructing both real shredded documents and a "sample"

shredded document created virtually. Issues that will need to be addressed in

my experiments include, but are not limited to rotation of the "shredded rectangle"

for purposes of lining up with other "shredded rectangles", the rectangle is

flipped the wrong direction, changes in intensity, and of course missing pieces.

Pending time, I may also look into using SIFT to create a panorama of multiple

copies of a shredded document where pieces are missing from each chunk,

but can be combined together to create a full document. Finally, I will touch

briefly on how information theory and how much data we really need to

recover from the shredded document in order to understand its content.

- Sean Bauer and Abishek Muralimohan

Capturing Depth in Photos: Applications of the Z-Channel

In this project, we present a system for obtaining depth data (a Z-channel) along with conventional RGB channels while capturing a photo or video. The system consists of the Microsoft Kinect which calculates a coarse depth map of a scene using an infrared laser grid projected onto the scene. Realtime depth information, along with an RGB video stream (both at 640 x 480px) is made available to the user through the Kinect SDK (beta). Apart from this, we will also capture high-resolution still images using a conventional camera placed in close proximity to the Kinect.

In addition to matching a coarse depth map to a high resolution image, we seek to augment existing image processing algorithms with depth information. Our first examples will be seam carving and defocusing. This will allow the dynamic resizing of images without losing any important (based on depth) objects. Similarly, we can automatically defocus or create lens blur for background, foreground, or specific objects based on depth.

- Jacob Berger

Background Collage

Most previous methods for completing or replacing aspects of images use large databases of like-images. This project, instead, aims to replace parts of the background of an input image with portions of a small group of images, while maintaining a semantically valid image. In order to achieve this goal, my algorithm will focus on combing a segmentation method with a modified seam carving algorithm in order to remove whole low energy, background, sections. The algorithm will then combine SIFT feature point detection with blending algorithms to select like-areas from other pictures, replace the removed areas, and blend the output to create a semantically valid image. The entire algorithm will require minimal user input and should require only a small selection of like-images.

- Jacob Dejno and Allen Sogis-Hernandez

Video/Image Colorization and Recoloring Using User Input Optimization

Our project will follow along with a paper called "Colorization using Optimization" by Levin, Lischinski, and Weiss. This paper presents a faster, more accurate algorithm to recoloring photos and videos using a very small amount of user input. The user marks very few color areas and the picture or video based on the YUV colorspace. What we intend to do is further explore this algorithm, and add some functionality along the way. We want to make it easier than even the small input from the user, and will be looking to ways of automating this coloring/recoloring process.

- Nick Dimick and Lorenzo Zemella

Multi-Focus Image Fusion

Many types of cameras can have a very limited depth of focus and images taken with these cameras are able to only focus on objects only within the same plane. This leads to difficulty when trying to have several objects on different planes in focus. Specific cameras with confocal lenses may be used, but the performance is usually slow or unsuitable. This project looks at an alternative to using special equipment. Using a single camera to collect a group of images with different planes of focus, it is possible to develop an algorithm that will combine all of the images to form a single image with an extended depth of focus. The result is an image containing all objects of interest in focus as if it had been taken using a very large depth of focus. Advantages of this project include requiring no special equipment and the full capabilities of the "standard" camera can be used. This means the full camera resolution can be used as well as faster shutter speeds in dark areas that would otherwise not be possible. Computational power is cheap, so this is a very viable and efficient alternative. The idea of this project was inspired by the LYTRO camera and will be exploring the work completed by the Center of Bio-Image Informatics, University of California and possibly others.

- Tanner Engbretson, Zhiwei Ye and Muhammad Yusoff

Where Am I?: Estimating Geographic Information from a Single Image based on its Optimal Image Features Descriptor

This project is based on James Hays and Alexei A. Efros slides on estimating geographic information from a single image. The main objective of the project is to find the optimal image features descriptor for any particular image and detect its location based on geo-tagged photos. For our projects, we choose three different image features descriptors to compare their performance in searching or guessing the location of the input image. The three image features descriptors are Modeling The Shape of the Scene: A Holistic Representation Of The Spatial Envelope by Olivia and Torralba 2001, Image Retrieval Based On Multi-Texton Histogram by Guang-Hai Liu, LeiZhang , Ying-KunHou, Zuo-YongLi and Jing-YuYang 2010, and Geometric Context from a Single Image by Derek Hoiem, Alexei A. Efros and Martial Hebert 2005. We will implement these three descriptors on a single input image to find its location and compare their performance in searching for the input image nearest-neighbors. Based on the result of the comparison, we will group the input image accordingly so that for the future reference, we will know which image feature descriptor to use for any particular input image in searching for its geo-location.

- Nathan Figueroa

Reconstructing Shredded Documents

The efficient reassembly of shredded paper documents is an important task in forensic photography and counter intelligence. Modern computer algorithms have been used to reconstruct evidence from the Enron corporate scandal and to recover archives of the Stasi, the East German secret police. Nonetheless, "unshredding" remains a difficult and time-consuming process. This project implements a simple algorithm to extract shredded strips from a digital image and match them based on content.

- Matt Flesch and Erin Rasmussen

UW-Madison Hall View: An Extension and Integration with Google Maps Street View

Google Maps Street View currently exists and provides users with the ability to view 360 degree panoramas of many streets. Users can navigate down the street and view a panorama as if they were standing in the middle of the road. We will be using the Google Maps Javascript API to create integrate panoramas we take into the existing Google panoramas. We will take sets of images from multiple locations inside the Computer Science building and build a number of panorama tiles using AutoStitch. We may add some image manipulation at this point depending on the difficulty of the project. Then we will link these custom tiles together to allow users to virtually navigate the halls of the Computer Science building. We may also add the option to have users ask for directions to a specific room and give back the optimal halls to take.

- Aaron Fruth

iSpy Touchscreen Key-Logger

The iSpy program is a key-logging application that takes in a video feed, detects what buttons on the keyboard are being pressed, and outputs the typed letters into a text file.

The normal process of reading the input on a touchscreen involves several steps. First, the phone is detected in the source video and the phone is stabilized to make the phone screen easier to compute. Next, the phone image is compared to a reference image. In case part of the screen is blocked by the user's hand, the reference image will fill in the blocked area for the algorithm to work. After alignment, the algorithm separates the magnified letter from the rest of the keyboard on each frame of the video feed, and places the letter into a text buffer. While the video plays, the complete text entered into the touch device is tracked and placed into a text file.

There are several positives to this method of key-logging. First, no direct access to a target's phone or phone service is necessary to read information typed in. Second, a low-quality video feed is enough to get results from a phone. This is because the algorithm focuses on what key is magnified verses what is displayed on the screen, so a less clear video is required. Third, the algorithm can extract data from a phone, no matter the distortion or reflection. However, there are drawbacks of using this system, mostly due to the behavior of the phone's keyboard. Magnification is not on the space key or delete key on most keyboards, so the text extracted is often words ran into another. This is a minor flaw, however, because the text is still easily readable by human beings, even without spaces and with spelling errors. Also, phones with magnification turned off or phones with a privacy screen cannot be read. These are two ways to prevent being targeted using this method of key-logging.

Because this program is designed to implement many functions to receive input, I will focus on only one part of the program, the function that receives the referenced image and outputs the character pressed. The input image will be provided by a screenshot taken from an iPhone 4 with one character pressed, and the function will compare this to reference letters from an iPhone GUI reference kit. This will allow the program to work on a stable input and test the theory behind the iSpy program.

- Chris Hall and Lee Yerkes

Panoramas from Video with Occlusion Removal

Videos have been shown to be a good source of images for producing panoramas. Videos are especially good for cases where many images need to be captured quickly due to dynamics of either the target being imaged or the platform capturing the images. Such cases can be used for creation of activity synopsis synthesis, such as those shown by Liu, Hu, and Gleicher in "Discovering Panoramas in Web Videos," it can also be used for quick and simple occlusion removal from planar scenes. The intent of this project is to create panoramas from video then apply to these special cases.

- Caitlin Kirihara and Tessa Verbruggen

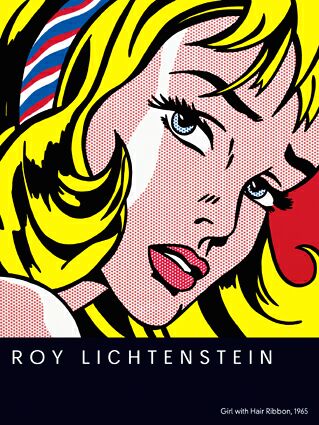

Constructing Lichtensteinesque Images

Roy Lichtenstein is a pop artist who was mainly active in the 1960s. His images are very much like in a comic strip. They have large smooth areas, of which some have a dot-matrix effect, and black edges. For example:

For this assignment, we will try to reproduce this effect. We will take an input image and produce something in the style of Roy Lichtenstein. To achieve this, we will apply three different effects. First, we will use a bilateral filter to smooth regions of a similar color to one color. Next, we will 'remove' pixels (change them to white) to achieve a dot-matrix effect. Lastly, we will use an edge-detection algorithm to find the edges, and add these as black lines on top of the image we gained before.

The overall idea is not based on a specific paper. However, for the separate parts, we will probably be using previously written papers to implement the algorithms, such as "Bilateral Filtering for Gray and Color Images" by C. Tomasi and R. Manduchi (1998) and some information on edge detection.

- Cara Lauritzen and Rose O'Donnell

Which is Better?: An Analysis of Photo Quality Assessment

This project is based on Luo, Wang, and Tang's "Content-Based Photo

Quality Assessment." The main objective of the project is the

implementation of the techniques as described in the paper. Regional

and global features are extracted in order to assess photo quality.

The paper describes multiple methods for extracting subject areas, and

also various types of regional and global features. Our program

determines the highest quality photo from a set of photographs. We

compare the results when different combinations of methods for

extraction and feature detection are used. We also collect human-

based quality assessment to gage the effectiveness of the different

combinations. The paper divides photos into seven categories based on

content. We use three of these categories: landscape, plant, and

architecture. For these reasons we do not implement human based

subject extraction or human based feature detection.

- Jared Lutteke and Sam Wirch

Fine Filtering Geotagged Images Using Image Content

With the millions of publicly available images available today, it is easy to go to a photo sharing website, such as Flickr, and search for photos with a given tag. It is also possible to search geotagged photos based on a given image location. However, little is available in finding photos with certain content at an available location. An example of this would be finding photos near the capitol which are of the statue in front of the capitol building.

Our project will be based on the websites such as Panoramio, but providing additional clustering of photos around a location and doing image content analysis to provide further filtering capabilities. The key realization being that while photos are often around similar locations, geotag information does include what the photo is of or the orientation of the camera. We hope to provide an interface where a user could get specific images with content they desire at a certain geotagged location.

Some methods we intent to utilize are content recognition would be tag filtering, feature descriptors, and color analysis. These are of course subject to change depending on time constraints and implementation. We wish to offer as many filtering options as possible. For image acquisition, we intend to use publicly available photos, most likely from Flickr. Flickr currently has millions of geotagged photos for use, giving us an easy source of images. In addition to the filtering, we wish to cluster related photos together within a geotagged location. The goal of our project is to extend photos related by location and provide additional clustering so a user traveling to a specific place could find photos with certain color, or with certain content.

- Phil Morley

Haze Removal and Depth Modeling

This project will investigate the use of haze removal as shown in the paper "Haze Removal Using Dark Channel Prior" by K. He, J. Sun, and X. Tang. I will implement their code from scratch and experiment with parameters to maximize haze removal. The method also creates a depth map. I hope to use this depth map to create a unique form of modeling the new scene such as in a 3D model.

- Ryan Penning

Image Improvement Utilizing Methods from Compressed Sensing

The popularity of smart phones has allowed people to carry a camera almost anywhere they go. Unfortunately, the images produced using these mobile devices often leaves much to be desired. In particular, the images frequently exhibit a large amount of noise, and are of a relatively low resolution. One technique that my provide a path to improving image quality without expensive hardware changes is compressed sensing. The heart of this technique relies on the assumption that when completing a data set, the simplest result is most likely to be the correct one (under a given set of prerequisite conditions). Massimo Fornasier and Holger Rauhu outline this algorithm as it applies to image processing in their work. I plan to attempt to apply this algorithm to images obtained from mobile devices. By first obtaining a low resolution, noisy set of images, and then utilizing SIFT feature matching to align these image and map them to a higher resolution image matrix. This results in a relatively sparse image matrix, which can then be completed using L1-minimization based techniques, as outlined in the paper mentioned above.

- Leigh-Ann Seifert and Brian Ziebart

Storyboard-It

Inspired by previous students' work "Sketchify," we'd like to not only turn video's into sketches but turn them into storyboards or comics. Our goal is to take "Sketchify" one step further by improving upon the results and orienting the program at only video instead of pictures. By applying other filters we can achieve the look of a colored sketch, similar to storyboards. Then, using an algorithm to find key frames in the video, the user will be able to select how many frames in the storyboard they want, and the program will find that many key frames. The end result will look like a storyboard or comic strip which can give a single picture representation of a story or video.

- Kong Yang

Defocus Magnification

The majority all new phones now come standard with a built-in camera. These cameras are usually limited in range of depth of field supported by the camera lens. Defocus magnification is a technique that estimate the amount of blur in different regions of an image and magnifies this blurriness to generate a shallower depth of field.

|

|

|