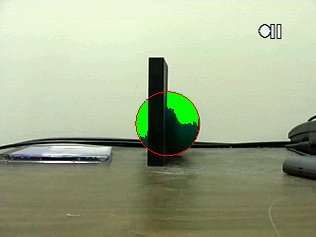

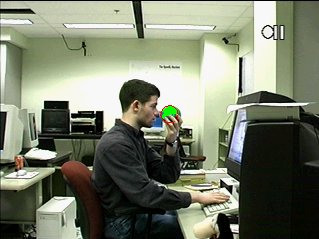

| Sample images containing a green ball. Pixels identified as belonging to the ball are in light green. |

The goal of this project was to track the 3D position of a ball in real time using a single camera. We made the following assumptions:

In light of the third assumption, we do no frame-to-frame tracking. This separates our implementation from other efforts, which typically focus attention on a small region of the image chosen from the previous location of the ball and some sort of motion model. Instead, we search the entire image for the ball in every frame. While this requires extra computation, hardware has advanced to the point where a small amount of computation on every pixel is feasible in real time. The advantage is that we can smoothly handle cases where the ball is temporarily lost due to occlusion or passing outside the frame.

Calculating the ball's 3D coordinates is trivial given accurate measurements of the center and radius of the projected circle. The problem is hence reduced to locating this circle in the image, which involves two subproblems: identifying points that possibly belong to the circle, and fitting a circle to these points.
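As a rough illustration of why this step is simple, the sketch below recovers a 3D position under a pinhole camera model. The focal length in pixels, the principal point, and the physical ball radius are assumptions that the text does not specify, and the names `Camera` and `ball_position` are hypothetical. The sketch also treats the ball's outline as a true circle, which holds only approximately away from the image center.

```python
from typing import NamedTuple, Tuple


class Camera(NamedTuple):
    """Hypothetical pinhole camera parameters (assumed known from calibration)."""
    focal_px: float  # focal length, in pixels
    cx: float        # principal point, x (pixels)
    cy: float        # principal point, y (pixels)


def ball_position(u: float, v: float, r_px: float,
                  ball_radius: float, cam: Camera) -> Tuple[float, float, float]:
    """Return (X, Y, Z) of the ball center in camera coordinates.

    u, v        -- center of the projected circle, in pixels
    r_px        -- radius of the projected circle, in pixels
    ball_radius -- physical radius of the ball (output uses the same unit)
    """
    if r_px <= 0:
        raise ValueError("projected radius must be positive")
    # Similar triangles: r_px / focal_px = ball_radius / Z
    z = cam.focal_px * ball_radius / r_px
    # Back-project the circle center to depth Z
    x = (u - cam.cx) * z / cam.focal_px
    y = (v - cam.cy) * z / cam.focal_px
    return x, y, z
```

Because depth is inversely proportional to the measured radius, a small error in the radius of a distant ball produces a large error in depth, which is why the accuracy of the circle fit matters.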

Candidate circle points are identified using a user-defined color (the ball color) and two user-defined thresholds, one low and one high. The image is first scanned for points that are within the lower threshold of the ball color. Then, for each such point, a seed-fill algorithm recursively identifies all points within the higher threshold of that point's color. We measure the distance between two colors by converting their RGB values to HSV coordinates and taking the absolute value of the difference in hue. Colors with saturation below a threshold are automatically discarded (which means that we can't track grey objects).
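The two-threshold scheme can be sketched roughly as follows. This is an illustration under several assumptions, not our actual code: the image is a list of rows of `(r, g, b)` tuples, the fill is 4-connected and uses an explicit stack rather than recursion, the hue distance wraps around the color circle (the text only says "absolute difference"), and the `min_sat` default and all function names are hypothetical.

```python
import colorsys


def hue_sat(pixel):
    """Return (hue, saturation) in [0, 1] for an (r, g, b) pixel with 0-255 channels."""
    r, g, b = pixel
    h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h, s


def hue_distance(h1, h2):
    """Absolute hue difference; wrapping around the hue circle is an added assumption."""
    d = abs(h1 - h2)
    return min(d, 1.0 - d)


def segment_ball(image, ball_rgb, low, high, min_sat=0.2):
    """Return the set of (row, col) pixels classified as ball.

    Seeds are pixels within `low` of the ball hue; each seed is grown to
    neighbours within `high` of the seed's hue. Low-saturation pixels are skipped.
    """
    height, width = len(image), len(image[0])
    hs = [[hue_sat(p) for p in row] for row in image]
    ball_hue, _ = hue_sat(ball_rgb)
    mask = set()
    for y in range(height):
        for x in range(width):
            h, s = hs[y][x]
            if (y, x) in mask or s < min_sat or hue_distance(h, ball_hue) > low:
                continue  # already found, too grey, or not close enough to seed
            seed_hue = h
            mask.add((y, x))
            stack = [(y, x)]
            while stack:  # iterative seed fill from this seed
                cy, cx = stack.pop()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and (ny, nx) not in mask:
                        nh, ns = hs[ny][nx]
                        if ns >= min_sat and hue_distance(nh, seed_hue) <= high:
                            mask.add((ny, nx))
                            stack.append((ny, nx))
    return mask
```

The tight threshold keeps stray background pixels from starting a region, while the loose threshold lets a region that has started extend over shaded or highlighted parts of the ball.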

Once circle pixels have been identified, we use a simple scanline algorithm to identify edge pixels: each row and each column is scanned from one end of the image to the other, and the first and last circle pixels encountered are marked as edges. We experimented with more sophisticated edge detection methods, such as Canny detection, but the final results were of similar quality. A multiresolution Hough transform is then used to fit a circle to these edge points.
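A minimal sketch of the scanline step, assuming the circle pixels are available as a set of `(row, col)` pairs (the function name and data layout are hypothetical): rather than literally walking each row and column, it records the smallest and largest coordinate seen, which marks the same first and last pixels.

```python
def scanline_edges(mask):
    """Return the first and last mask pixel of every row and every column.

    mask -- set of (row, col) pixels classified as ball
    """
    row_span = {}  # row -> (min col, max col)
    col_span = {}  # col -> (min row, max row)
    for y, x in mask:
        lo, hi = row_span.get(y, (x, x))
        row_span[y] = (min(lo, x), max(hi, x))
        lo, hi = col_span.get(x, (y, y))
        col_span[x] = (min(lo, y), max(hi, y))

    edges = set()
    for y, (lo, hi) in row_span.items():
        edges.add((y, lo))
        edges.add((y, hi))
    for x, (lo, hi) in col_span.items():
        edges.add((lo, x))
        edges.add((hi, x))
    return edges
```

Interior pixels and holes in the mask never become edges under this scheme, so small segmentation gaps inside the ball do not add spurious points to the circle fit.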

| Sample images showing the circle calculated by the Hough transform. The left image demonstrates our robustness with respect to occlusions; the right shows results for a more realistic scene. |
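The text does not describe the details of the multiresolution Hough transform, so the following is only one plausible coarse-to-fine variant, written as a sketch. Candidate centers are sampled on a grid, every edge point votes for the radii within half a grid step of its distance to each candidate, and the search window is then narrowed around the winner and the step reduced. The function name, the `steps` schedule, and the voting tolerance are all assumptions.

```python
import math
from collections import Counter


def hough_circle(points, width, height, steps=(8, 4, 2, 1)):
    """Coarse-to-fine Hough-style circle fit.

    points -- iterable of (x, y) edge pixels
    Returns (cx, cy, r) in pixels for the best-supported circle.
    """
    points = list(points)
    if not points:
        raise ValueError("no edge points to fit")
    x0, x1, y0, y1 = 0, width - 1, 0, height - 1  # start with the whole image
    best = None
    for step in steps:
        tol = step / 2.0  # radius tolerance shrinks with the grid step
        votes = Counter()
        for cx in range(x0, x1 + 1, step):
            for cy in range(y0, y1 + 1, step):
                for px, py in points:
                    d = math.hypot(px - cx, py - cy)
                    # vote for every integer radius within tol of this distance
                    k_min = max(1, math.ceil(d - tol))
                    k_max = math.floor(d + tol)
                    for k in range(k_min, k_max + 1):
                        votes[(cx, cy, k)] += 1
        best = max(votes, key=votes.get)
        cx, cy, _r = best
        # narrow the search window around the current best center
        x0, x1 = max(0, cx - step), min(width - 1, cx + step)
        y0, y1 = max(0, cy - step), min(height - 1, cy + step)
    return best
```

Voting of this kind tolerates missing arcs: an occluded ball contributes fewer votes to the correct circle, but the votes it does contribute still agree on a single center and radius.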