Grid Search

Background

SVMs have hyperparameters that cannot be learned during training by an optimization method like SGD. Instead, finding the optimal values means iterating: training and evaluating the model repeatedly with different settings.

A common method for tuning hyperparameters is a grid search, also known as a parameter sweep. The algorithm uses a performance metric to guide the optimization. Because the parameter space is unbounded and continuous, the bounds and the intervals of the grid must be set manually. Setting the parameters correctly can make the difference between a

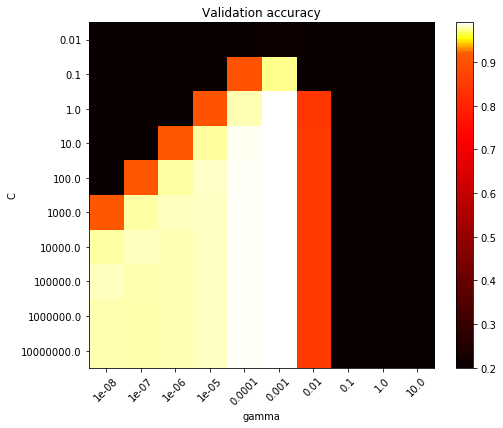
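The idea of a parameter sweep can be sketched in a few lines of plain Python. This is a minimal illustration, not the code used for our experiments: the helper `grid_search` and the toy metric `toy_score` are hypothetical names, and the toy metric simply stands in for a real performance measure.

```python
from itertools import product


def grid_search(score_fn, grid):
    """Evaluate score_fn on every combination of values in grid.

    grid maps each parameter name to its list of candidate values.
    Returns (best_params, best_score, all_results).
    """
    names = list(grid)
    results = []
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        results.append((params, score_fn(**params)))
    # Higher score is better; keep the combination with the highest score.
    best_params, best_score = max(results, key=lambda r: r[1])
    return best_params, best_score, results


# Hypothetical metric that peaks at x = 2, y = -1.
def toy_score(x, y):
    return -((x - 2) ** 2) - (y + 1) ** 2


best_params, best_score, results = grid_search(
    toy_score, {"x": [0, 1, 2, 3], "y": [-2, -1, 0]}
)
print(best_params, best_score)
```

The bounds and spacing of each list are chosen by hand, which is exactly the manual step described above: the search can only find the best point among those it was given.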

The parameter space for the SVM grid search we tested comprised the kernel parameter and the penalty on the slack variables. In our experiments we therefore varied C, the penalty parameter, and gamma, the parameter of the RBF kernel. The grid search algorithm trains an SVM classifier for every combination of these parameters, producing a surface of varying performance. We expect this surface to be roughly smooth, so it can be visualized with an interpolation method or, as in our case, a heatmap.
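The performance surface described above can be sketched as a 2D array with one entry per (C, gamma) pair. The following is an illustrative sketch under several assumptions: it uses scikit-learn's `load_digits` data and a single 75/25 hold-out split as the performance metric, the grid values are arbitrary examples, and `score_surface` is a hypothetical helper name.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC


def score_surface(X_train, y_train, X_test, y_test, Cs, gammas):
    """Train one RBF SVM per (C, gamma) pair and return a grid of accuracies.

    Row i corresponds to Cs[i], column j to gammas[j].
    """
    surface = np.zeros((len(Cs), len(gammas)))
    for i, C in enumerate(Cs):
        for j, gamma in enumerate(gammas):
            clf = SVC(kernel="rbf", C=C, gamma=gamma)
            clf.fit(X_train, y_train)
            surface[i, j] = clf.score(X_test, y_test)
    return surface


# Assumption: digits data and a fixed hold-out split for scoring.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

Cs = [0.1, 1.0, 10.0]        # illustrative values only
gammas = [1e-4, 1e-3, 1e-2]  # illustrative values only
surface = score_surface(X_train, y_train, X_test, y_test, Cs, gammas)
print(np.round(surface, 3))
```

An array laid out this way can be passed directly to a heatmap plotting routine, with C on one axis and gamma on the other.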

Our Simulation

The data set we used was provided by sklearn.datasets.

A description is given below. Note that each data point is an 8x8 image of a digit.

| Property | Value |
| --- | --- |
| Classes | 10 |
| Samples per class | ~180 |
| Samples total | 1797 |
| Dimensionality | 64 |
| Features | integers 0-16 |

For this example data set we used 5-fold cross-validation to determine which parameters give the best results. The bounds of our search were set as powers of 10 with exponents spread about 0, and 10 values were chosen for each parameter.
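A search of this kind could be set up with scikit-learn's `GridSearchCV`, roughly as follows. This is a sketch rather than our exact script: the exponent range of -3 to 3 is an assumption, the data is loaded with `load_digits`, and only 3 values per parameter are used so the demo runs quickly. Setting `N_VALUES = 10` gives 10 values per parameter.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Assumptions: exponents from -3 to 3, and a coarse grid to keep the demo fast.
N_VALUES = 3
LOW_EXP, HIGH_EXP = -3, 3

# Powers of 10 with evenly spaced exponents.
param_grid = {
    "C": np.logspace(LOW_EXP, HIGH_EXP, num=N_VALUES),
    "gamma": np.logspace(LOW_EXP, HIGH_EXP, num=N_VALUES),
}

X, y = load_digits(return_X_y=True)

# 5-fold cross-validated accuracy for every (C, gamma) combination.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print(search.best_params_)
print(round(search.best_score_, 3))

# Mean cross-validated accuracy as a grid: rows follow C, columns follow gamma.
scores = search.cv_results_["mean_test_score"].reshape(N_VALUES, N_VALUES)
```

The reshape relies on `GridSearchCV` iterating parameters in sorted key order with the last key varying fastest, so `C` indexes the rows and `gamma` the columns.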

We found that, for our specific sample data, performance was best with smaller gamma and larger C, so we moved the parameters to the range defined by the heat map displayed below. The grid search selected {C: 1.0, gamma: 0.001} as the best parameters, which scored an accuracy of 99%.