Connecting What to Say With Where to Look by Modeling Human Attention Traces

Showcase.

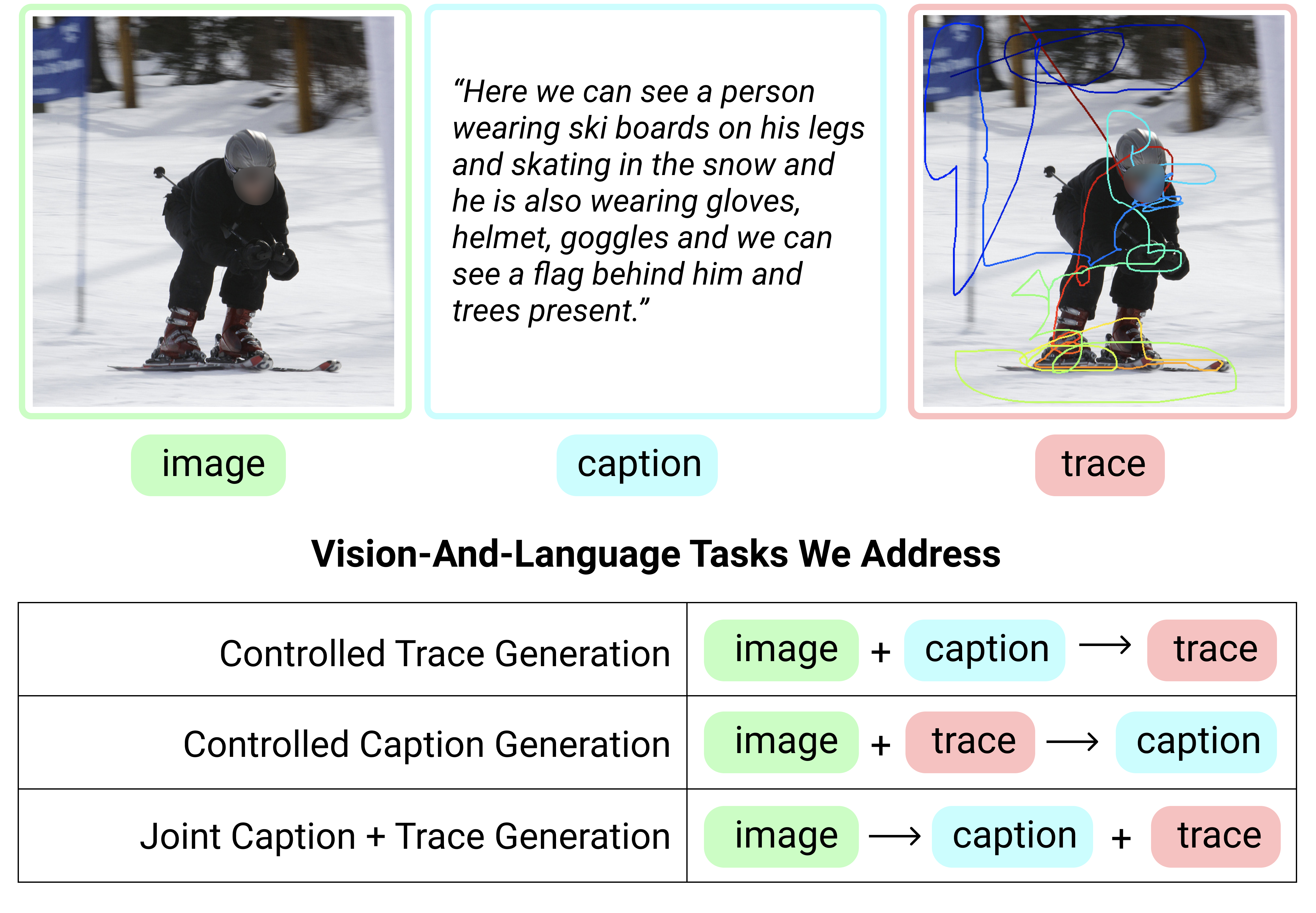

The three vision-and-language tasks, as illustratedon a single example from the Localized Narratives dataset. The first and third depicted tasks are proposed by us.

Abstract.

We introduce a unified framework to jointly model im-ages, text, and human attention traces. Our work is builton top of the recent Localized Narratives annotation frame-work, where each word of a given caption is pairedwith a mouse trace segment. We propose two novel tasks:(1) predict a trace given an image and caption (i.e., visualgrounding), and (2) predict a caption and a trace givenonly an image. Learning the grounding of each word ischallenging, due to noise in the human-provided traces andthe presence of words that cannot be meaningfully visuallygrounded. We present a novel model architecture that isjointly trained on dual tasks (controlled trace generationand controlled caption generation). To evaluate the qualityof the generated traces, we propose a local bipartite match-ing (LBM) distance metric which allows the comparisonof two traces of different lengths. Extensive experimentsshow our model is robust to the imperfect training dataand outperforms the baselines by a clear margin. More-over, we demonstrate that our model pre-trained on the pro-posed tasks can be also beneficial to the downstream task ofCOCO’s guided image captioning.

Qualitative results.

Visualizations on COCO:

Visualizations on Open image:

Visualizations on Flickr30k:

Visualizations on ADE20k: