HAMLET

Human, Animal, and Machine Learning: Experiment and Theory

Meetings:

Fridays, 3:45 p.m. to 5 p.m., in the Berkowitz Room (338 Psychology)

Schedule:

Fall 2010

- Sept. 17. Humans learn using manifolds, reluctantly.

- Jerry Zhu

- Department of Computer Science.

This talk is on humans' ability to perform manifold (a.k.a. graph-based) semi-supervised learning. When unlabeled data lie on a manifold in the feature space, the manifold information revealed by the unlabeled data may help a learner perform classification in a semi-supervised setting. Although well known in machine learning, the use of manifolds in human learning is largely unexplored. We show that humans may be encouraged to use the manifold, overcoming their strong preference for a simple, axis-parallel linear boundary. Furthermore, this behavior can be explained as Bayesian model selection with Gaussian processes.

Joint work with Bryan Gibson, Tim Rogers, Chuck Kalish, and Joe Harrison.
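For readers less familiar with the machine-learning side, here is a minimal Python sketch of graph-based semi-supervised classification. It uses the harmonic-function style of label propagation from the machine-learning literature; it is not the Gaussian-process account mentioned in the abstract and not the method used in the experiments. The function name `harmonic_label_propagation`, the epsilon-neighborhood graph, and the parameters `eps` and `sigma` are illustrative assumptions. Two rows of points stand in for two manifolds, with a single labeled point at opposite ends of each row.

```python
import math


def harmonic_label_propagation(points, labels, eps=0.6, sigma=0.5, tol=1e-9, max_iter=10_000):
    """Graph-based semi-supervised classification (illustrative sketch).

    points: list of (x, y) tuples.
    labels: dict mapping point index -> 0 or 1 for the few labeled points.
    Returns soft scores in [0, 1]; a score above 0.5 means class 1.
    """
    n = len(points)
    # Epsilon-neighborhood graph with Gaussian edge weights (an assumption):
    # only nearby points, i.e. neighbors along the same stretch of manifold, are linked.
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d2 = (points[i][0] - points[j][0]) ** 2 + (points[i][1] - points[j][1]) ** 2
            if d2 <= eps ** 2:
                w = math.exp(-d2 / (2 * sigma ** 2))
                weights[i][j] = weights[j][i] = w

    # Labeled points stay clamped; unlabeled points start undecided at 0.5.
    f = [float(labels[i]) if i in labels else 0.5 for i in range(n)]
    for _ in range(max_iter):
        max_change = 0.0
        for i in range(n):
            if i in labels:
                continue
            total = sum(weights[i])
            if total == 0.0:
                continue  # isolated point: no neighbors to average over
            # Harmonic update: each unlabeled score becomes the weighted mean of its neighbors.
            new = sum(w * fj for w, fj in zip(weights[i], f)) / total
            max_change = max(max_change, abs(new - f[i]))
            f[i] = new  # Gauss-Seidel: updated values are reused immediately
        if max_change < tol:
            break
    return f


# Two parallel "manifolds": row A at y = 0 and row B at y = 1, points 0.5 apart.
row_a = [(0.5 * k, 0.0) for k in range(11)]
row_b = [(0.5 * k, 1.0) for k in range(11)]
points = row_a + row_b
labels = {0: 0, len(points) - 1: 1}  # (0, 0) is class 0, (5, 1) is class 1
scores = harmonic_label_propagation(points, labels)
print([round(s) for s in scores[:11]])  # row A -> all 0
print([round(s) for s in scores[11:]])  # row B -> all 1
```

A learner that relied only on straight-line distance to the two labeled points would put (5, 0) in class 1, since it is 1 unit from (5, 1) but 5 units from (0, 0). Following the graph instead assigns it to class 0, the manifold-consistent choice.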

- Sept. 24. Supervised learning of conceptual structure from linguistic information

- Jon Willits

- Department of Psychology.

- Oct. 1. The Evocative Power of Words: Language modulates visual processing.

- Gary Lupyan

- Department of Psychology.

Humans are not the only animals to form categories, but are the only ones to have names for their categories. Beyond making linguistic communication possible, do words (verbal labels) enable or facilitate certain cognitive and perceptual processes? I will present findings from a variety of paradigms showing that verbal labels modulate ongoing perceptual processing in surprisingly deep ways. This linguistic modulation of perception may have important consequences for higher-level cognition such as the learning of new categories (Lupyan et al., 2007), memory (Lupyan, 2008), and conceptually grouping items along a particular dimension (e.g., color) (Lupyan, 2009) as well as inference in reasoning.

These findings suggest that language reorganizes conceptual representations and dynamically modulates representations that have been presumed to be immune from linguistic influence. The theoretical view emerging from these findings speaks to the inherent difficulty in distinguishing verbal and nonverbal representations and compels a re-examination of the Whorfian hypothesis.

- Oct. 8. Classification of Neural Processes: Spike Trains to EEG

- David Devilbiss

- Department of Psychology

This talk will focus on two topics our laboratory is currently working on: 1) classification of single-channel EEG into sleep and wake states and 2) modeling the relationship between the activity of neurons in the prefrontal cortex and the cognitive/behavioral processes involved in goal-directed behaviors. Although classification of EEG is important for a number of reasons, in the context of our research it is critical to know what behavioral state the animal is in when analyzing the effects of drugs on neuronal activity, since arousal levels alone modulate neural firing rates. To identify these arousal states from single-channel EEG in an unsupervised manner, we currently use a number of approaches, including principal component analysis, independent component analysis, and Gaussian mixture models. The question remains, however, whether better or more efficient methods are available. Psychoactive compounds also affect multiple cognitive/behavioral processes; however, how to express the relationship between neuronal discharge and cognitive functions is poorly understood. As a first step toward determining the degree to which neurons of the prefrontal cortex (PFC) participate in a number of cognitive/behavioral processes, recent work with Rick Jenison has led to constructing conditional intensity functions that explicitly relate the simultaneous contributions of numerous behavioral events to the discharge properties of PFC neurons.
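Of the unsupervised approaches listed above, a Gaussian mixture model is the easiest to show in a few lines. The sketch below fits a two-component, one-dimensional mixture by expectation-maximization (EM) in plain Python and labels each epoch by its most responsible component. It is an illustration under stated assumptions, not the laboratory's pipeline: the input is a synthetic scalar feature per EEG epoch (a stand-in for something like a band-power measure, not real data), two states are assumed, and the function name `gmm_1d_em` is hypothetical.

```python
import math
import random


def gmm_1d_em(x, n_iter=200, tol=1e-8):
    """Fit a two-component 1-D Gaussian mixture by EM.

    Returns (mixing weights, means, variances, responsibilities).
    """
    # Start the components at the extremes of the data so they begin well apart.
    mu = [min(x), max(x)]
    mean_all = sum(x) / len(x)
    var_all = sum((v - mean_all) ** 2 for v in x) / len(x)
    var = [var_all, var_all]
    pi = [0.5, 0.5]
    prev_ll = -math.inf
    resp = []
    for _ in range(n_iter):
        # E-step: responsibility of each component for each epoch, plus the log-likelihood.
        resp, ll = [], 0.0
        for v in x:
            dens = [
                pi[k] * math.exp(-(v - mu[k]) ** 2 / (2 * var[k])) / math.sqrt(2 * math.pi * var[k])
                for k in range(2)
            ]
            s = sum(dens)
            ll += math.log(s)
            resp.append([d / s for d in dens])
        # M-step: re-estimate weights, means, and variances from the responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            pi[k] = nk / len(x)
            mu[k] = sum(r[k] * v for r, v in zip(resp, x)) / nk
            # Small floor keeps a variance from collapsing to zero.
            var[k] = sum(r[k] * (v - mu[k]) ** 2 for r, v in zip(resp, x)) / nk + 1e-6
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return pi, mu, var, resp


random.seed(0)
# Synthetic stand-in for a per-epoch EEG feature (NOT real data):
# 150 epochs from one state centred at 0.0 and 150 from another centred at 3.0.
feature = [random.gauss(0.0, 0.5) for _ in range(150)] + [random.gauss(3.0, 0.5) for _ in range(150)]
weights, means, variances, resp = gmm_1d_em(feature)
# Hard assignment: each epoch goes to its most responsible component.
states = [max(range(2), key=lambda k: r[k]) for r in resp]
print([round(m, 2) for m in means])
```

The mixture only separates the epochs into clusters; which cluster corresponds to sleep and which to wake has to come from knowledge of the feature or from scored recordings, and real data may call for more states and more than one feature.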

- Oct. 15. What is it like to be a Bayesian (psychologist)? (Please note the temporary location: Rm 228, Psychology Building.)

- Chuck Kalish

- Department of Educational Science.

There has been a lot of interest in recent Bayesian models of learning and inference within psychology. There are strong supporters and strong detractors. It's not always clear, though, just what supporters are supporting or what detractors are detracting. In this talk I will lay out one story: to be a Bayesian means to approach inference as an inductive problem rather than as a transductive problem. I will talk about the difference between induction and transduction, and present some studies of young children's learning from examples to illustrate the value of inductive approaches.

- Oct. 22. *No meeting, Psych Department Colloquium all day.*

- Oct. 29. Goal-directed decision making as probabilistic inference

- Matt Botvinick

- Department of Psychology, Princeton University

Research in animal learning and behavioral neuroscience has distinguished between two forms of action control: a habit-based form, which relies on stored action values, and a goal-directed form, which forecasts and compares action outcomes based on a model of the environment. While habit-based control has been the subject of extensive computational research, the computational principles underlying goal-directed control in animals have so far received less attention. Among a number of algorithmic possibilities, one particularly interesting approach frames goal-directed decision making as probabilistic inference over a generative model of action. I will introduce this computational perspective and explore some ways in which it may shed light on the neural mechanisms underlying goal-directed behavior.
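A toy version of the decision-making-as-inference idea fits in a few lines: condition a small generative model of action on the goal being achieved, then read off the posterior over actions. The sketch below is an illustration only, not the model presented in the talk. The two actions, the outcome probabilities, and the function name `plan_as_inference` are made-up assumptions, and it covers a single decision step rather than sequential behavior.

```python
def plan_as_inference(prior, transition, reward_prob):
    """Return P(action | reward = 1) for a one-step generative model of action.

    prior:       dict action -> P(action)
    transition:  dict action -> {outcome: P(outcome | action)}
    reward_prob: dict outcome -> P(reward = 1 | outcome)
    """
    unnormalized = {}
    for action, p_action in prior.items():
        # P(reward = 1 | action): marginalize over the outcomes the model predicts.
        p_reward = sum(p_out * reward_prob[out] for out, p_out in transition[action].items())
        # Bayes' rule numerator: P(action) * P(reward = 1 | action).
        unnormalized[action] = p_action * p_reward
    evidence = sum(unnormalized.values())  # P(reward = 1)
    return {action: value / evidence for action, value in unnormalized.items()}


# Hypothetical world model: "left" usually leads to food, "right" rarely does.
prior = {"left": 0.5, "right": 0.5}
transition = {
    "left": {"food": 0.8, "nothing": 0.2},
    "right": {"food": 0.3, "nothing": 0.7},
}
reward_prob = {"food": 1.0, "nothing": 0.0}

posterior = plan_as_inference(prior, transition, reward_prob)
choice = max(posterior, key=posterior.get)
print(posterior)  # left ≈ 0.727, right ≈ 0.273
print(choice)     # left
```

Because the choice is computed from the world model rather than from stored action values, editing `transition` (say, making "right" the reliable route to food) changes the chosen action immediately, which is the contrast with habit-based control drawn in the abstract.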

- Nov. 5. Spatial Computing

- Michael Coen

- Departments of Computer Science and Biostatistics.

I'll introduce a model of computing that is tied closely to the shapes and densities of our datasets. The approach is visual and intuitive yet formal, non-parametric, and distribution-free, and it draws heavily on optimization theory. Applications are broad and include clustering, protein homology, text classification, spatial-based kernels, and distribution identification.

- Nov. 12. An integrated information theory of consciousness

- Giulio Tononi

- Department of Psychiatry

- Nov. 19. Adaptive Human-Computer Interfaces

- Bilge Mutlu

- Department of Computer Science

- Nov. 26. *Thanksgiving, no meeting.*

- Dec. 3. The role of sleep in the acquisition of new conceptual knowledge

- Tim Rogers

- Department of Psychology

Human memory appears to be supported by two distinct functional systems. The "fast-learning" medial temporal-lobe system can rapidly acquire memories of new experiences, and can maintain separate traces for even very similar events, but does not generalize well, whereas the "slow-learning" cortical system supports good generalization but must learn in a slow and interleaved fashion. It is clear that the two systems must work together in the acquisition of new concepts: the information learned quickly in the rapid system must eventually be consolidated in the system of knowledge acquired by the slow-learning system. Sleep has long been proposed as a mechanism for achieving this consolidation. During sleep, the medial temporal lobe is thought to "replay" recent learning events to the cortex, providing it with additional pseudo-experience that allows the new information to be integrated without disrupting previously stored knowledge. Though there is considerable evidence suggesting that sleep plays an important role in consolidating implicit forms of memory, there has been little work investigating the role of sleep in consolidating concept learning (i.e., learning of new categories). I will describe some new experiments providing the first evidence that sleep influences new concept learning. Specifically, relative to comparable waking periods, sleep appears to benefit memory for atypical or unusual aspects of the items learned, but not the more prototypical aspects. These data are consistent with a view of memory in which fast- and slow-learning systems both contribute to the acquisition of new information, with the fast-learning system taking disproportionate responsibility for atypical/unusual properties of objects, and the cortical system mainly acquiring knowledge of the category central tendencies. When memories are replayed during sleep, the unusual information is disproportionately weighted, leading to larger gains.

- Dec. 10. Speech perception as efficient coding

- Keith Kluender

- Department of Psychology

Fundamental principles that govern all perception, from transduction to cortex, are shaping our understanding of perception of speech and other familiar sounds. Here, ecological and sensorineural considerations are proposed in support of an information-theoretic approach to speech perception. Optimization of information transmission and efficient coding are emphasized in explanations of classic characteristics of speech perception, including perceptual resilience to signal degradation; variability across changes in listening environment, rate, and talker; and categorical perception. Experimental findings will be used to illustrate how a series of like processes operate upon the acoustic signal with increasing levels of sophistication on the way from waveforms to words. Common to these processes are the ways in which perceptual systems absorb predictable characteristics of the soundscape, over time scales ranging from the temporally local (adaptation) to extended periods (learning), thereby enhancing sensitivity to new information.

HAMLET mailing list

The MALBEC lectures ("Mathematics, Algorithms, Learning, Brains, Engineering, Computers")

Fall 2009 archive

Spring 2009 archive

Fall 2008 archive

Contact: David Devilbiss (ddevilbiss@wisc.ed), Chuck Kalish (cwkalish@wisc.ed), and Jerry Zhu (jerryzhu@cs.wisc.ed) (Add 'u' to the addresses)

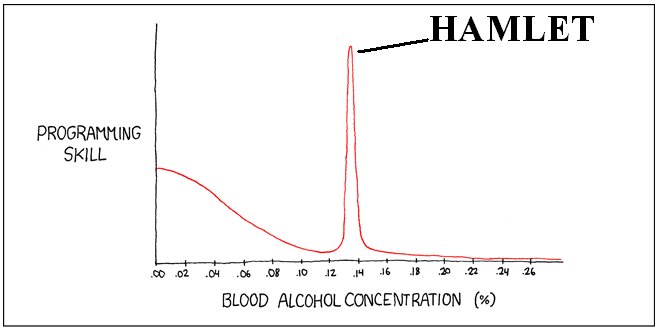

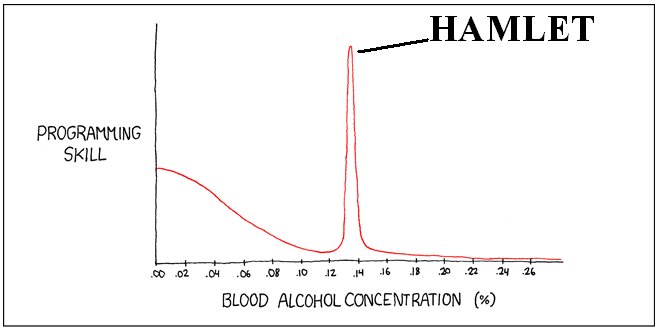

(Adapted from xkcd.com)
