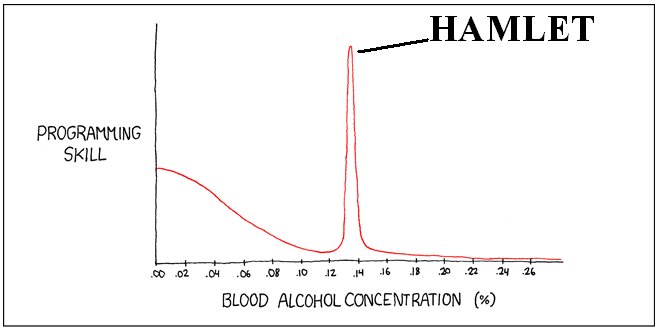

HAMLET

Human, Animal, and Machine Learning: Experiment and Theory

Abstract: Traditionally, semantic or conceptual processing has been viewed as manipulation of abstract symbols, with perceptual and motor systems serving only as the input and output respectively. Embodiment and situated cognition theories that posit a close relationship between perception/action and semantic systems have gained currency in recent years. Neuroimaging and modeling studies that shed light on the role of sensory-motor systems in conceptual processing will be presented, and open questions and potential solutions will be discussed.

Abstract: We consider the task of human collaborative category learning, where two people work together to classify test items into appropriate categories based on what they learn from a training set. We propose a novel collaboration policy based on the Co-Training algorithm in machine learning. In our human Co-Training collaboration policy, the two people play the role of the base learners. The policy restricts the view of the data that each person can see and limits their communication to the exchange of their labelings on test items. We conduct a series of human behavioral experiments to explore the effects of different collaboration policies. We show that under the Co-Training policy the collaborators jointly produce unique and potentially valuable classification outcomes that are not possible under either full collaboration or no collaboration. We demonstrate that these observations can be explained with appropriate machine learning models.
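To make the policy concrete, here is a minimal Python sketch of co-training in the spirit of the abstract. Two learners each see only one view (one feature) of every item, and the only thing they pass to each other is a label on a test item. The nearest-centroid learners, the margin-based confidence score, and the one-item-per-turn schedule are illustrative assumptions, not the procedure used in the experiments.

```python
"""Co-training sketch: two learners, disjoint views, labels are the only message."""


def centroid_classifier(points, labels):
    """Fit a 1D nearest-centroid classifier; return predict(x) -> (label, margin)."""
    groups = {}
    for x, y in zip(points, labels):
        groups.setdefault(y, []).append(x)
    centroids = {y: sum(xs) / len(xs) for y, xs in groups.items()}

    def predict(x):
        # Rank classes by distance to x; confidence is the gap between the
        # closest and second-closest centroid (an assumed confidence measure).
        ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
        if len(ranked) == 1:
            return ranked[0], float("inf")
        margin = abs(x - centroids[ranked[1]]) - abs(x - centroids[ranked[0]])
        return ranked[0], margin

    return predict


def co_train(train, test, rounds):
    """train: list of ((view_a, view_b), label); test: list of (view_a, view_b).

    Learner A only ever sees view_a and learner B only view_b. On each turn a
    learner labels the test item it is most confident about; that label is
    shared, so both learners add the item (in their own view) to their data.
    """
    labeled = ([(x[0], y) for x, y in train], [(x[1], y) for x, y in train])
    unlabeled = list(range(len(test)))
    assigned = {}
    for _ in range(rounds):
        for view in (0, 1):
            if not unlabeled:
                return assigned
            predict = centroid_classifier(*zip(*labeled[view]))
            best = max(unlabeled, key=lambda i: predict(test[i][view])[1])
            label = predict(test[best][view])[0]
            assigned[best] = label
            unlabeled.remove(best)
            # Exchange labels only: each learner stores the item in its own view.
            labeled[0].append((test[best][0], label))
            labeled[1].append((test[best][1], label))
    return assigned


# Hypothetical toy data: class 0 near 0 and class 1 near 10 in both views.
train = [((0.0, 0.0), 0), ((10.0, 10.0), 1)]
test = [(1.0, 2.0), (9.0, 8.0), (2.0, 1.0), (8.0, 9.0)]
print(co_train(train, test, rounds=2))  # → {0: 0, 2: 0, 1: 1, 3: 1}
```

The design mirrors the policy's two restrictions: neither learner ever reads the other's feature, and what crosses between them is a labeling, not a reason for it.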

Abstract: The world as we know it is lawful, and objects and events that occur in the natural environment are highly redundant. Through evolution and experience, sensorineural systems should extract and exploit this redundancy, emphasizing unpredictable inputs that better inform behavior. This approach, the efficient coding hypothesis, has long neglected the role of perception in this process. I will be discussing multiple lines of research that demonstrate efficient coding in the perception of a wide variety of sounds, including speech, musical instruments, and novel sounds with well-controlled statistical structure. Simple computational models will be shown to explain considerable amounts of listener performance and lend insights into perceptual organization.

Abstract: Organisms make estimates of object motion from limited sensory input. In binocular vision, the information needed to infer an object's 3D motion trajectory is carried by temporal changes in the two retinal images. I'll review previous work on the neural mechanisms underlying 3D motion perception, before turning to a curious finding: Human observers systematically misperceive the 3D trajectory of an object moving through depth, reporting motion directed toward an observer as biased to more lateral directions (Harris & Dean 2003).

Previous work on 2D motion perception has shown that a Bayesian model with a prior for slow speeds can account for biases in 2D speed and direction percepts (Weiss et al. 2002; Stocker & Simoncelli 2006). Biased 3D motion perception might similarly be understood as a natural consequence of optimal inference under sensory uncertainty and a prior for slow speeds.

To test this prediction, we presented a plane of dots viewed through a circular aperture. On each trial, the plane moved along a particular motion trajectory, and observers reported an estimate of the plane's trajectory. We varied the contrast of the visual stimulus, which effectively varied the noise in retinal measurements. Consistent with the predictions of a Bayesian model, lower contrast increased the bias in estimates of motion direction. These results extend previous Bayesian models of motion perception, illustrate how limited sensory information leads to biased estimation of object motion, and build toward an understanding of the strengths and limitations of 3D motion perception.
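A two-component version of this argument can be sketched in Python. Each velocity component is estimated as the posterior mean under a Gaussian measurement likelihood and a zero-mean Gaussian prior (the slow-speed prior), which shrinks the measurement toward zero by the factor prior_sd**2 / (prior_sd**2 + noise_sd**2). The remaining assumptions are ours, not the study's: the lateral (x) and depth (z) components are estimated independently, the depth component is measured with more noise than the lateral one, lowering contrast scales both noise levels by the same factor, and all numbers are arbitrary.

```python
import math


def shrink(measured, noise_sd, prior_sd):
    """Posterior mean of one velocity component: Gaussian likelihood x zero-mean Gaussian prior."""
    return measured * prior_sd**2 / (prior_sd**2 + noise_sd**2)


def perceived_direction(true_deg, contrast, noise_x=0.2, noise_z=0.6, prior_sd=1.0):
    """Estimated direction in degrees: 0 = straight toward the observer, 90 = purely lateral.

    Assumed: noise scales as 1/contrast, and depth noise (noise_z) exceeds
    lateral noise (noise_x).
    """
    vx = math.sin(math.radians(true_deg))  # lateral component
    vz = math.cos(math.radians(true_deg))  # depth component (toward the observer)
    ex = shrink(vx, noise_x / contrast, prior_sd)
    ez = shrink(vz, noise_z / contrast, prior_sd)
    return math.degrees(math.atan2(ex, ez))


for c in (1.0, 0.5):
    print(f"contrast {c}: true 30.0 deg -> perceived {perceived_direction(30.0, c):.1f} deg")
```

Because the noisier depth component is shrunk more than the lateral one, the estimate tilts toward lateral directions, and halving contrast strengthens the tilt.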

Abstract: What would it take to develop machine learners that run forever, each day improving their performance and also the accuracy with which they learn? This talk will describe our attempt to build a never-ending language learner, NELL, that runs 24 hours per day, forever, and that each day has two goals: (1) extract more structured information from the web to populate its growing knowledge base, and (2) learn to read better than yesterday, by using previously acquired knowledge to better constrain its subsequent learning.

The approach implemented by NELL is based on two key ideas: coupling the semi-supervised training of hundreds of different functions that extract different types of information from different web sources, and automatically discovering new constraints that more tightly couple the training of these functions over time. NELL has been running nonstop since January 2010 (follow it at http://rtw.ml.cmu.edu), and has extracted a knowledge base containing approximately 440,000 beliefs as of October 2010. This talk will describe NELL, its successes and its failures, and use it as a case study to explore the question of how to design never-ending learners.

Abstract: How does the human brain represent meanings of words and pictures in terms of underlying neural activity? This talk will present our research using machine learning methods together with fMRI and MEG brain imaging to study this question. One line of our research has involved training classifiers that identify which word a person is thinking about, based on their observed neural activity. A second line involves training a computational model that predicts the neural activity associated with arbitrary English words, including words for which we do not yet have brain image data.
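The first line of research (identifying which word a person is thinking about) can be illustrated with a minimal Python sketch of one simple classifier: average the training activity patterns for each word into a template, then label a new pattern with the word whose template it correlates with best. The talk does not say which classifiers were used; the correlation-to-template rule, the word list, and the synthetic "voxel" data here are all assumptions for illustration.

```python
import random


def pearson(a, b):
    """Pearson correlation between two equal-length activity vectors."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def train_templates(trials):
    """trials: list of (word, activity_vector); returns the mean vector for each word."""
    sums, counts = {}, {}
    for word, vec in trials:
        acc = sums.setdefault(word, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[word] = counts.get(word, 0) + 1
    return {w: [v / counts[w] for v in acc] for w, acc in sums.items()}


def classify(templates, vec):
    """Return the word whose template correlates most strongly with vec."""
    return max(templates, key=lambda w: pearson(templates[w], vec))


def demo(seed=0, n_voxels=50, n_train=6, noise=0.5):
    """Simulate noisy trials around a fixed pattern per word; return held-out accuracy."""
    rng = random.Random(seed)
    words = ["hammer", "celery", "house"]  # hypothetical stimulus words
    patterns = {w: [rng.gauss(0, 1) for _ in range(n_voxels)] for w in words}

    def trial(w):
        return [p + rng.gauss(0, noise) for p in patterns[w]]

    templates = train_templates([(w, trial(w)) for w in words for _ in range(n_train)])
    correct = sum(classify(templates, trial(w)) == w for w in words)
    return correct / len(words)


print(demo())
```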

Abstract (1): An important problem in cognitive psychology is to quantify the perceived similarities between stimuli. Previous work attempted to address this problem with multi-dimensional scaling (MDS) and its variants. However, MDS approaches have several shortcomings. We propose Yada, a novel general metric learning procedure based on two-alternative forced-choice behavioral experiments. Our method learns forward and backward nonlinear mappings between an objective space, in which the stimuli are defined by the standard feature vector representation, and a subjective space, in which the distance between a pair of stimuli corresponds to their perceived similarity. We conduct experiments on both synthetic and real human behavioral datasets to assess the effectiveness of Yada. The results show that Yada outperforms several standard embedding and metric learning algorithms, both in terms of likelihood and recovery error.
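The likelihood criterion mentioned above can be illustrated with a minimal Python sketch of how a candidate subjective space might be scored against forced-choice data. Each trial is assumed to be a triplet (an anchor and two alternatives, with the participant picking the alternative that seems more similar), the choice probability is assumed to be a logistic function of the difference in subjective squared distances, and a per-feature scaling stands in for Yada's learned nonlinear forward mapping. None of these choices is taken from the paper.

```python
import math


def forward_map(x, weights):
    """Hypothetical stand-in for a learned objective-to-subjective mapping: per-feature scaling."""
    return [w * xi for w, xi in zip(weights, x)]


def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def log_sigmoid(z):
    """Numerically stable log(1 / (1 + exp(-z)))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def log_likelihood(trials, weights):
    """trials: list of (anchor, alt_b, alt_c, chose_b) with objective feature vectors."""
    total = 0.0
    for anchor, alt_b, alt_c, chose_b in trials:
        fa, fb, fc = (forward_map(s, weights) for s in (anchor, alt_b, alt_c))
        # B is more likely to be chosen the closer it is to the anchor than C is.
        z = sq_dist(fa, fc) - sq_dist(fa, fb)
        # 1 - sigmoid(z) == sigmoid(-z), so choosing C flips the sign.
        total += log_sigmoid(z if chose_b else -z)
    return total


# Hypothetical response: a participant who mostly ignores feature 0 judges
# (1, 0) to be closer to (0, 0) than (0, 1) is.
trials = [((0.0, 0.0), (1.0, 0.0), (0.0, 1.0), True)]
print(log_likelihood(trials, weights=(0.1, 1.0)))  # better fit
print(log_likelihood(trials, weights=(1.0, 0.1)))  # worse fit
```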

Abstract (2): This paper presents a computational model of word learning, with the goal of understanding the mechanisms through which word learning is grounded in multimodal social interactions between young children and their parents.

We designed and implemented a novel multimodal sensing environment consisting of two head-mounted mini cameras placed on the child’s and the parent’s foreheads, motion tracking of head and hand movements, and recording of the caregiver’s speech. Using this new sensing technology, we captured the dynamic visual information from both the learner’s perspective and the parent’s viewpoint while they were engaged in a naturalistic toy-naming interaction. We next implemented various data processing programs that automatically extracted visual, motion and speech features from raw sensory data. A probabilistic model was developed that can predict the child’s learning results based on sensorimotor features extracted from child-parent interaction. More importantly, through the trained regression coefficients in the model, we discovered a set of perceptual and motor patterns that are informatively time-locked to words and their intended referents and predictive of word learning. These patterns provide quantitative measures of the roles of various sensorimotor cues that may facilitate learning, and shed light on the underlying real-time learning mechanisms in child-parent social interactions.

Abstract: Systems that sense, learn, and reason from streams of data promise to provide extraordinary value to people and society. Harnessing computational principles to build systems that operate in the open world can also teach us about the sufficiency of existing models, and frame new directions for research. I will discuss efforts on learning and inference in the open world, highlighting key ideas in the context of projects in transportation, energy, and healthcare. Finally, I will discuss opportunities for building systems with new kinds of open-world competencies by weaving together components that leverage advances from several research subdisciplines.

Bio: Eric Horvitz is a Distinguished Scientist at Microsoft Research. His interests span theoretical and practical challenges with developing systems that perceive, learn, and reason. He has served as President of the Association for the Advancement of Artificial Intelligence (AAAI) and is now the Immediate Past President of the organization. He has been elected a Fellow of the American Association for the Advancement of Science (AAAS) and of the AAAI and serves on the NSF Computer and Information Science and Engineering (CISE) Advisory Board and the Council of the Computing Community Consortium. He received his PhD and MD degrees at Stanford University. More information can be found at http://research.microsoft.com/~horvitz

Abstract: Nearly 2500 years ago, Hippocrates called for the careful recording and sharing of evidence about patients and their illnesses. While this charge sits at the foundation of modern medicine, large amounts of critical healthcare data still go unrecorded, in contradiction to the Hippocratic school of medicine and to the Hippocratic oath that all physicians recite upon receiving their medical degree. I will discuss insights and efficiencies that can be gained in healthcare by learning predictive models from large amounts of data about patients and their encounters with healthcare providers.

Abstract: To describe the stochastic environment in descriptive or prescriptive models, it is implicitly assumed that enough data will be available to guarantee the validity of a decision or the consistency of a statistical estimate. Unfortunately, in real-life environments the available data are rarely enough to reach the asymptotic range, either because the data do not exist or because there is not enough time to collect an adequate database before decisions or estimates must be produced. One serious shortcoming is our inability to systematically blend data and non-data information, in other words, to fuse hard and soft information. The lecture deals with these challenges.

Abstract:

Beers can be characterized in terms of attributes such as bitterness, maltiness, and color. Beer lovers aren't usually good at producing accurate quantitative assessments of attributes, but they can provide relatively reliable comparisons between beers (e.g., beer X is more similar to beer A than to beer B). Presumably the attributes affect how people rank beers. This motivates the following questions. Suppose that a person is asked to rank N beers and that every beer is a point in a D-dimensional Euclidean (attribute) space. How many different rankings are possible assuming that the ranking must agree with distances in the underlying attribute space? How many comparison queries are required in order to determine the person's ranking? We answer these questions and provide an algorithm that determines the person's ranking in a provably optimal number of queries.
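The geometry behind the query-counting question can be shown in a few lines of Python. If a person's ranking is ordered by distance from an unobserved ideal point r in the attribute space (this ideal-point reading is our assumption), then the query "is beer i closer than beer j?" is a linear test in r: ||x_i - r||^2 < ||x_j - r||^2 exactly when 2 r·(x_j - x_i) < ||x_j||^2 - ||x_i||^2. Each answer therefore cuts the space of possible r with a hyperplane. The sketch below simply sorts using these queries and counts them; it is not the algorithm from the talk, which exploits this geometry to skip queries whose answers are already implied.

```python
from functools import cmp_to_key


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def closer(xi, xj, r):
    """True if beer xi is closer to ideal point r than beer xj, as a halfspace test in r."""
    diff = [b - a for a, b in zip(xi, xj)]
    return 2 * dot(r, diff) < dot(xj, xj) - dot(xi, xi)


def rank_with_queries(beers, ideal):
    """Rank beers from answers to pairwise queries; return (ranking, number of queries)."""
    queries = 0

    def compare(i, j):
        nonlocal queries
        queries += 1
        if closer(beers[i], beers[j], ideal):
            return -1
        return 1 if closer(beers[j], beers[i], ideal) else 0

    order = sorted(range(len(beers)), key=cmp_to_key(compare))
    return order, queries


# Hypothetical beers as (bitterness, maltiness) and a hypothetical ideal point.
beers = [(0.2, 0.9), (0.8, 0.1), (0.5, 0.5), (0.9, 0.9)]
order, n_queries = rank_with_queries(beers, ideal=(0.6, 0.4))
print(order, n_queries)  # order is [2, 1, 3, 0]
```

A generic comparison sort like this one spends on the order of N log N queries regardless of D; the point of the halfspace view is that an adaptive method can do better when D is small.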

On the other hand, suppose we would like to discover the embedding of the beers in a D-dimensional Euclidean attribute space given only comparisons between the beers provided by some expert. Since non-metric multidimensional scaling uses only the ranking of the distances between beers to embed them, how many rankings, or equivalently, embeddings, are possible assuming the ranking must agree with distances in the underlying attribute space? How many queries are required to determine this embedding? We partly answer these questions and provide an algorithm that determines the embedding in a conjectured optimal number of queries.
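For the embedding question the data are ordinal: an expert's answer says only which of two distances is smaller. The minimal Python sketch below counts how many such answers a candidate embedding violates, the kind of consistency condition non-metric MDS works with. It also shows why comparisons can determine an embedding only up to transformations that preserve the order of all distances (for example uniform scaling and translation): such transformations leave every answer unchanged. The triplet format, point labels, and coordinates are hypothetical.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def violations(embedding, answers):
    """answers: list of (i, j, k) meaning 'i is more similar to j than to k'."""
    return sum(
        1
        for i, j, k in answers
        if not sq_dist(embedding[i], embedding[j]) < sq_dist(embedding[i], embedding[k])
    )


def scale_and_shift(embedding, s, shift):
    """Uniform scaling plus translation: distances change, their order does not."""
    return {name: tuple(s * c + d for c, d in zip(p, shift)) for name, p in embedding.items()}


# Hypothetical expert answers about three beers.
answers = [("A", "B", "C"), ("B", "A", "C"), ("C", "A", "B")]
good = {"A": (0.0, 0.0), "B": (1.0, 0.0), "C": (0.0, 3.0)}
bad = {"A": (0.0, 0.0), "B": (0.0, 3.0), "C": (1.0, 0.0)}
print(violations(good, answers))                                   # → 0
print(violations(scale_and_shift(good, 3.0, (5.0, 5.0)), answers))  # → 0
print(violations(bad, answers))                                    # → 1
```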

Abstract: The term "brain network" means many things to many people. Much of the work I have seen uses it in a rather loose sense; a brain network is simply a summary statistic (often referred to as the "functional connectivity") with little or no direct relation to physical structure or signaling in the brain. The term "effective connectivity" refers to causal interactions between brain components, and seems a more concrete notion of a brain network. I will discuss three probabilistic approaches to modeling and inferring networks from data: correlation thresholding, conditional independence graphs, and causal directed acyclic graphs (aka structural equation modeling). The latter can represent effective connectivity, while the former two may be at best loosely associated with effective connectivity and could even be misleading.
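The difference between the first two approaches can be seen in a small simulation, sketched here in Python. Three hypothetical signals form a chain X -> Y -> Z, so X influences Z only through Y. Thresholding the correlation draws an X-Z edge, whereas the partial correlation of X and Z given Y is near zero, so a conditional independence graph omits that edge. Neither statistic recovers the direction of the arrows, which is what the causal DAG approach targets. The chain, noise levels, sample size, and threshold are arbitrary assumptions.

```python
import math
import random


def corr(a, b):
    """Sample Pearson correlation."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def partial_corr(a, b, given):
    """Correlation of a and b after linearly removing one conditioning variable."""
    r_ab, r_ag, r_bg = corr(a, b), corr(a, given), corr(b, given)
    return (r_ab - r_ag * r_bg) / math.sqrt((1 - r_ag**2) * (1 - r_bg**2))


def simulate_chain(n=5000, seed=1):
    """X -> Y -> Z with independent Gaussian noise at each step (hypothetical signals)."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    y = [xi + 0.5 * rng.gauss(0, 1) for xi in x]
    z = [yi + 0.5 * rng.gauss(0, 1) for yi in y]
    return x, y, z


x, y, z = simulate_chain()
threshold = 0.5  # hypothetical edge threshold
print("corr(X, Z) =", round(corr(x, z), 3), "-> edge?", abs(corr(x, z)) > threshold)
print("pcorr(X, Z | Y) =", round(partial_corr(x, z, y), 3))
```

With these settings the theoretical correlation of X and Z is 1/sqrt(1.5), about 0.82, while their theoretical partial correlation given Y is exactly zero.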


Abstract: Human semantic memory is the form of memory that allows us to understand and make inferences about objects and events, either as they are experienced or as described in language. The systems that support semantic abilities must achieve two functions that are, in some ways, contradictory. On the one hand, for semantic knowledge to generalize productively the system must acquire representations that are abstracted over different situations. Like the characters in Lost, we must be capable of recognizing a polar bear in the jungle, even if, in our actual experience, the polar bear is typically found in the Arctic. On the other hand, we must also be able to shape the particular inferences we make to suit the immediate demands of the current context. For instance, when moving a piano we must attend to its weight and shape and ignore its characteristic function, whereas when playing in a concert we can ignore its shape and weight and must attend to the function. Models of semantic memory, categorization, and inductive inference have mainly focused on understanding abstraction over context and have largely ignored how context constrains performance in semantic tasks.

Understanding how task context influences cognition is, however, a primary focus in the study of cognitive control. In this literature, a central idea is that active representations of task goals in the prefrontal cortex influence the flow of activation through more posterior networks--including, potentially, the semantic network. I will describe a simple model that links this approach to control to more general ideas about semantic memory, and will show how the model explains important differences between two interesting neuropsychological groups: patients with semantic dementia, and patients with semantic impairment following fronto-parietal stroke.

HAMLET mailing list

David Devilbiss (ddevilbiss@wisc.ed), Chuck Kalish (cwkalish@wisc.ed), and Jerry Zhu (jerryzhu@cs.wisc.ed) (Add 'u' to the addresses)

(Adapted from xkcd.com)
