Virtual Exertions: a user interface combining visual information, kinesthetics and biofeedback for virtual object manipulation

Kevin Ponto, Ryan Kimmel, Joe Kohlmann, Aaron Bartholomew and Robert G. Radwin

Living Environments Laboratory, University of Wisconsin–Madison


Paper Download

Researchers and students may download a copy of this publication.

ponto-ieee-3dui-2012-virtual-exertions.pdf


Abstract

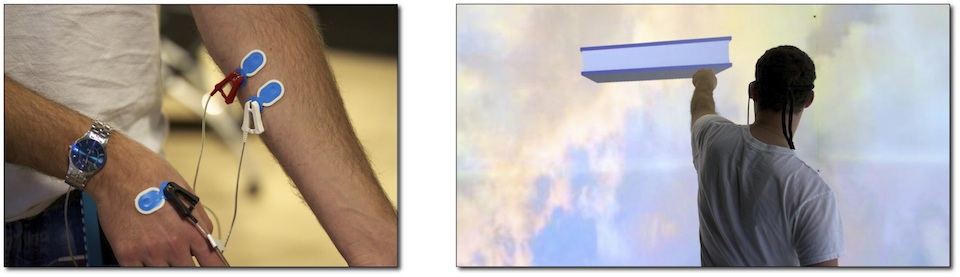

Virtual Reality environments can present users with rich visual representations of simulated environments. However, the means of interacting with these illusions are generally unnatural, in that they do not match the methods humans use to grasp and move objects in the physical world. We demonstrate a system that enables users to interact with virtual objects through natural body movements by combining visual information, kinesthetics and biofeedback from electromyograms (EMG). Our method allows virtual objects to be grasped, moved and dropped by classifying muscle exertions calibrated against physical-world masses. We show that users can consistently reproduce these calibrated exertions, allowing them to interface with objects in a novel way.
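To make the idea of exertion classification more concrete, here is a minimal sketch, not the authors' implementation. It assumes that each window of EMG samples is reduced to a root-mean-square (RMS) amplitude, that calibration stores one mean RMS level per real-world mass (with 0.0 kg standing for a relaxed hand), and that a window is classified by the nearest calibrated level. The names `ExertionClassifier`, `VirtualObject`, `update_grasp` and the `hand_touching` flag (standing in for the visual and kinesthetic contact check) are hypothetical.

```python
import math
from dataclasses import dataclass


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    if not window:
        raise ValueError("EMG window must contain at least one sample")
    return math.sqrt(sum(x * x for x in window) / len(window))


@dataclass
class ExertionClassifier:
    # Hypothetical calibration table: mass in kg -> mean RMS EMG level
    # recorded while the user held a physical object of that mass.
    # 0.0 kg represents the relaxed (no-load) state.
    calibration: dict

    def classify(self, window):
        """Return the calibrated mass whose RMS level is nearest this window's."""
        level = rms(window)
        return min(self.calibration, key=lambda m: abs(self.calibration[m] - level))


@dataclass
class VirtualObject:
    name: str
    mass: float  # assumed to equal one of the calibrated masses
    held: bool = False


def update_grasp(obj, classifier, window, hand_touching):
    """Hold obj only while the hand contacts it AND the exertion matches its mass.

    Simplifying assumption: any other classified exertion (too little or
    too much force) drops the object.
    """
    obj.held = hand_touching and classifier.classify(window) == obj.mass
    return obj.held
```

For example, with `ExertionClassifier({0.0: 0.02, 1.0: 0.10, 2.0: 0.20})`, a window with an RMS of about 0.10 is classified as a 1.0 kg exertion, so a 1.0 kg virtual object under the hand is grasped; relaxing the muscle drops it. Nearest-level matching is chosen here only for brevity; a real system would likely smooth the EMG envelope over time and use per-user calibration.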