CS 766 Project 1 Report

Jake Rosin

9-25-07

Project Description

Using a set of photos of the same scene, taken by the same camera at different exposure lengths, recover the camera's response curve. Use this curve and the low dynamic range source images to construct a high dynamic range (HDR) image. High dynamic range allows a single image to cover a broad range of luminance values without bright areas appearing saturated or dark areas being too murky to show details. Although the full dynamic range of an HDR image cannot be displayed at once, tone-mapped versions can be produced which retain the benefits in high and low brightness areas while using only the traditional 256 brightness levels for each color channel.

Algorithms Used

The response curve of the camera is recovered using the method described by Debevec and Malik [1]. This algorithm samples pixel locations chosen to give a good range of pixel values and uses them to build a system of linear equations. Solving this system by least squares recovers the function g, where

g(Zij) = ln Ei + ln Δj

Using this function, the pixel values Zij (for pixel i in image j) and the exposure times Δj, the actual pixel irradiances Ei can be estimated and a high dynamic range image produced.
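The estimate of ln Ei for a single pixel location can be sketched as follows. This is a minimal illustration rather than the project's code: it assumes g is stored as 256 samples per color channel, and it combines the per-image estimates g(Zij) - ln Δj with the hat-shaped weighting described in [1]. The names hatWeight and estimateLogIrradiance, and the fallback for fully clipped pixels, are my own choices for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hat weighting in the style of [1]: mid-range pixel values are trusted most,
// values near 0 or 255 least.
inline double hatWeight(int z) {
    return (z <= 127) ? z : 255 - z;
}

// Estimate ln(E_i) for one pixel location in one color channel.
//   g        : 256 samples of the recovered response curve (assumed layout)
//   z        : the pixel's value in each source image
//   exposure : the exposure time of each source image, in seconds
double estimateLogIrradiance(const std::vector<double>& g,
                             const std::vector<int>& z,
                             const std::vector<double>& exposure) {
    assert(g.size() == 256 && !z.empty() && z.size() == exposure.size());
    double weighted = 0.0;
    double total = 0.0;
    for (std::size_t j = 0; j < z.size(); ++j) {
        const double w = hatWeight(z[j]);
        weighted += w * (g[z[j]] - std::log(exposure[j]));
        total += w;
    }
    // If every sample is 0 or 255 no weight remains; falling back to the
    // first image is an arbitrary choice made for this sketch.
    if (total == 0.0) return g[z[0]] - std::log(exposure[0]);
    return weighted / total;
}
```

Taking exp() of the returned value gives the irradiance stored in the HDR image for that pixel and channel.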

Since the source images used were all taken using a stable tripod setup, no alignment was necessary to construct the results. In cases where source images are taken using a hand-held camera, or where the tripod is unstable, images should be aligned to ensure the real-world location represented by each pixel is constant across the set of images. Greg Ward's [2] median threshold bitmap (MTB) alignment algorithm was implemented for this purpose. To find the offset between a pair of images, a bitmap is constructed for each using its median grayscale pixel value as a threshold. Since the scene itself is not expected to change between photos, pixels below the median in one image are expected to remain below the median in the other. The exception is fluctuation due to noise; for that reason pixels with values near the median are omitted from consideration in the next step.
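The bitmap construction can be sketched as below. This is an illustration under assumptions, not the project's code: the image is taken to be a flat vector of 8-bit grayscale values, the bitmaps are stored one byte per pixel for readability, and the noise tolerance is a caller-supplied parameter. The names Bitmaps, medianValue and buildBitmaps are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <vector>

struct Bitmaps {
    std::vector<std::uint8_t> threshold;  // 1 where the pixel is brighter than the median
    std::vector<std::uint8_t> exclusion;  // 0 where the pixel is too close to the median to trust
};

// Median of the grayscale values. The vector is taken by value because
// nth_element reorders its input.
std::uint8_t medianValue(std::vector<std::uint8_t> gray) {
    assert(!gray.empty());
    auto mid = gray.begin() + gray.size() / 2;
    std::nth_element(gray.begin(), mid, gray.end());
    return *mid;
}

// Build the threshold bitmap and the exclusion bitmap for one image.
// Pixels within `tolerance` gray levels of the median are marked as excluded.
Bitmaps buildBitmaps(const std::vector<std::uint8_t>& gray, int tolerance) {
    const int median = medianValue(gray);
    Bitmaps out;
    out.threshold.reserve(gray.size());
    out.exclusion.reserve(gray.size());
    for (std::uint8_t v : gray) {
        out.threshold.push_back(v > median ? 1 : 0);
        out.exclusion.push_back(std::abs(int(v) - median) > tolerance ? 1 : 0);
    }
    return out;
}
```

A small tolerance of a few gray levels is usually enough to suppress noise around the threshold; the right value depends on the camera.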

Having constructed the bitmaps, an XOR between one bitmap and the other at some offset reveals the number of pixels with significantly different luminance values between the two images. (In practice, implementation is easier if the image is offset first and the bitmap is produced afterwards, since the algorithm runs faster when the bitmap is stored at eight pixels per byte rather than in a more complex form. This is what my implementation does, and I assume the same is true of Ward's.) Every candidate offset is tried, and the one that produces the minimum difference between the bitmaps is selected as the true offset. Repeating this process for all pairs of images allows a consistent alignment to be produced.
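A brute-force version of the offset search might look like the sketch below. It is deliberately simpler than the implementation described above: bitmaps are stored one byte per pixel instead of eight pixels per byte, and the second bitmap is shifted rather than the source image. The sign convention (pixel (x, y) of b lands at (x + dx, y + dy)) and the names Bitmap, countDifferences and bestOffset are assumptions made for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Bitmap {
    int width;
    int height;
    std::vector<std::uint8_t> bits;  // one 0/1 value per pixel, row-major
    std::uint8_t at(int x, int y) const { return bits[y * width + x]; }
};

// Count the pixels that differ between a and b when b is shifted by (dx, dy).
// Only the overlapping region is compared, and a pixel is skipped when either
// exclusion bitmap marks it as unreliable (value 0).
long countDifferences(const Bitmap& a, const Bitmap& exclA,
                      const Bitmap& b, const Bitmap& exclB, int dx, int dy) {
    long diff = 0;
    for (int y = 0; y < a.height; ++y) {
        for (int x = 0; x < a.width; ++x) {
            const int bx = x - dx;  // pixel of b that lands on (x, y)
            const int by = y - dy;
            if (bx < 0 || by < 0 || bx >= b.width || by >= b.height) continue;
            if (!exclA.at(x, y) || !exclB.at(bx, by)) continue;
            diff += a.at(x, y) ^ b.at(bx, by);
        }
    }
    return diff;
}

struct Offset {
    int dx;
    int dy;
    long error;
};

// Try every shift in [-maxShift, maxShift]^2 and keep the one with the fewest
// differing pixels. Ties keep the earliest candidate, starting from (0, 0).
Offset bestOffset(const Bitmap& a, const Bitmap& exclA,
                  const Bitmap& b, const Bitmap& exclB, int maxShift) {
    assert(maxShift >= 0);
    Offset best{0, 0, countDifferences(a, exclA, b, exclB, 0, 0)};
    for (int dy = -maxShift; dy <= maxShift; ++dy) {
        for (int dx = -maxShift; dx <= maxShift; ++dx) {
            const long err = countDifferences(a, exclA, b, exclB, dx, dy);
            if (err < best.error) best = Offset{dx, dy, err};
        }
    }
    return best;
}
```

Because only the overlap is compared, this raw count favors large shifts slightly, so maxShift should stay small relative to the image size.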

Trying all possible offsets is obviously a costly operation; image pyramids are used to alleviate this problem. Some maximum depth d is chosen and images resized to 1/2^d scale. Nine possible offsets are used (those in (x ± {0,1}, y ± {0,1})) and the best is selected. Moving one step up the pyramid, the image is offset by double the selected amount and the process is repeated. The maximum horizontal or vertical pixel offset is thus ±(2^d -1).
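The doubling of the offset between pyramid levels can be sketched as follows. To keep the example short, the per-level search is passed in as a callback; in a real implementation it would build the bitmaps at that scale and compare the nine candidate shifts. The sketch assumes d levels are searched in total, which matches the ±(2^d - 1) bound above. The names Shift, coarseToFine and maxReachableShift are hypothetical.

```cpp
#include <cassert>
#include <functional>

struct Shift {
    int dx;
    int dy;
};

// pickBest(level, start) is assumed to return the best of the nine candidates
// start + {-1, 0, 1}^2 at pyramid level `level`, where level 0 is full
// resolution and each higher level halves the image size.
Shift coarseToFine(int levels,
                   const std::function<Shift(int, Shift)>& pickBest) {
    assert(levels >= 1);
    Shift s{0, 0};
    for (int level = levels - 1; level >= 0; --level) {
        s = pickBest(level, s);  // refine by at most one pixel at this scale
        if (level > 0) {         // carry the result to the next finer level
            s.dx *= 2;
            s.dy *= 2;
        }
    }
    return s;
}

// Largest shift reachable along one axis: 1 + 2 + 4 + ... = 2^levels - 1.
int maxReachableShift(int levels) {
    assert(levels >= 1 && levels < 31);
    return (1 << levels) - 1;
}
```

With five levels, a search that moves by one pixel in the same direction at every level ends at an offset of 31 pixels, so only 5 x 9 = 45 comparisons are needed instead of the 63 x 63 an exhaustive search over ±31 would require.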

Finally, the HDR images are converted to low dynamic range format using Reinhard's tone mapping algorithm. This algorithm was not implemented in the project; instead the plug-in for HDRShop was used.
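For reference, the simplest global form of Reinhard's operator can be sketched in a few lines. This is not the HDRShop plug-in's code, whose internals I have not examined, and it omits the local dodging-and-burning stage of the full algorithm. It assumes the input is a flat list of world luminances and uses the commonly quoted key value of 0.18; the function name reinhardGlobal is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Map world luminances to display luminances in [0, 1).
//   luminance : non-negative world luminance of each pixel
//   key       : target "middle gray" of the output (0.18 is a common default)
std::vector<double> reinhardGlobal(const std::vector<double>& luminance,
                                   double key = 0.18) {
    assert(!luminance.empty());
    const double delta = 1e-6;  // avoids log(0) for black pixels
    double logSum = 0.0;
    for (double lw : luminance) logSum += std::log(delta + lw);
    const double logAverage = std::exp(logSum / luminance.size());

    std::vector<double> display;
    display.reserve(luminance.size());
    for (double lw : luminance) {
        const double scaled = key * lw / logAverage;  // scale to the chosen key
        display.push_back(scaled / (1.0 + scaled));   // compress highlights
    }
    return display;
}
```

The result would still need to be applied to the color channels and quantized to 8 bits before being written as a low dynamic range image.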

Implementation Details

The Gil and LAPACK libraries were used in this implementation: Gil to allow easy image manipulation and LAPACK to solve the overconstrained system of equations necessary to derive g. The Gil library was downloaded from https://www.cmlab.csie.ntu.edu.tw/trac/libgil2/wiki/Download and installed using the instructions provided on the CS 766 course page. Code for this project was written on an Ubuntu Linux 7.04 system, and the LAPACK library was acquired using apt-get. Most code was written in C++; the call to LAPACK's dgelss function occurs within a C file.

The executable produced requires some user input in addition to the source images. A file containing image filenames and their corresponding exposure times must be constructed before running hdr, as well as a file containing pixel locations used to derive the camera curve (if the number of pixels specified in this file is insufficient more will be selected at random). Details on these files can be found in the README.

Image alignment is performed as described above, with the user being prompted to specify the maximum relative offset between consecutive image pairs. If alignment is performed the results are written as new images and the program terminates without constructing an HDR image; this is to allow new pixel locations to be selected for the translated and cropped images.

Assuming the images are aligned, the camera curve is derived. In addition to the pixels chosen by the user, pixels are selected at random from the image until N(P - 1) > 255p, where N is the number of pixel locations, P is the number of source images and p is an oversampling factor. In the current version p is hard-coded to 10; experiments with lower values of p produced similar results with only marginal runtime improvements. The rationale for choosing pixels beyond the minimum number (i.e., using any value for p apart from 1) is to increase the probability of a good range of pixel values when all pixels are chosen at random. This is less of an issue if the pixels are user-specified.
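As a worked example of the sampling condition, the helper below computes the smallest N satisfying N(P - 1) > 255p, taking N as the number of pixel locations and P as the number of source images. The function name minimumPixelCount is made up for this illustration and does not appear in the project source.

```cpp
#include <cassert>

// Smallest N such that N * (imageCount - 1) > 255 * oversampling.
// Integer division floors the quotient, and adding one makes the
// inequality strict.
int minimumPixelCount(int imageCount, int oversampling) {
    assert(imageCount >= 2 && oversampling >= 1);
    return (255 * oversampling) / (imageCount - 1) + 1;
}
```

With 11 source images and p = 10 this gives 256 pixel locations; with 5 images and p = 1 it gives 64.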

After constructing a high dynamic range image the program outputs the result as a Radiance RGBE image, along with a text file showing the values for g in the domain [0, 255] for each color channel.
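For readers unfamiliar with the Radiance format, each RGBE pixel stores three 8-bit mantissas and one shared 8-bit exponent. The sketch below shows the standard conversion from a floating-point RGB triple. How the program itself writes the file is not described in this report, so this should be read as an illustration of the encoding only; the function name toRgbe is hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Encode one non-negative floating-point RGB pixel as shared-exponent RGBE.
std::array<std::uint8_t, 4> toRgbe(float r, float g, float b) {
    assert(r >= 0.0f && g >= 0.0f && b >= 0.0f);
    const float largest = std::max(r, std::max(g, b));
    if (largest < 1e-32f) {
        return {{0, 0, 0, 0}};  // too dark to represent: store black
    }
    int exponent = 0;
    // frexp returns a mantissa in [0.5, 1), so the largest channel maps to
    // a value in [128, 256) and the others scale by the same power of two.
    const float scale = std::frexp(largest, &exponent) * 256.0f / largest;
    return {{static_cast<std::uint8_t>(r * scale),
             static_cast<std::uint8_t>(g * scale),
             static_cast<std::uint8_t>(b * scale),
             static_cast<std::uint8_t>(exponent + 128)}};
}
```

For instance, the pixel (1.0, 0.5, 0.25) encodes to the four bytes 128, 64, 32, 129.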

Instructions on running the program can be found in the README; help compiling the source is available in README_COMPILATION.

HDR Results

In a word, disappointing. The images produced showed more detail in high and low luminance areas than the source images, as desired, but color balance was off-kilter in most. It's possible this is a result of the camera responding achromatically to a color of light other than R = G = B, as discussed in section 2.6 of [1]. The color drift in some images is barely noticeable (e.g. ramp_up.jpg), but for others the distortion is obvious even without examining the source images (e.g. doit.jpg, exterior.jpg). My images also appeared brighter overall, having a general appearance of being slightly oversaturated. Occasionally this reduced image detail in high-luminance areas. Compare below the tone mapped version of exterior.jpg produced by my implementation, and one produced using HDRShop at all steps.

On the left, the version produced by my implementation. On the right is one produced by HDRShop.

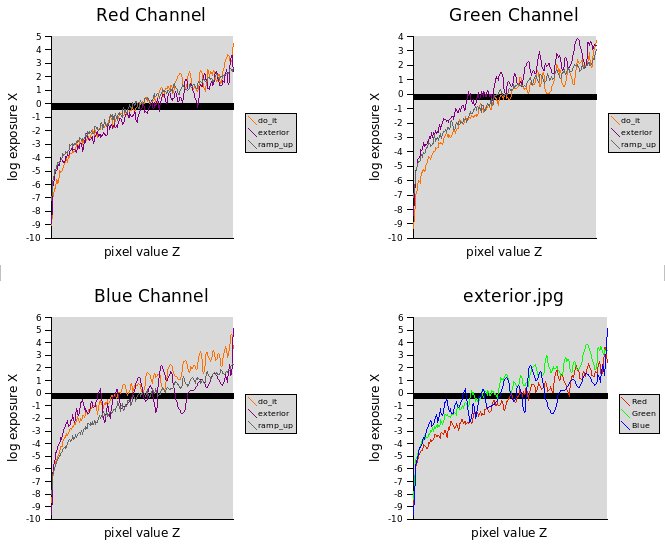

Another issue, which possibly explains the color difference between the high dynamic range versions of different scenes, was that the recovered g function was not consistent across sets of source images. The cause of this is unknown. The recovered g functions were also non-monotonic; this was expected due to image noise, data lost in converting source images to .jpg format, etc., but the degree of deviation from a monotonic result was greater than expected. These results are examined in the charts below.

For each of the three color channels, the derived g function is shown for the three images mentioned above. Notice that for all three channels 'ramp_up,' the image which showed the most visually consistent results, also possesses the smoothest (i.e., closest to monotonic) g function. In the bottom-right the three channels for the worst looking image of the three are shown.

Alignment Results

Just shy of exemplary. Image alignment seemed spot-on for most exposure levels, only misaligning images when the median pixel value was exceptionally low (and I assume when extremely high, but images with this condition were not tested). Since this result is predicted by Ward's paper I don't see it as a problem. Test images can be found in the directory alignment_test, and results can be replicated by using a maximum offset of 31 pixels (2^d -1 for d = 5).

The misalignment problem at low average brightness occurs due to the difficulty in selecting a threshold value for bitmap conversion, especially when the best possible value is beyond the dynamic range of an image. When light sources such as light bulbs are visible within the image, they tend to contain an extremely bright core (such as the bulb's filament) surrounded by a more diffuse glow. At longer exposure times this entire area fits easily above the median, but when exposure time decreases the core maintains its brightness while the glow around it grows darker. Eventually the edges of the core slip below the threshold value (even when this value is set below the median, as mentioned on page 4 of [2] and allowed by my implementation) as luminance levels in the area of the glow become indistinguishable from the surroundings. This dramatically alters the shape of the bitmap, and alignment problems result.

Implemented Extensions

Ward's image alignment algorithm, discussed in detail above, was implemented. Attempts to improve the results of HDR image creation, though ultimately unsuccessful, were considered a higher priority than additional extensions.

References