Photometric Depth Reconstruction

Mikola Lysenko

The objective of this project was to reconstruct a depth field from a series of photographs taken under controlled lighting conditions. The basic idea is to compute an approximation of the surface normals, which we then use to find a good approximation of the surface. We do this in four steps. First, we calibrate the lights by computing their directions. Next, we find the normal map using least squares. Then, we solve for the color albedo using the normal data. Finally, we fit a surface using least squares again.

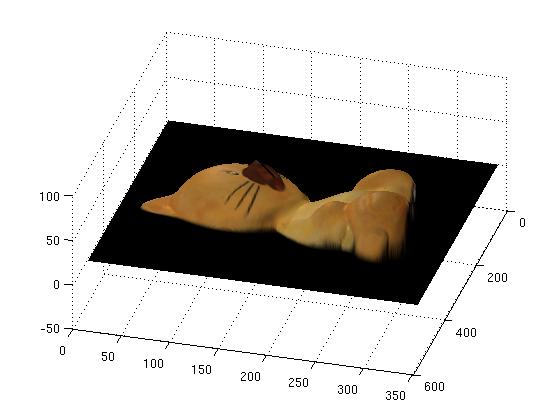

To perform calibration, we first gather a series of photographs of a chrome sphere taken under the same lighting conditions. From these, we determine the center of the sphere, $C = (C_x, C_y)$, its radius, $r$, and the location of the specular highlight in each image, $H_i = (H_{i,x}, H_{i,y})$. To compute the light direction in each image, we use the fact that the normal of the sphere at the specular highlight is:

$$N_i = \frac{1}{r}\left(H_{i,x} - C_x,\; H_{i,y} - C_y,\; \sqrt{r^2 - (H_{i,x} - C_x)^2 - (H_{i,y} - C_y)^2}\right)$$

Assuming the camera views the object head on, the viewing direction is $R = (0, 0, 1)$. From this, we can solve for the lighting direction, $L_i$, using the standard reflection formula:

$$L_i = 2\,(N_i \cdot R)\,N_i - R$$
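
For concreteness, here is a sketch of the calibration step in Python with NumPy. The original software was MATLAB, and this is not the project's code. The function name `light_direction` is hypothetical. The sketch assumes an orthographic camera with viewing direction $R = (0, 0, 1)$. It also assumes the highlight and center coordinates are given in a frame whose $y$ axis points the same way as the normal's $y$ component; flip the sign of $y$ if image rows increase downward.

```python
import numpy as np


def light_direction(center, radius, highlight):
    """Return the unit light direction L for one chrome-sphere image.

    Hypothetical helper. Assumes an orthographic camera whose viewing
    direction is R = (0, 0, 1) and that `center` and `highlight` are (x, y)
    pairs in the same frame as the normal's x and y components.
    """
    dx = highlight[0] - center[0]
    dy = highlight[1] - center[1]
    # Height of the sphere surface above the image plane at the highlight
    # (clamped at 0 in case the highlight was marked slightly outside the rim).
    dz = np.sqrt(max(radius**2 - dx**2 - dy**2, 0.0))
    n = np.array([dx, dy, dz], dtype=float) / radius  # sphere normal N at the highlight
    r = np.array([0.0, 0.0, 1.0])                      # viewing direction R
    l = 2.0 * np.dot(n, r) * n - r                     # reflect R about N
    return l / np.linalg.norm(l)
```

A highlight at the exact center of the sphere yields $(0, 0, 1)$, a light placed along the viewing axis. A highlight at $r/\sqrt{2}$ to the right of the center yields $(1, 0, 0)$, a light coming from the side.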

The basic procedure for normal reconstruction follows the project description. For each pixel, we find a good normal by minimizing the following function:

$$Q(g) = \sum_i \Big[\, w(I_i)\,\big(I_i - L_i \cdot g\big) \Big]^2$$

where $I_i$ is the intensity of the pixel in the $i$-th image, $L_i$ is the light direction, $g$ is the combined normal/albedo and $w$ is a weighting factor. For the weighting function, we chose to use the same function from the HDR project, as suggested, giving:

$$w(z) = \begin{cases} z - z_{\min} & z \le \tfrac{1}{2}(z_{\min} + z_{\max}) \\ z_{\max} - z & z > \tfrac{1}{2}(z_{\min} + z_{\max}) \end{cases}$$

This weighting favors intensity values within the camera's dynamic range over specular and shadowed regions. The system of equations above is repeated for each color channel, giving a total of $3n$ constraints (for $n$ images) and $3$ unknowns. To reconstruct the normal, we solve the least squares problem using the conjugate gradient method, since it takes fewer lines of MATLAB code. This gives us a solution for $g$. To recover the normal direction, $N$, we simply normalize $g$ to unit length:

$$N = \frac{g}{\lVert g \rVert}$$
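
Here is an illustrative NumPy sketch of the per-pixel weighted least-squares problem. It is not the project's MATLAB code, and the names `hat_weight` and `solve_pixel_normal` are hypothetical. It assumes intensities are scaled to $[0, 1]$ (so $z_{\min} = 0$ and $z_{\max} = 1$). Each residual $I_i - L_i \cdot g$ is multiplied by the HDR-style hat weight $w(I_i)$ before squaring. The small $3 \times 3$ problem is solved with `numpy.linalg.lstsq` rather than the conjugate gradient method used in the project. Both give the same least-squares minimizer when the weighted system has full rank.

```python
import numpy as np


def hat_weight(z, z_min=0.0, z_max=1.0):
    """Triangle ("hat") weight from HDR assembly: peaks mid-range, zero at the extremes."""
    z = np.asarray(z, dtype=float)
    mid = 0.5 * (z_min + z_max)
    return np.where(z <= mid, z - z_min, z_max - z)


def solve_pixel_normal(intensities, lights):
    """Estimate g (normal scaled by albedo) and the unit normal N for one pixel.

    intensities: (n, 3) array, this pixel's R, G, B values in each of n images.
    lights:      (n, 3) array of unit light directions L_i.
    """
    intensities = np.asarray(intensities, dtype=float)
    lights = np.asarray(lights, dtype=float)
    # One constraint L_i . g = I_i per image and per colour channel:
    # 3n rows, 3 unknowns. Rows are ordered L_1, L_1, L_1, L_2, ...
    A = np.repeat(lights, 3, axis=0)
    I = intensities.reshape(-1)  # I_1R, I_1G, I_1B, I_2R, ...
    w = hat_weight(I)
    # Minimise sum_k (w_k * (I_k - A_k . g))^2 by scaling each row by its weight.
    g, *_ = np.linalg.lstsq(A * w[:, None], I * w, rcond=None)
    return g, g / np.linalg.norm(g)
```

If every intensity at a pixel is fully black or fully saturated, all weights are zero. In that case $g$ comes back as zero and the division by $\lVert g \rVert$ produces NaN, so such pixels would need to be masked out.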

To find the albedo, we apply the formula described in the notes. For every pixel and color channel, we have:

$$k_d = \frac{\sum_i I_i\,(L_i \cdot N)}{\sum_i (L_i \cdot N)^2}$$

which can be solved directly using a loop over the image.
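
The albedo $k_d = \sum_i I_i (L_i \cdot N) / \sum_i (L_i \cdot N)^2$ is the closed-form minimizer of $\sum_i (I_i - k_d\,L_i \cdot N)^2$. It follows from setting the derivative with respect to $k_d$ to zero. Because $N$ is already known at this stage, the body of the loop reduces to a couple of dot products. The minimal NumPy sketch below handles one pixel and all three channels at once. The function name `pixel_albedo` is hypothetical; the original implementation was a MATLAB loop.

```python
import numpy as np


def pixel_albedo(intensities, lights, normal):
    """Albedo k_d per colour channel for one pixel, given its unit normal N.

    k_d = sum_i I_i (L_i . N) / sum_i (L_i . N)^2, evaluated for R, G and B at once.
    intensities: (n, 3) array; lights: (n, 3) array; normal: length-3 array.
    """
    shading = np.asarray(lights, dtype=float) @ np.asarray(normal, dtype=float)  # L_i . N
    return shading @ np.asarray(intensities, dtype=float) / (shading @ shading)
```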

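The final phase of the algorithm is surface reconstruction. To find the surface, we use the two orthogonality constraints mentioned in the notes. The vectors $(1, 0, z_{x+1,y} - z_{x,y})$ and $(0, 1, z_{x,y+1} - z_{x,y})$ lie in the tangent plane of the surface, so each must be orthogonal to the normal $N = (n_x, n_y, n_z)$:

$$n_x + n_z\,(z_{x+1,y} - z_{x,y}) = 0$$

$$n_y + n_z\,(z_{x,y+1} - z_{x,y}) = 0$$

This is combined with the additional constraint that

$$z_{x,y} = 0$$

for all points not in the image (i.e., outside the object mask). To solve this large, sparse system, we use the preconditioned conjugate gradient method. On average, about 2000 iterations were necessary for convergence.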
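
The surface step can be sketched on a small grid as follows. This is an illustrative NumPy version under stated assumptions, not the project's code. The names `integrate_normals` and `conjugate_gradient` are hypothetical, and pixel $(x, y)$ is stored at array index `[y, x]`. A dense matrix is used for readability; a full-size image would need a sparse one. The sketch applies plain, unpreconditioned conjugate gradient to the normal equations $A^\top A z = A^\top b$, whereas the project used a preconditioned variant.

```python
import numpy as np


def conjugate_gradient(apply_m, b, tol=1e-10, max_iter=10000):
    """Solve M x = b for a symmetric positive-definite operator M (given as a function)."""
    x = np.zeros_like(b)
    r = b - apply_m(x)
    p = r.copy()
    rs_old = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs_old) < tol:
            break
        mp = apply_m(p)
        alpha = rs_old / (p @ mp)
        x += alpha * p
        r -= alpha * mp
        rs_new = r @ r
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x


def integrate_normals(normals, mask):
    """Recover a depth map z (H x W) from unit normals (H x W x 3) and a boolean mask.

    Each masked pixel (x, y) = (column, row) contributes the two orthogonality rows
        n_z * (z[y, x+1] - z[y, x]) = -n_x
        n_z * (z[y+1, x] - z[y, x]) = -n_y
    and every pixel outside the mask contributes the row z[y, x] = 0.
    """
    h, w = mask.shape
    index = lambda y, x: y * w + x  # flatten (row, column) to an unknown index
    rows, rhs = [], []

    def add_row(entries, value):
        row = np.zeros(h * w)
        for k, coeff in entries:
            row[k] += coeff
        rows.append(row)
        rhs.append(value)

    for y in range(h):
        for x in range(w):
            if not mask[y, x]:
                add_row([(index(y, x), 1.0)], 0.0)
                continue
            n_x, n_y, n_z = normals[y, x]
            if x + 1 < w:
                add_row([(index(y, x + 1), n_z), (index(y, x), -n_z)], -n_x)
            if y + 1 < h:
                add_row([(index(y + 1, x), n_z), (index(y, x), -n_z)], -n_y)

    a = np.array(rows)
    b = np.array(rhs)
    z = conjugate_gradient(lambda v: a.T @ (a @ v), a.T @ b)
    return z.reshape(h, w)
```

The zero-depth rows outside the mask pin the otherwise free constant offset of $z$. Without them, $A^\top A$ would be singular.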

The software for this project was written in MATLAB. All necessary files are included in this archive. To install the program, extract the files into a folder, then place the psmImages directory within the same folder. Because the images provided are in .tga format, you must be using some form of Unix and have the tgatoppm and ppmtopcx utilities in your command line path. Once this is done, you can reconstruct a scene using the following MATLAB command:

```matlab
reconstructObject('psmImages/objectName')
```

Here, objectName is the directory containing the images of the object you want to reconstruct (e.g., owl, horse, buddha). The program assumes the same naming scheme as the sample files: each image must be named like `object.1.tga`, and the directory must also contain a mask of the object named `object.mask.tga`.
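
If the inputs need to be gathered or checked outside of MATLAB, the naming scheme above can be matched with a few lines of Python. This is a hypothetical helper; `find_inputs` is not part of the project. It assumes the file prefix matches the directory name, as in a layout like `psmImages/owl/owl.1.tga`.

```python
import re
from pathlib import Path


def find_inputs(directory):
    """Hypothetical helper: return (numbered image paths, mask path) for one object.

    Assumes files named <name>.<number>.tga plus <name>.mask.tga, where <name>
    is the directory's own name (an assumption, e.g. owl/owl.1.tga).
    """
    directory = Path(directory)
    name = directory.name
    pattern = re.compile(rf"^{re.escape(name)}\.(\d+)\.tga$")
    numbered = []
    for path in directory.iterdir():
        match = pattern.match(path.name)
        if match:
            numbered.append((int(match.group(1)), path))
    numbered.sort()  # numeric order, so name.10.tga comes after name.9.tga
    mask = directory / f"{name}.mask.tga"
    if not mask.exists():
        raise FileNotFoundError(mask)
    return [path for _, path in numbered], mask
```

Sorting on the parsed integer keeps `object.10.tga` after `object.9.tga`, which a plain string sort would not.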

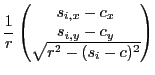

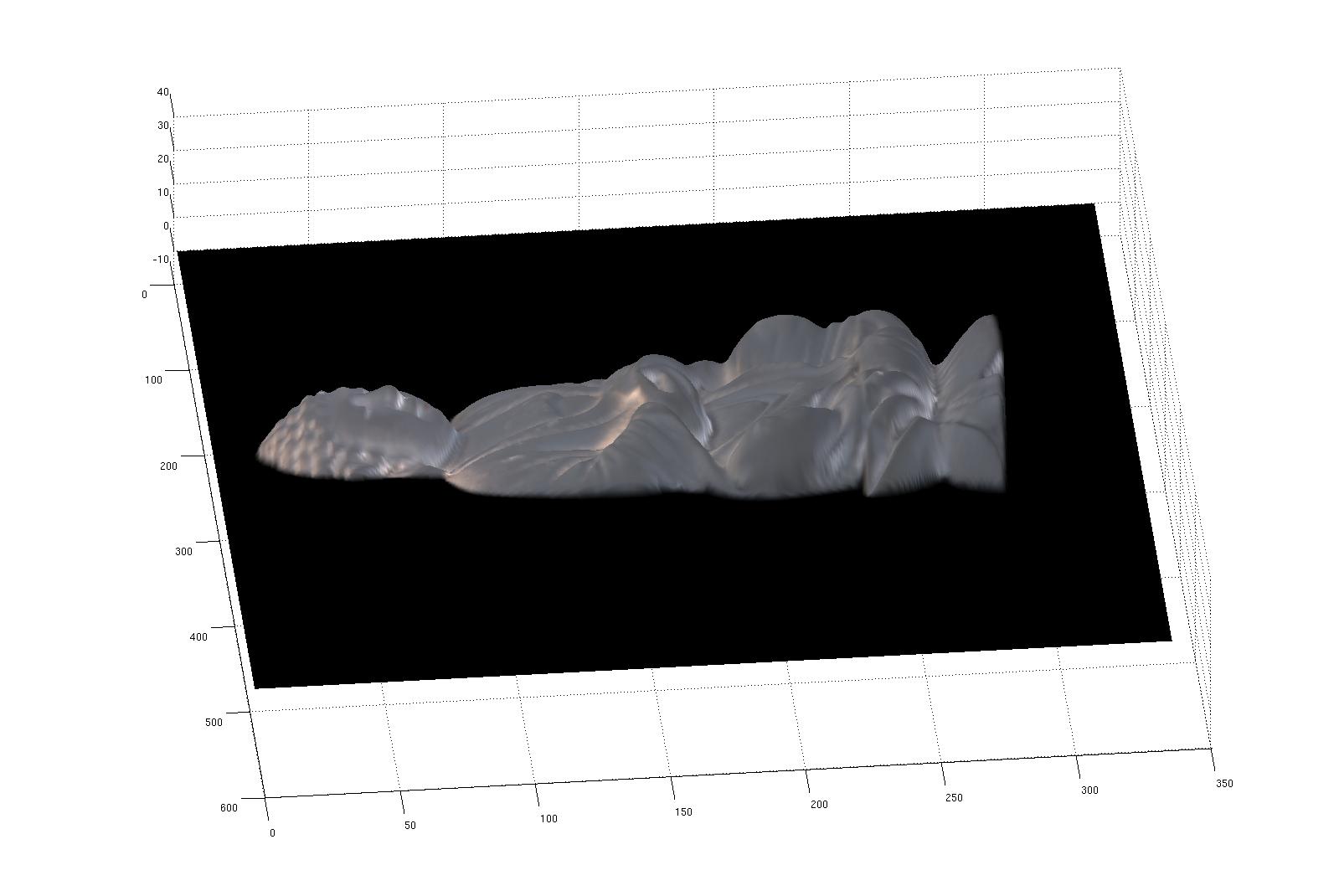

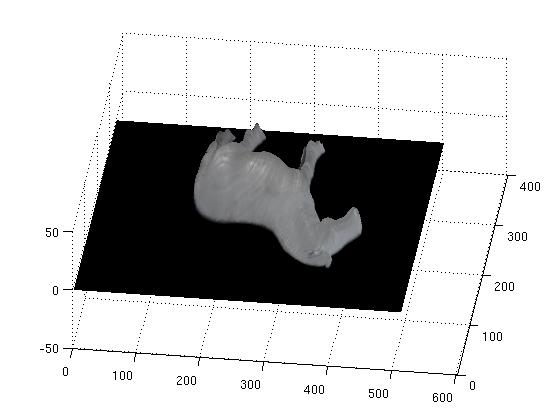

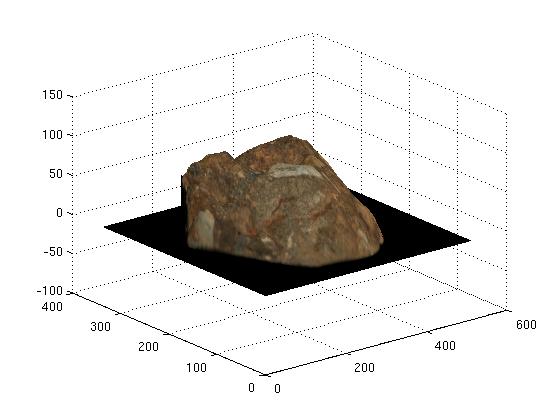

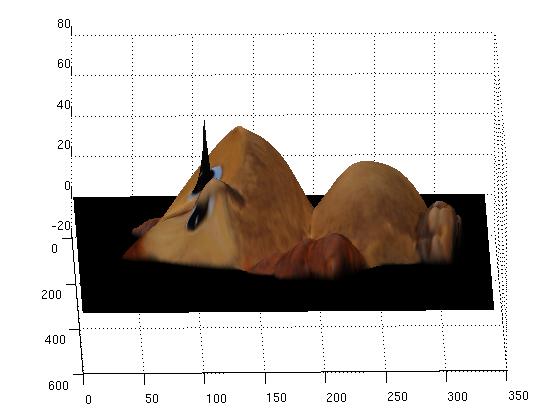

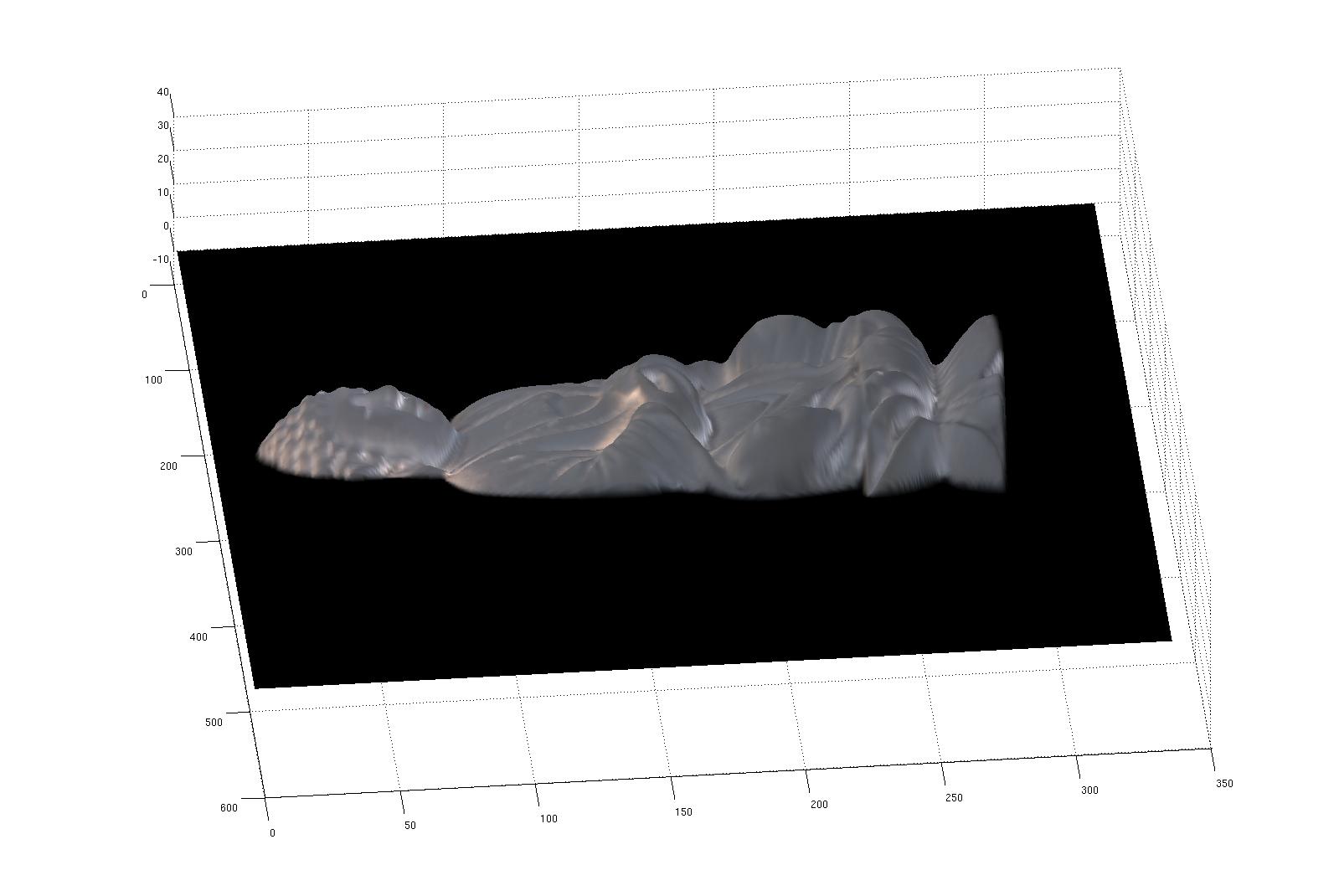

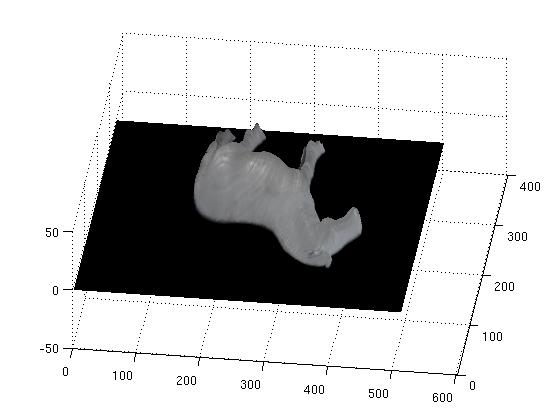

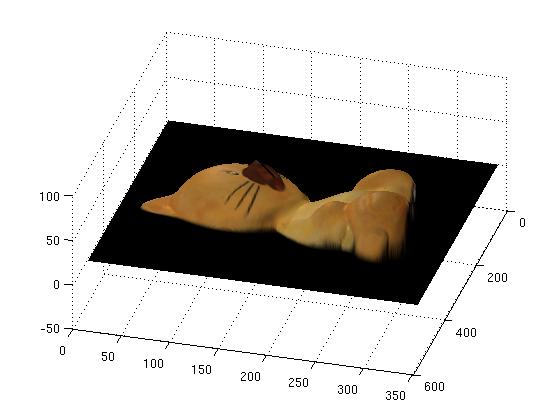

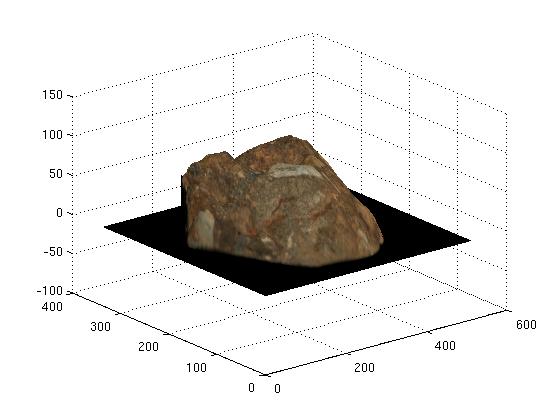

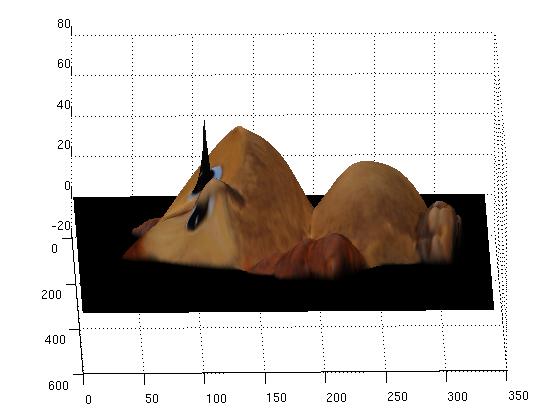

Overall, my implementation of this algorithm was quite successful, owing mostly to the nice test data kindly provided by the grader. In the following figure, we can see several reconstructed views of the buddha, horse, cat and rock:

However, for the owl data set we ran into some problems, as can be seen in the following reconstructed view. The spike in this picture is an unfortunate consequence of the high specularity of the owl's eye, which also shows up as a discontinuity in the corresponding normal map.

Because my previous project took so much time, I decided not to implement any enhancements for this particular exercise (other than the improved weighting). However, the final product works and was completed on time, so there really isn't much to complain about.

The basic idea of computing a surface from its normals works pretty well. However, the method for determining the normals we used in this project is probably not the best. As mentioned in class, we could easily eliminate the requirement that we have a chrome sphere in the scene by estimating the lighting and normal direction simultaneously.

A major issue with this algorithm is that it doesn't work very well for specular objects. To get around this, it might be possible to exploit the fact that specular reflection preserves polarization, whereas diffuse reflection largely depolarizes light. Using this trick, we could estimate the degree to which the polarized light was rotated, which would constrain the surface normal while allowing the specular components to be cancelled out.