Non-Photorealistic Real-time Volume Rendering

Brandon Ellenberger and K. Evan Nowak

Project Description

Our goal was to alter previously implemented real-time volume rendering methods to generate non-photorealistic (NPR) styled output. Real-time volume rendering methods generate representations of volumes by storing pre-computed volume data in 3D textures in graphics hardware. These 3D textures are then accessed and blended using a series of view-facing polygons that slice through the textures. One can achieve different effects by changing how the polygons are rendered and blended. Modern hardware shading languages provide a large degree of flexibility in how texture data can be altered and represented.
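To illustrate the blending step, here is a minimal CPU sketch in Python (not taken from our shaders) of how the samples that the slices contribute to one pixel can be combined back to front with the standard "over" operator. It assumes the common OpenGL blend setting `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)` and a black background; `composite_back_to_front` is a hypothetical name.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]


def composite_back_to_front(samples: List[RGBA]) -> RGB:
    """Blend slice samples for one pixel, ordered from farthest to nearest.

    Each step applies dst = src_alpha * src + (1 - src_alpha) * dst,
    i.e. the GL_SRC_ALPHA / GL_ONE_MINUS_SRC_ALPHA blend function.
    """
    r, g, b = 0.0, 0.0, 0.0  # assumed black background
    for sr, sg, sb, sa in samples:
        r = sa * sr + (1.0 - sa) * r
        g = sa * sg + (1.0 - sa) * g
        b = sa * sb + (1.0 - sa) * b
    return (r, g, b)


# A half-transparent red slice behind a half-transparent blue slice.
print(composite_back_to_front([(1.0, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 0.5)]))  # prints (0.25, 0.0, 0.5)
```

Every NPR effect described below changes either the color or the alpha of a sample before it enters this blend.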

We used the OpenGL Shading Language (GLSL) to implement a number of NPR methods described in a paper by David Ebert and Penny Rheingans, "Volume Illustration: Non-Photorealistic Rendering of Volume Models" (IEEE Visualization 2000). The NPR methods we implemented are boundary enhancement, silhouette enhancement, edge coloring, tone shading, toon shading and distance coloring. We also experimented with second-order derivative filters, specifically the Laplacian filter, for finding significant volume features. Following is a short description of each method, with results:

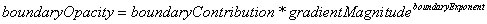

Boundary Enhancement

For this method the opacity of each voxel-sample is enhanced based on the gradient magnitude of that voxel. The gradient magnitude is precomputed and stored as a float texture in hardware. This effect can serve to emphasize regions of contrast in a volume. Below is the equation we use to determine the opacity contribution based on the gradient magnitude.

Here the gradient magnitude is normalized across the volume so that its values range from 0 to 1. The boundary exponent changes the sensitivity to the gradient magnitude.

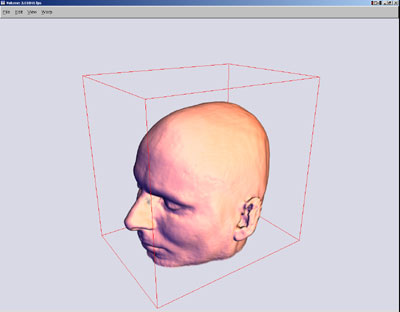

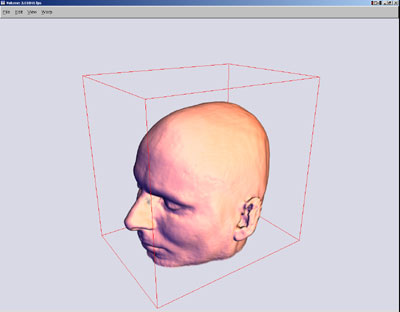

The only opacity contribution in this image is that of the boundary enhancement equation. Notice that certain features in the volume are more opaque than others, particularly the boundaries between the air, skin and skull, and between the skull and the brain tissue. The darkness in the image is the result of diffuse shading.
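As a minimal sketch, assuming the boundary-enhancement form given by Ebert and Rheingans, o_g = o_v (k_gc + k_gs |∇f|^k_ge), the Python below precomputes gradient magnitudes normalized to [0, 1] over the volume and then scales a sample's opacity. The central-difference scheme, the clamped border handling and the function names are illustrative assumptions; `k_gc`, `k_gs` and `k_ge` follow the paper's notation, with `k_ge` playing the role of the boundary exponent.

```python
import math
from typing import List

Volume = List[List[List[float]]]  # indexed vol[z][y][x]


def gradient_magnitudes(vol: Volume) -> Volume:
    """Central-difference gradient magnitude, normalized to [0, 1] over the volume."""
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])

    def at(z: int, y: int, x: int) -> float:
        # Clamp indices at the border (a simple edge treatment).
        return vol[min(max(z, 0), nz - 1)][min(max(y, 0), ny - 1)][min(max(x, 0), nx - 1)]

    mags = [[[0.0] * nx for _ in range(ny)] for _ in range(nz)]
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                gx = (at(z, y, x + 1) - at(z, y, x - 1)) / 2.0
                gy = (at(z, y + 1, x) - at(z, y - 1, x)) / 2.0
                gz = (at(z + 1, y, x) - at(z - 1, y, x)) / 2.0
                mags[z][y][x] = math.sqrt(gx * gx + gy * gy + gz * gz)
    peak = max(m for plane in mags for row in plane for m in row)
    if peak > 0.0:  # a constant volume has no gradient to normalize
        mags = [[[m / peak for m in row] for row in plane] for plane in mags]
    return mags


def boundary_opacity(alpha: float, grad_mag: float,
                     k_gc: float = 0.0, k_gs: float = 1.0, k_ge: float = 1.0) -> float:
    """Scale opacity by the normalized gradient magnitude: alpha * (k_gc + k_gs * |grad|^k_ge)."""
    return alpha * (k_gc + k_gs * grad_mag ** k_ge)


print(gradient_magnitudes([[[0.0, 1.0, 2.0]]]))  # prints [[[0.5, 1.0, 0.5]]]
```

With `k_gc = 0`, as in the image above, voxels with no gradient become fully transparent; raising `k_ge` above 1 suppresses weak boundaries more strongly.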

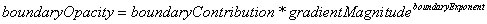

Silhouette Enhancement

To enhance the volume's silhouettes, the opacity of each volume sample is enhanced based on that voxel's gradient direction relative to the viewer. The gradient is also stored in a 3D texture. The amount of enhancement grows as the voxel's gradient becomes more nearly perpendicular to the view direction, as demonstrated by this equation:

In this image only the features whose gradients are perpendicular to the view direction are opaque, for example the outline of the skull and the creases in the brain.
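A minimal sketch of the silhouette term, assuming the Ebert and Rheingans form o_s = o_v (k_sc + k_ss (1 - |n · v|)^k_se), where n is the normalized gradient and v the normalized view direction. The parameter defaults, the zero-gradient rule and the function names are assumptions for illustration.

```python
import math
from typing import List, Optional, Sequence


def _unit(v: Sequence[float]) -> Optional[List[float]]:
    """Return v scaled to unit length, or None for a zero vector."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return None
    return [c / length for c in v]


def silhouette_opacity(alpha: float, gradient: Sequence[float], view_dir: Sequence[float],
                       k_sc: float = 0.0, k_ss: float = 1.0, k_se: float = 1.0) -> float:
    """Scale opacity by how close the gradient is to perpendicular to the view direction."""
    n, v = _unit(gradient), _unit(view_dir)
    if n is None or v is None:
        # Homogeneous region (no gradient): apply only the constant term.
        return alpha * k_sc
    facing = abs(sum(a * b for a, b in zip(n, v)))  # 1 when the gradient points along the view ray
    return alpha * (k_sc + k_ss * (1.0 - facing) ** k_se)


print(silhouette_opacity(0.5, (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))   # edge-on: prints 0.5
print(silhouette_opacity(0.5, (0.0, 0.0, 2.0), (0.0, 0.0, -1.0)))  # face-on: prints 0.0
```

Taking the absolute value of the dot product treats gradients pointing toward and away from the viewer alike, so both sides of a thin structure get outlined.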

Edge Coloring

To further enhance the edges of the volume, voxels are darkened based on their orientation relative to the view direction. Voxels whose gradients are perpendicular to the view direction are colored black, whereas voxels whose gradients are more nearly parallel to the view direction are left unchanged. This achieves the effect of outlining the silhouette edges of the volume. Rather than simply coloring a voxel-fragment black, we mix between the diffuse color and black based on how perpendicular the gradient is. A threshold parameter determines which voxels are not darkened at all.
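The exact mapping from perpendicularity to darkness is not spelled out above, so the following Python is a sketch under stated assumptions: perpendicularity is taken as 1 - |n · v|, samples at or below the threshold keep their diffuse color, and above it the color ramps linearly toward black (the GLSL `mix` built-in would do the same blend on the GPU). `edge_color` and its default threshold are hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def edge_color(diffuse: RGB, gradient: Sequence[float], view_dir: Sequence[float],
               threshold: float = 0.5) -> RGB:
    """Darken a sample toward black as its gradient turns perpendicular to the view.

    perp = 1 - |n . v| is 0 when the gradient faces the viewer and 1 when it is
    perpendicular. Samples with perp <= threshold keep their diffuse color; above
    the threshold the color is linearly mixed toward black.
    """
    glen = math.sqrt(sum(c * c for c in gradient))
    vlen = math.sqrt(sum(c * c for c in view_dir))
    if glen == 0.0 or vlen == 0.0:
        return diffuse  # no orientation information: leave the sample unchanged
    perp = 1.0 - abs(sum(g * v for g, v in zip(gradient, view_dir))) / (glen * vlen)
    if perp <= threshold:
        return diffuse
    t = (perp - threshold) / (1.0 - threshold)  # 0 at the threshold, 1 when fully edge-on
    return tuple((1.0 - t) * c for c in diffuse)  # GLSL equivalent: mix(diffuse, black, t)


print(edge_color((0.8, 0.6, 0.4), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))  # prints (0.0, 0.0, 0.0)
```

Because `perp` never exceeds 1, the early return also covers `threshold = 1.0`, so the division cannot be by zero.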

Here diffuse lighting is turned off, and the only voxel-samples that are darkened are those whose gradients are more nearly perpendicular to the view direction. Notice the dark edges around the nose, ear, brow and eyelids.

The volume with no shading is presented for comparison. Without edge coloring, all features except the outline of the volume are lost.

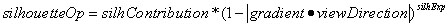

Tone Shading

We implemented the Gooch shading model in order to better accentuate dim features. In the Gooch model, colors range from cool tones to warm tones rather than from dark to light. The user can specify the cool and warm colors and their contributions to the final color:

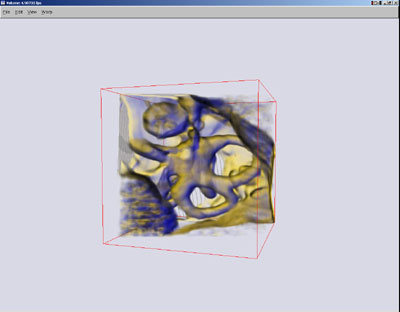

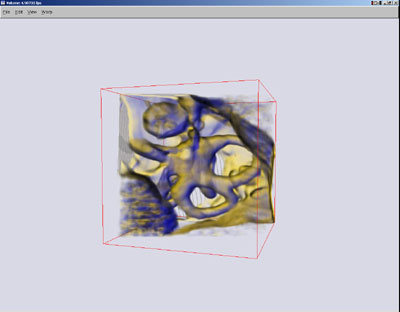

Here the tone-shaded volume is compared with the simple diffuse-shaded volume. In the tone-shaded volume the cool color is pure blue and the warm color is pure yellow. On close inspection, the center curve of the brow is more clearly defined in the tone-shaded volume. (The two volumes are viewed from slightly different viewpoints.)
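The Gooch model is commonly written as k_cool = cool + α·base, k_warm = warm + β·base, and color = t·k_warm + (1 - t)·k_cool with t = (1 + n · l) / 2. The Python below is a minimal sketch of that formulation, assuming α and β are the user's "contribution" weights; the defaults, the clamping to [0, 1] and the function names are assumptions, not taken from our shader.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def _unit(v: Sequence[float]) -> Tuple[float, ...]:
    """Return v scaled to unit length (a zero vector stays zero)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0.0 else tuple(0.0 for _ in v)


def gooch_tone(normal: Sequence[float], light_dir: Sequence[float], base: RGB,
               cool: RGB = (0.0, 0.0, 1.0), warm: RGB = (1.0, 1.0, 0.0),
               alpha: float = 0.2, beta: float = 0.6) -> RGB:
    """Cool-to-warm shading: t = (1 + n . l) / 2 blends k_cool (t = 0) to k_warm (t = 1)."""
    n, l = _unit(normal), _unit(light_dir)
    t = (1.0 + sum(a * b for a, b in zip(n, l))) / 2.0
    k_cool = [c + alpha * b for c, b in zip(cool, base)]
    k_warm = [w + beta * b for w, b in zip(warm, base)]
    # Clamp each channel to [0, 1] as a fixed-point framebuffer would.
    return tuple(min(1.0, max(0.0, t * kw + (1.0 - t) * kc)) for kw, kc in zip(k_warm, k_cool))


print(gooch_tone((1, 0, 0), (0, 0, 1), (0.0, 0.0, 0.0)))  # surface edge-on to the light: prints (0.5, 0.5, 0.5)
```

Unlike diffuse shading, surfaces facing away from the light (n · l < 0) still receive the cool color instead of going black, which is why dim features stay legible.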

Toon Shading

With toon shading, the volume is rendered with four colors, chosen according to the light's intensity at each point, giving a cartoon-like effect. The user supplies a color that serves as the darkest of the four, and the other three colors are interpolated between the supplied color and pure white.
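A minimal sketch of the four-tone scheme, assuming the diffuse term max(0, n · l) is split into four equal bands and that the three lighter tones sit at 1/4, 2/4 and 3/4 of the way from the user color to white (so white itself is never reached). The band boundaries and the names `toon_palette` and `toon_shade` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def toon_palette(base: RGB) -> List[RGB]:
    """Four tones: the user color (darkest) plus three steps toward white."""
    return [tuple(c + (1.0 - c) * i / 4.0 for c in base) for i in range(4)]


def toon_shade(normal: Sequence[float], light_dir: Sequence[float], base: RGB) -> RGB:
    """Quantize the diffuse term max(0, n . l) into one of four bands."""
    nlen = math.sqrt(sum(c * c for c in normal))
    llen = math.sqrt(sum(c * c for c in light_dir))
    if nlen == 0.0 or llen == 0.0:
        return base  # no orientation: use the darkest tone
    intensity = max(0.0, sum(a * b for a, b in zip(normal, light_dir)) / (nlen * llen))
    band = min(int(intensity * 4.0), 3)  # 0..3; intensity 1.0 falls in the top band
    return toon_palette(base)[band]


print(toon_palette((1.0, 0.0, 0.0)))
# prints [(1.0, 0.0, 0.0), (1.0, 0.25, 0.25), (1.0, 0.5, 0.5), (1.0, 0.75, 0.75)]
```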

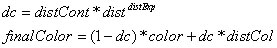

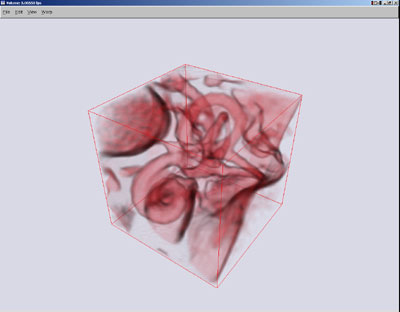

Here the chosen color was pure red. The varying levels of contrast provide an interesting, more artistic effect.

Distance Coloring

For this method the color of each voxel-sample in the volume is modified based on its depth into the volume relative to the viewer. The depth value ranges between 0 and 1, where a depth of 1 corresponds to a voxel that is one diagonal's length into the volume:

In this equation, "dc" is the modulated contribution of the distance color (distCol), and "color" is the color accumulated so far by the other methods.
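A minimal sketch of distance coloring, modeled on the Ebert and Rheingans blend c = (1 - k_ds d^k_de) c_v + k_ds d^k_de c_b with distCol standing in for c_b. It assumes dc = k_ds · d^k_de and measures depth from the volume's nearest corner along the view direction; `near_depth`, `k_ds`, `k_de` and both function names are illustrative assumptions.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def depth_fraction(sample_depth: float, near_depth: float, diagonal: float) -> float:
    """Depth into the volume along the view direction, in units of the volume diagonal.

    near_depth is the eye-space depth of the volume's nearest corner; the result is
    clamped to [0, 1], so 1 means one full diagonal (or more) into the volume.
    """
    return min(max((sample_depth - near_depth) / diagonal, 0.0), 1.0)


def distance_color(color: RGB, dist_col: RGB, depth: float,
                   k_ds: float = 1.0, k_de: float = 1.0) -> RGB:
    """Blend the accumulated color toward dist_col as depth increases."""
    dc = k_ds * depth ** k_de  # modulated distance-color contribution
    return tuple((1.0 - dc) * c + dc * d for c, d in zip(color, dist_col))


red, green = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
print(distance_color(red, green, depth_fraction(3.0, 1.0, 4.0)))  # prints (0.5, 0.5, 0.0)
```

Normalizing by the diagonal keeps the depth within [0, 1] for every view direction, since no two points in the volume are farther apart than its diagonal.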

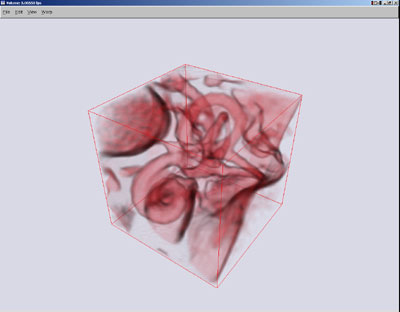

In this image the rectangular volume is colored pure red and the distance color is pure green. As one might expect, the volume becomes greener in its farther parts.

Laplacian Filter

The Laplacian filter is a second-order spatial derivative filter that highlights regions of rapid intensity change. We stored the filtered values in a volume texture in order to enhance significant features, such as creases. Our trials met with limited success, as they only worked on contrived geometric examples.
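The stencil used to build our Laplacian texture is not stated above; the Python below is a minimal sketch assuming the common 6-neighbour discrete Laplacian (sum of the face neighbours minus six times the center), with clamp-to-edge handling at the border. `laplacian` is a hypothetical name.

```python
from typing import List

Volume = List[List[List[float]]]  # indexed vol[z][y][x]


def laplacian(vol: Volume) -> Volume:
    """Discrete 6-neighbour Laplacian: sum of face neighbours minus 6 x center.

    Border voxels reuse the nearest in-range value (clamp-to-edge addressing).
    """
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])

    def at(z: int, y: int, x: int) -> float:
        return vol[min(max(z, 0), nz - 1)][min(max(y, 0), ny - 1)][min(max(x, 0), nx - 1)]

    out = [[[0.0] * nx for _ in range(ny)] for _ in range(nz)]
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                neighbours = (at(z, y, x - 1) + at(z, y, x + 1) +
                              at(z, y - 1, x) + at(z, y + 1, x) +
                              at(z - 1, y, x) + at(z + 1, y, x))
                out[z][y][x] = neighbours - 6.0 * at(z, y, x)
    return out


print(laplacian([[[0.0, 1.0, 0.0]]]))  # a one-voxel ridge: prints [[[1.0, -2.0, 1.0]]]
```

A linear intensity ramp gives 0 in the interior, so only changes in the slope, such as ridges and creases, produce large positive or negative values.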

In this image we used the values of the Laplacian texture to determine whether a fragment should be colored black: if a voxel-sample's Laplacian value was relatively high or relatively low, it was colored black. Notice that on some of the outside creases certain pixels are green. This occurs because the Laplacian values for those voxel-samples fell just outside the range that triggers black coloring. However, widening the range to include these samples would also color other pixels black, such as the sides of the volume. A more useful method would require further research into second-order derivatives.
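The black-or-keep test described above can be sketched as a simple band check. The cutoffs `low` and `high` are hypothetical; the values actually used for the image are not given. A sample whose Laplacian lands just inside the band keeps its color, which matches the green crease pixels.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def laplacian_ink(color: RGB, lap: float, low: float, high: float) -> RGB:
    """Color a sample black when its Laplacian is relatively low (< low) or high (> high)."""
    if lap < low or lap > high:
        return (0.0, 0.0, 0.0)
    return color


green = (0.0, 1.0, 0.0)
print(laplacian_ink(green, -2.0, -1.0, 1.0))   # strong crease response: prints (0.0, 0.0, 0.0)
print(laplacian_ink(green, -0.95, -1.0, 1.0))  # just inside the band: stays green
```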

More Results

This is a volume of an inner ear with only edge coloring turned on. The edge coloring helps one distinguish between structures; however, it fails to provide depth cues.

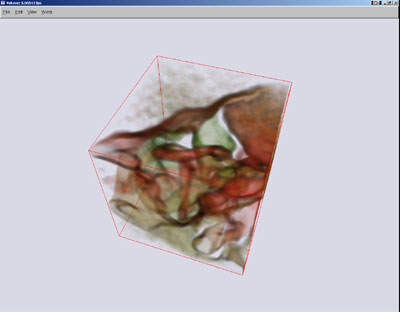

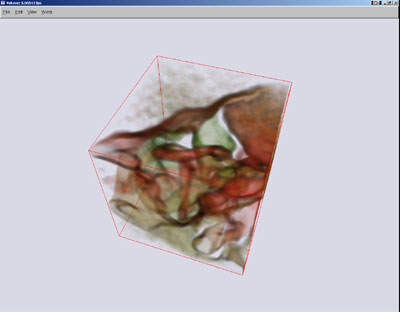

Here is the same volume with boundary opacity enhancement, edge coloring and distance coloring. This achieves a similar effect to that of the last rendering, but the distance coloring lets the user better sense which structures are in front. The boundary enhancement makes important structures more opaque.

Here we have the ear again with tone shading and edge coloring turned on, with a clipping plane removing the front of the volume. The tone shading does a good job of accentuating curvature.

Here is the MRI head with tone shading, edge coloring and a bit of the normal color added in. This rendering illustrates the ability to composite different effects.
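One way such layering could be ordered for a single sample is sketched below: first mix a small share of the unstylized color into the tone-shaded color, then apply the edge darkening factor. The order, the weight `base_weight` and the name `composite_effects` are assumptions for illustration, and `edge_t` stands for a darkening factor in [0, 1] such as the one produced by edge coloring.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def composite_effects(tone: RGB, base: RGB, edge_t: float, base_weight: float = 0.2) -> RGB:
    """Layer stylizations for one sample.

    1. Mix a little of the unstylized base color into the tone-shaded color.
    2. Darken the result toward black by the edge-coloring factor edge_t in [0, 1].
    """
    mixed = [(1.0 - base_weight) * t + base_weight * b for t, b in zip(tone, base)]
    return tuple((1.0 - edge_t) * c for c in mixed)


print(composite_effects((1.0, 1.0, 0.0), (0.0, 0.0, 1.0), edge_t=0.0, base_weight=0.5))  # prints (0.5, 0.5, 0.5)
```

Applying the edge darkening last keeps outlines black regardless of how the underlying colors were mixed.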

Source Code

For those interested, here is the source for our GLSL fragment and vertex shaders:

NPR.frag

NPR.vert