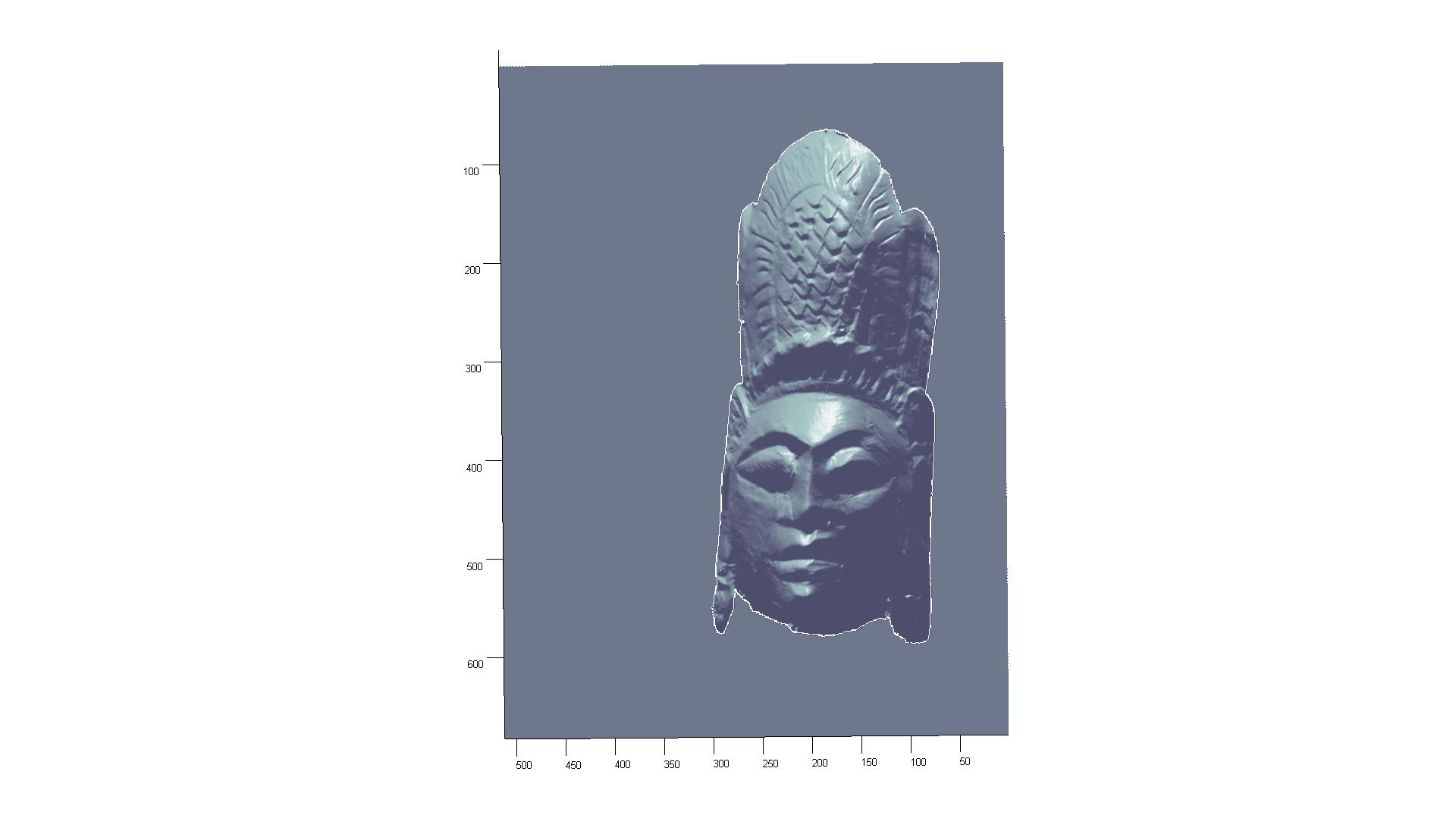

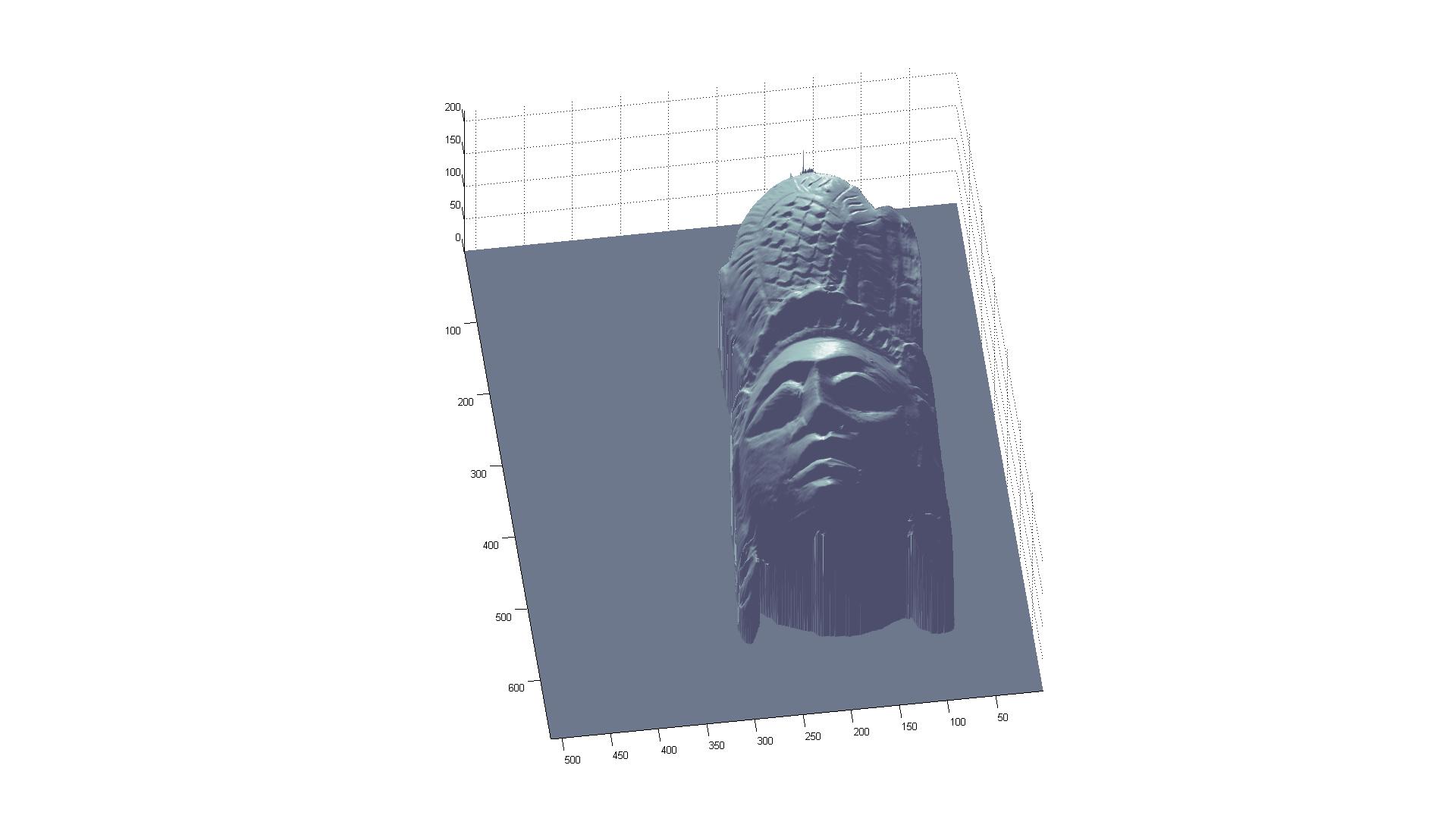

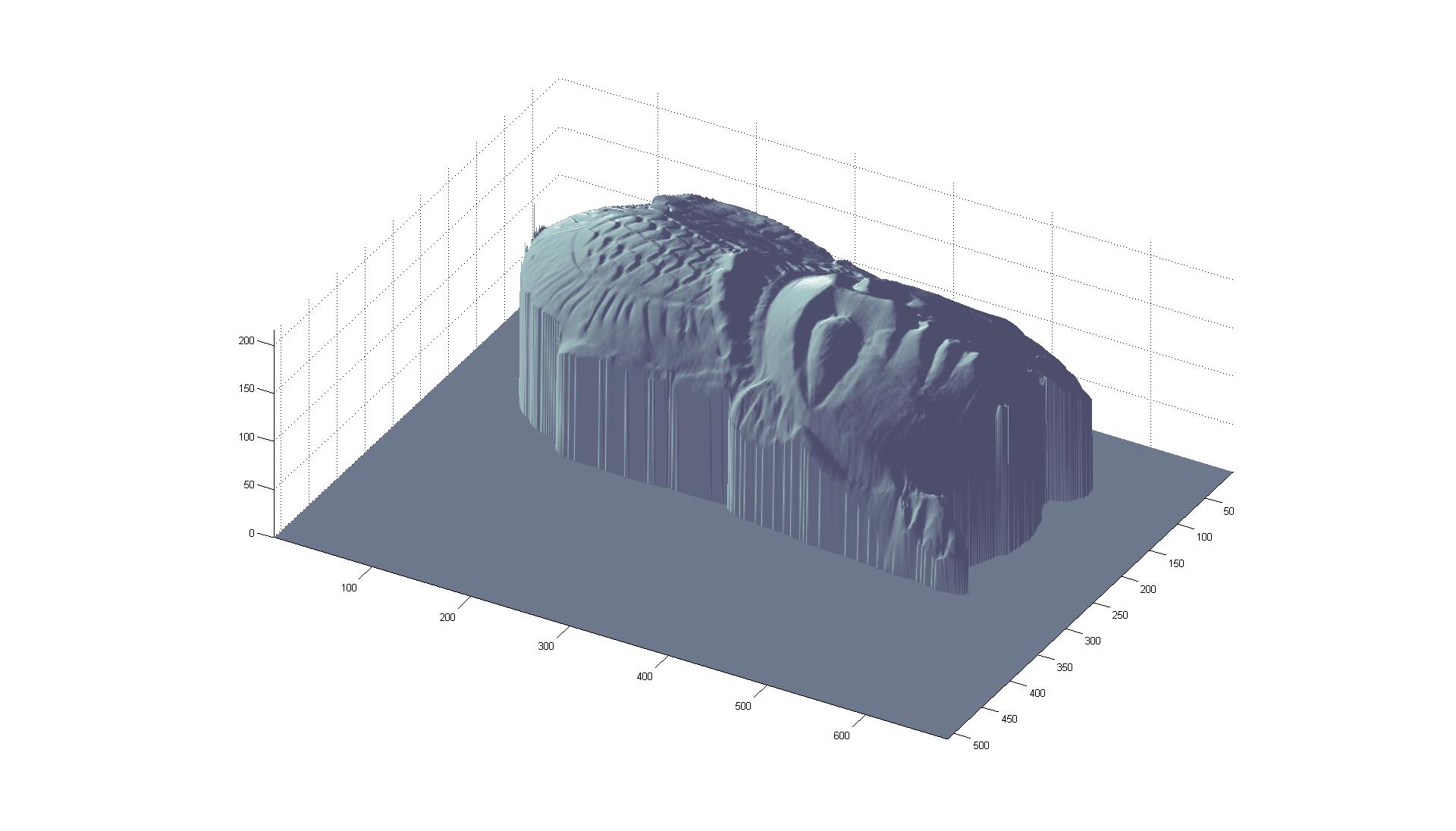

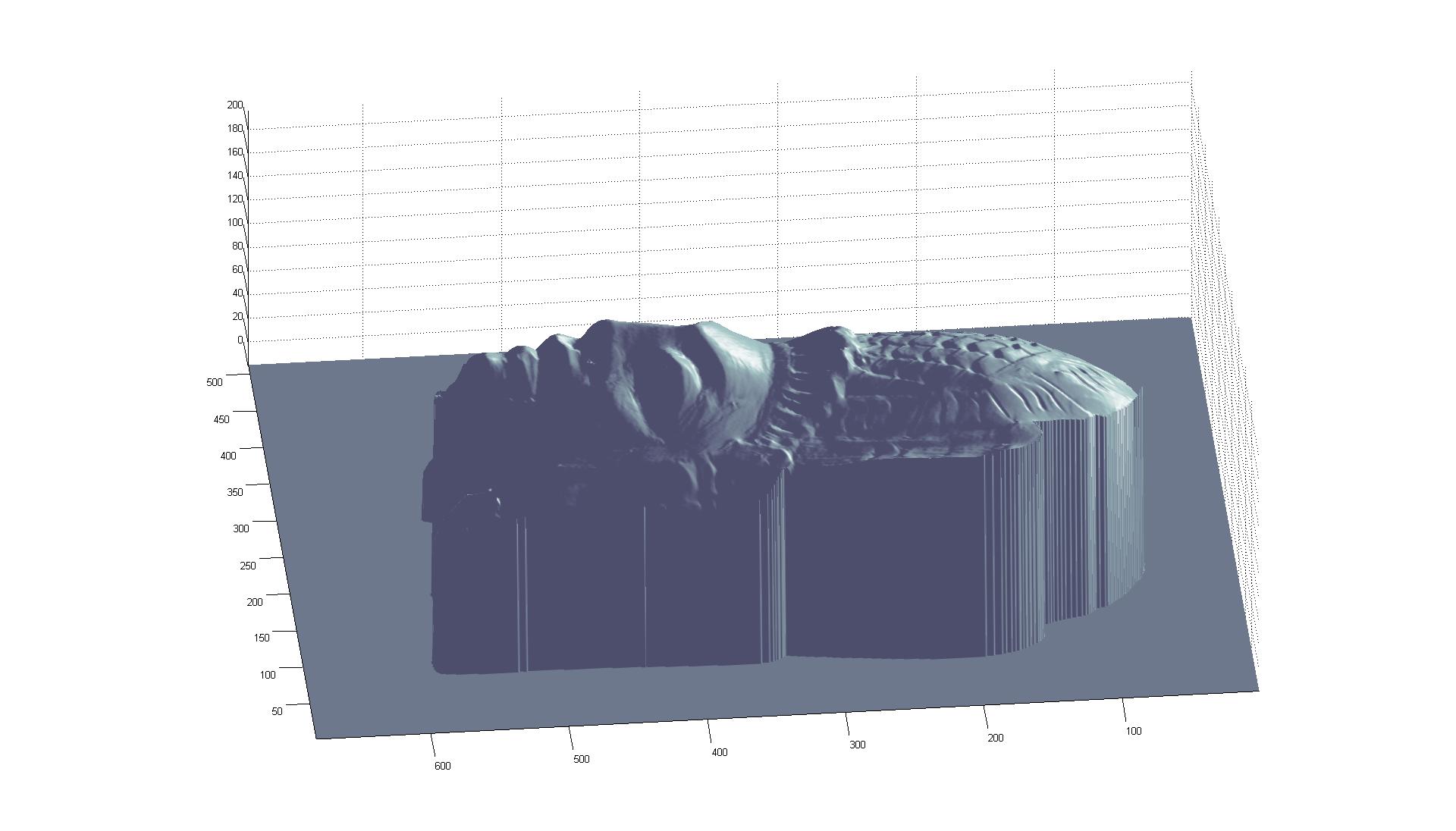

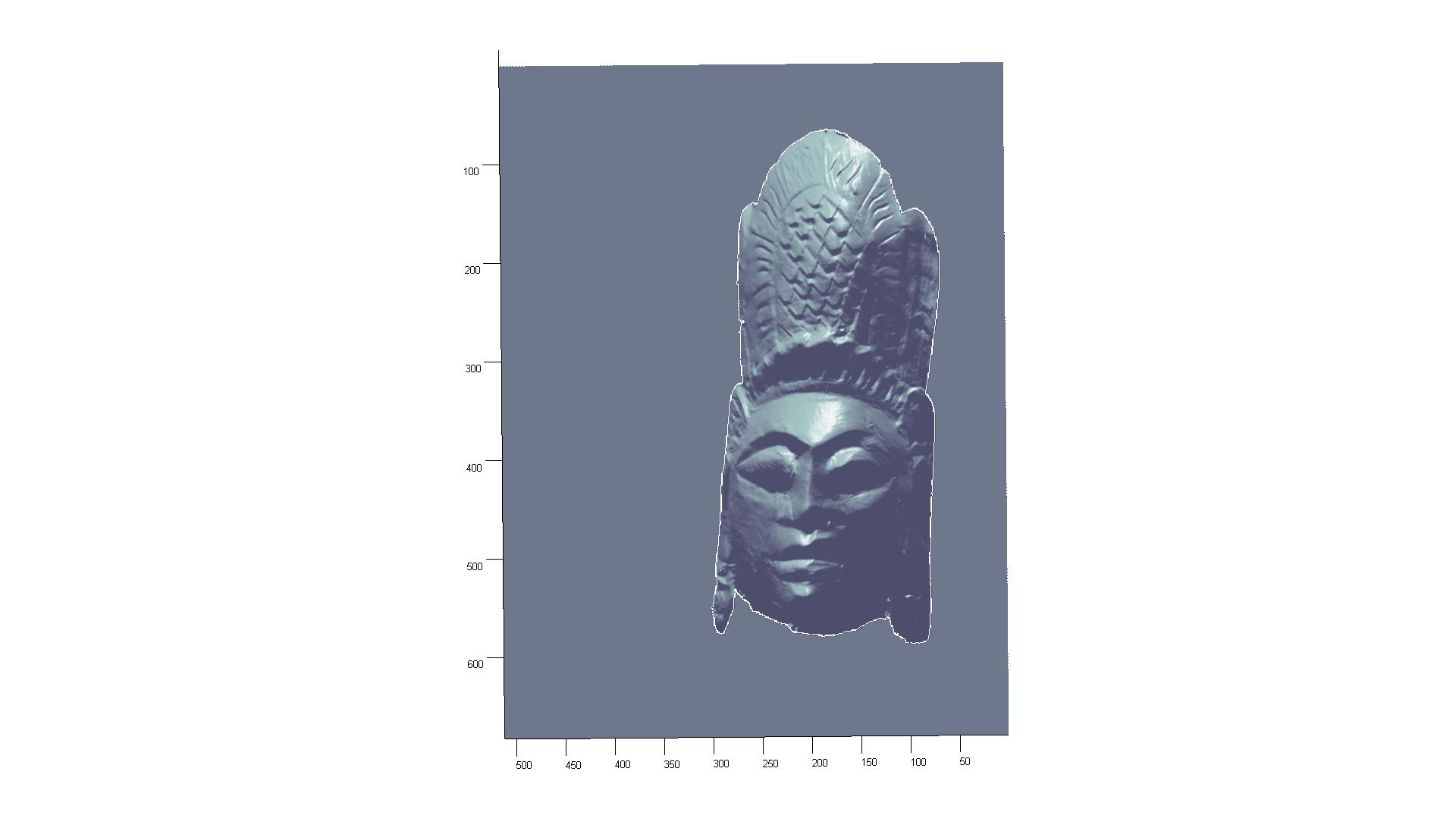

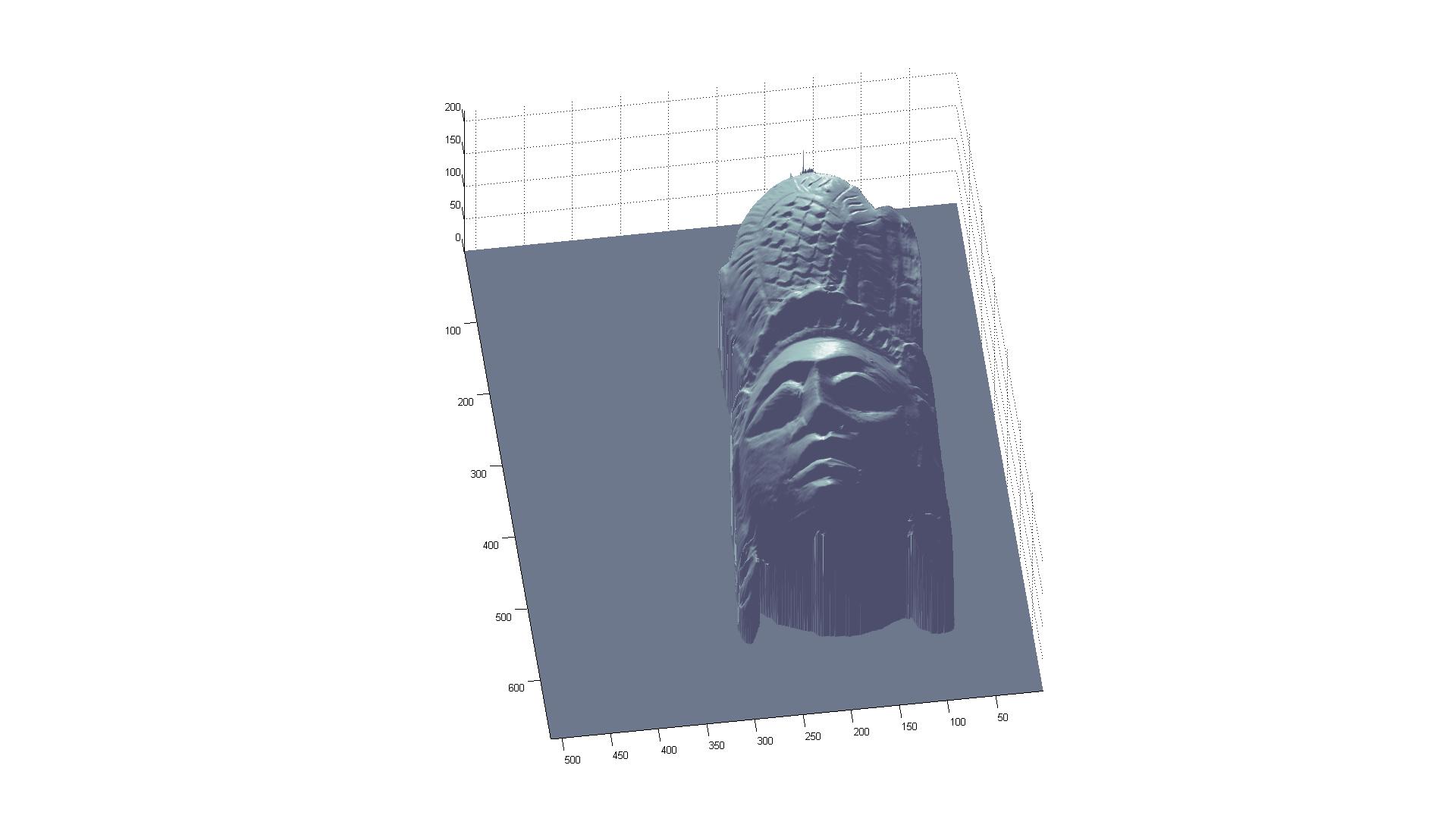

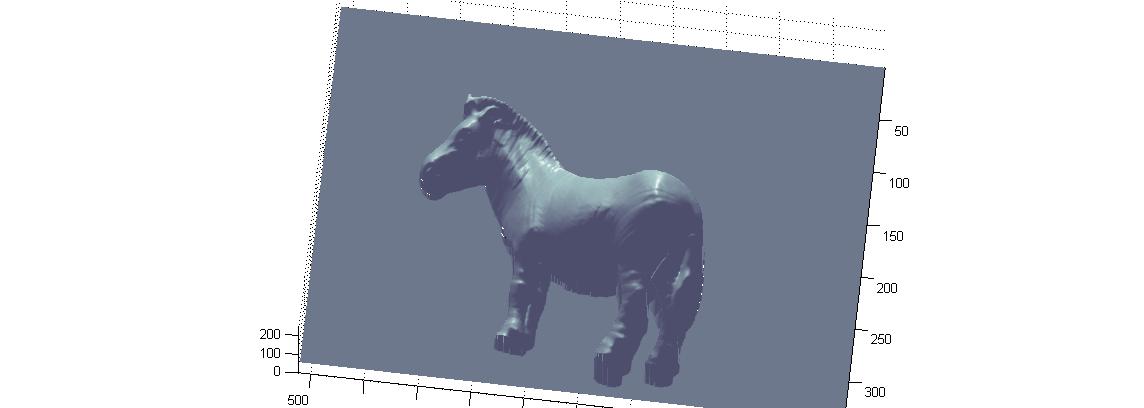

In this project we implemented Woodham's photometric stereo algorithm using provided source images of objects lit by a light source that was moved between shots, together with a series of images of a specular sphere under identical lighting conditions, which serves as a reference with known normals. We used a similar setup to generate additional results with novel objects. Sajika worked on solving for the normals and the albedo; Michael worked on the surface reconstruction and the report.
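To illustrate the normal and albedo step, here is a minimal sketch of the per-pixel least-squares solve, assuming a Lambertian model in which each intensity is `I_k = albedo * (L_k . n)` and the light directions have already been estimated. The function names `solve3` and `normal_and_albedo` are hypothetical and are not taken from our project code; the sketch uses plain Python so that it runs without extra libraries.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a x = b with Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are degenerate (nearly coplanar)")
    x = []
    for col in range(3):
        # Replace one column of a with b, as Cramer's rule requires.
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det(m) / d)
    return x


def normal_and_albedo(lights, intensities):
    """Fit g = albedo * normal for one pixel from three or more lights.

    lights: list of unit light directions (lx, ly, lz), assumed known.
    intensities: the pixel's brightness under each light, same order.
    """
    # Normal equations of the least-squares problem: (L^T L) g = L^T I
    ltl = [[sum(l[i] * l[j] for l in lights) for j in range(3)]
           for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(lights, intensities))
           for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        # A completely dark pixel carries no orientation information.
        return 0.0, [0.0, 0.0, 1.0]
    return albedo, [c / albedo for c in g]
```

The length of the fitted vector gives the albedo and its direction gives the normal. Shadowed or specular measurements violate the Lambertian assumption and would need to be excluded before the fit.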


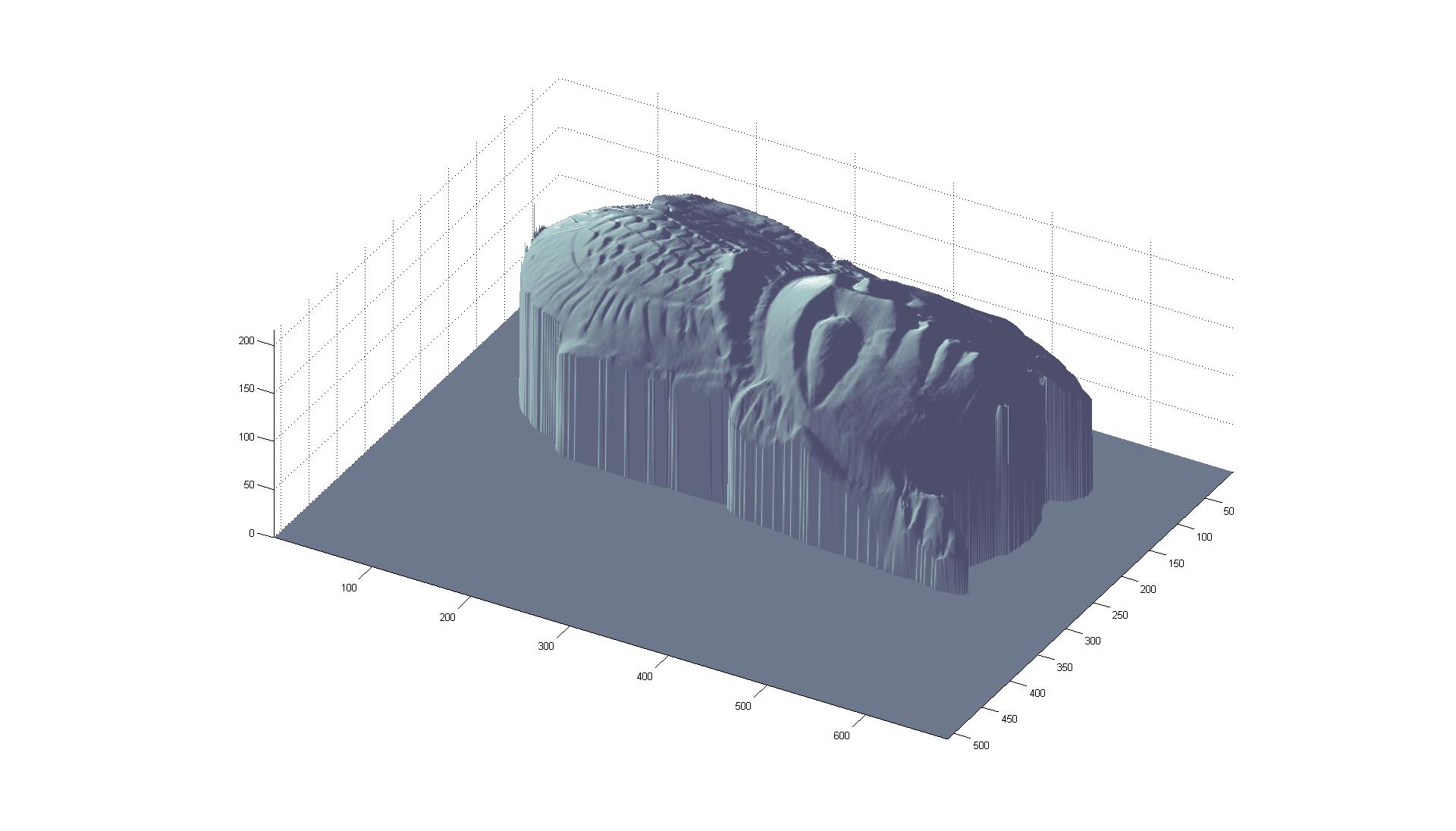

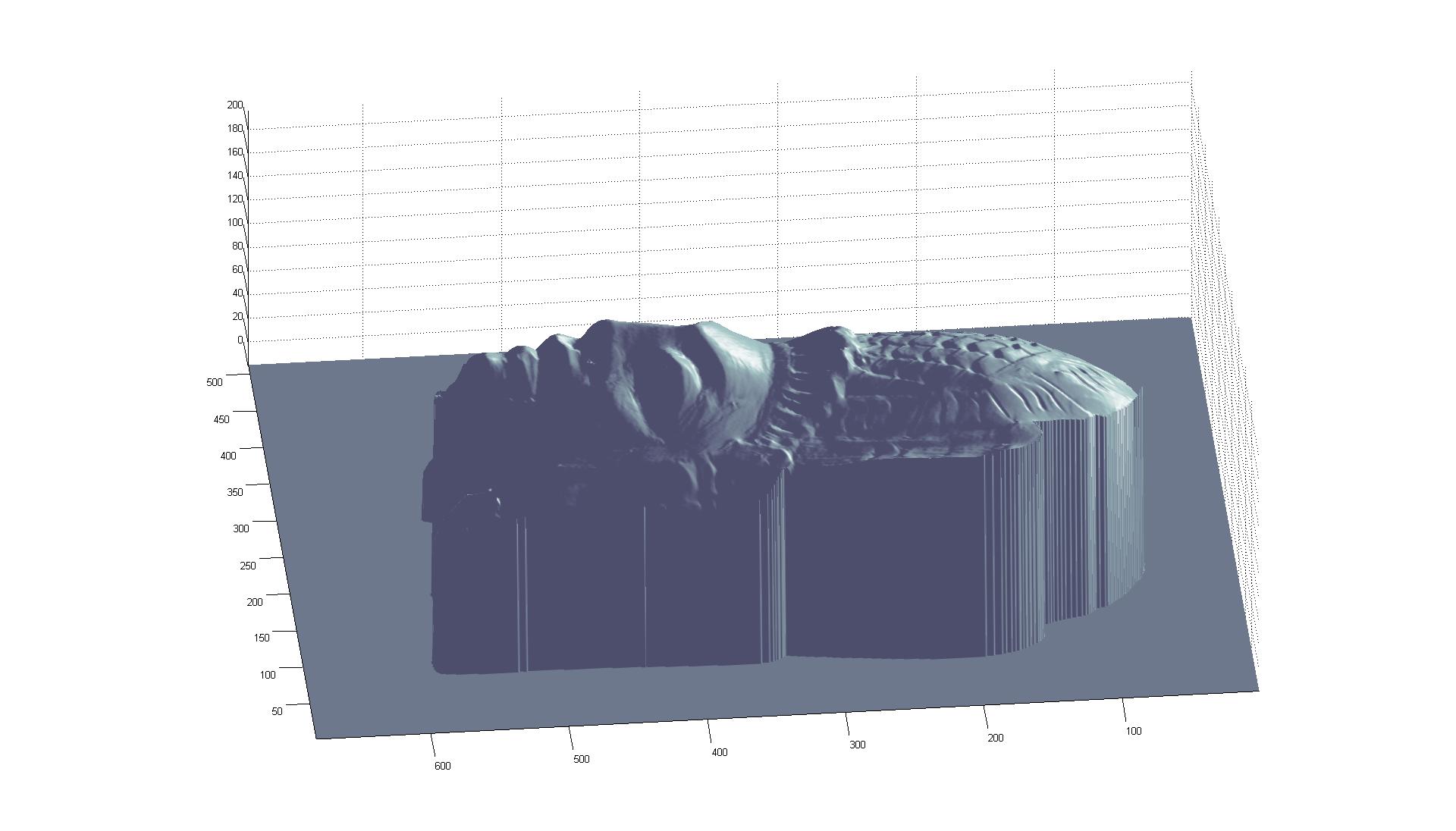

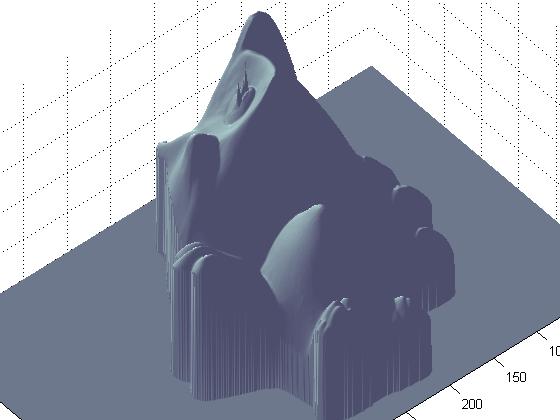

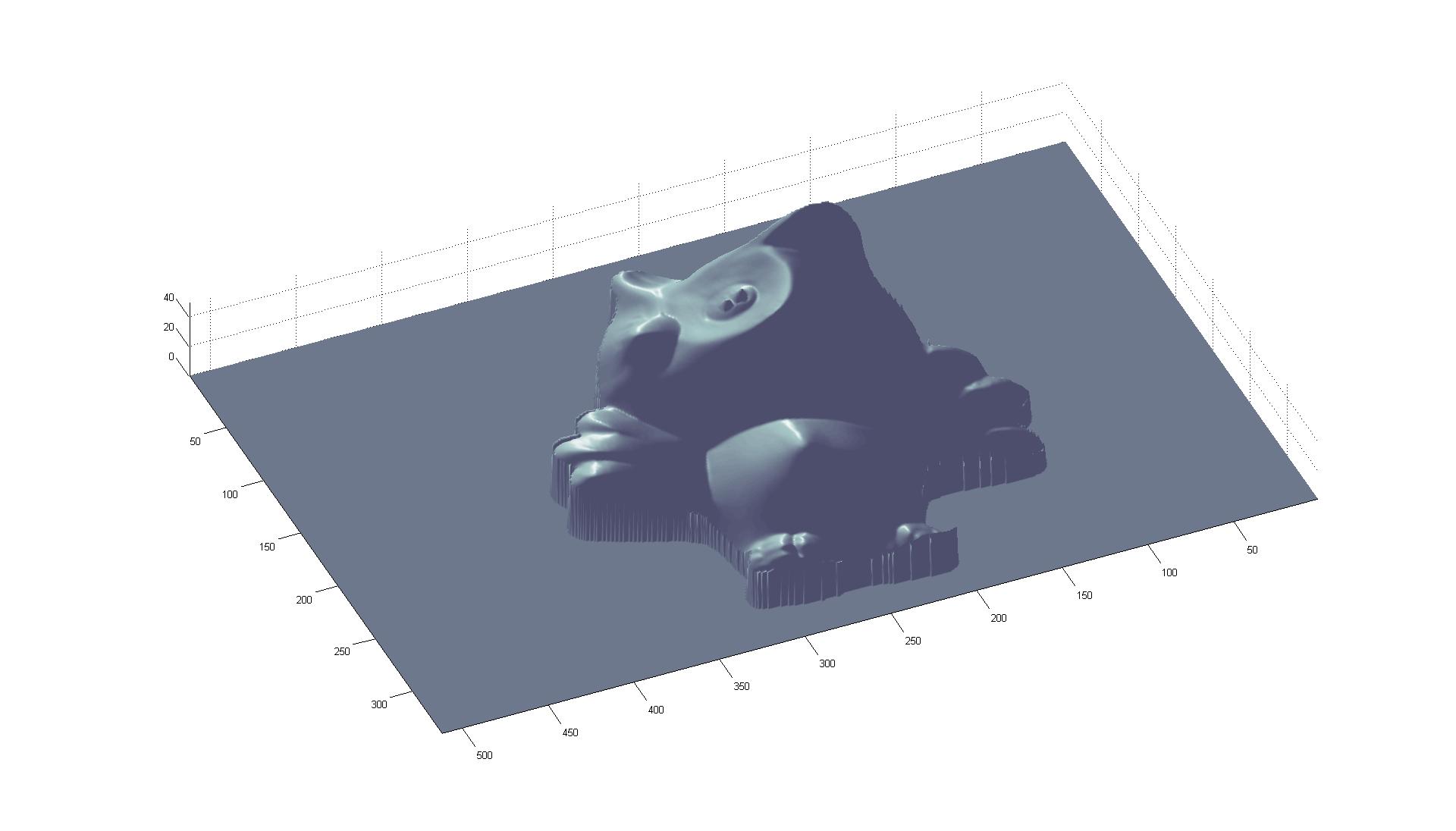

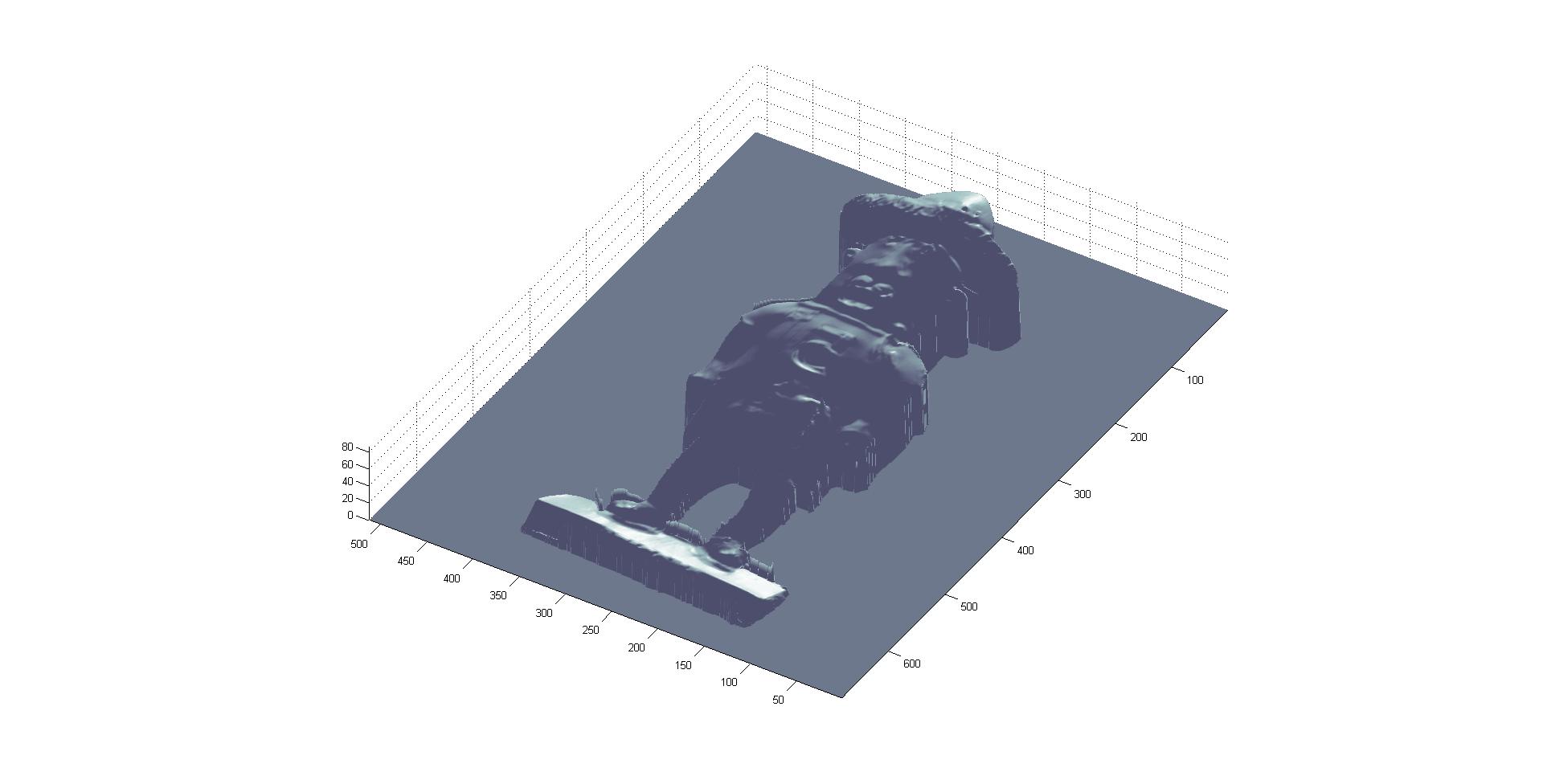

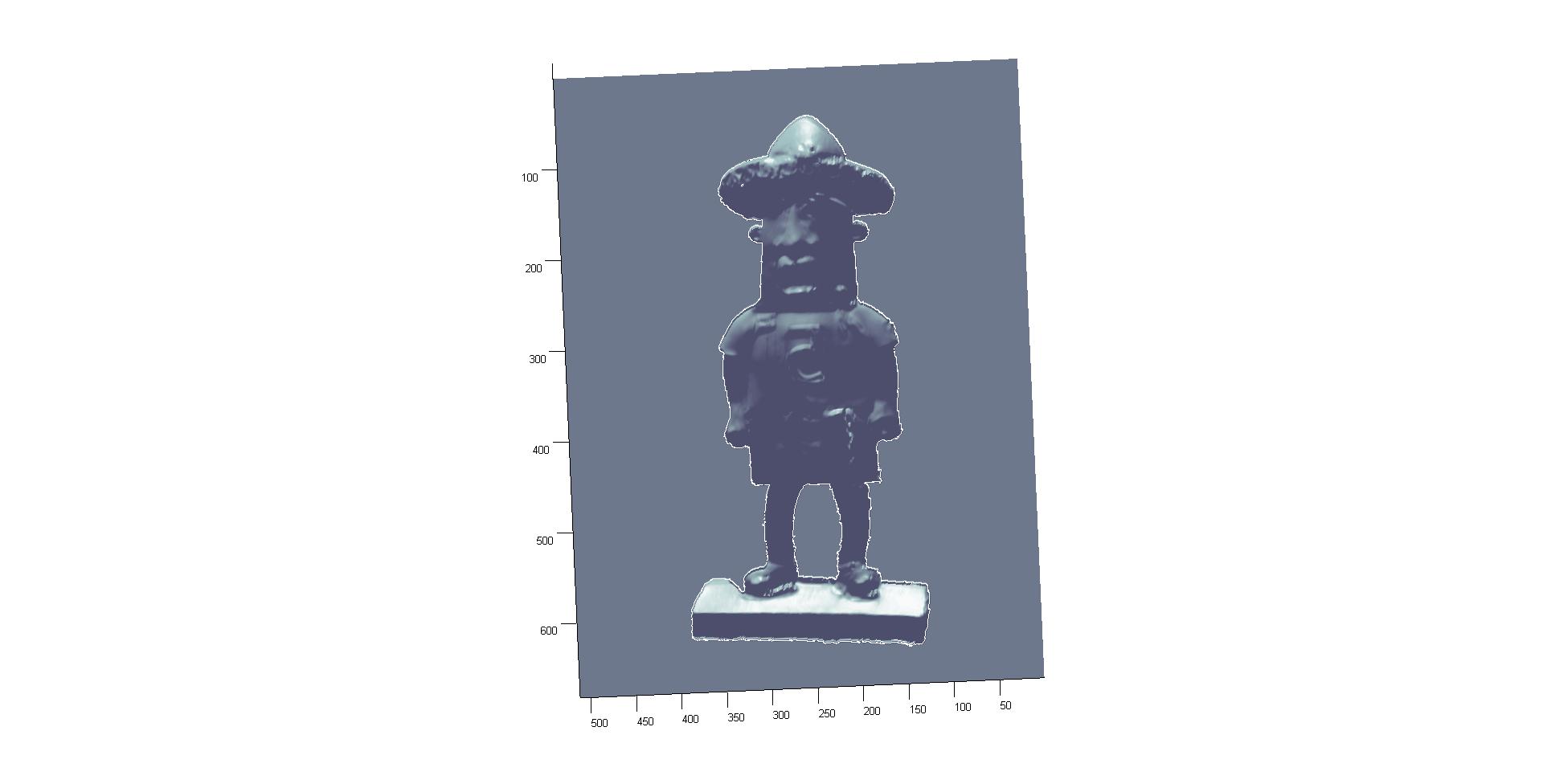

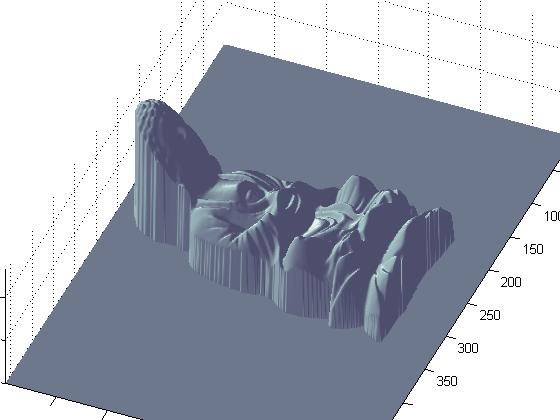

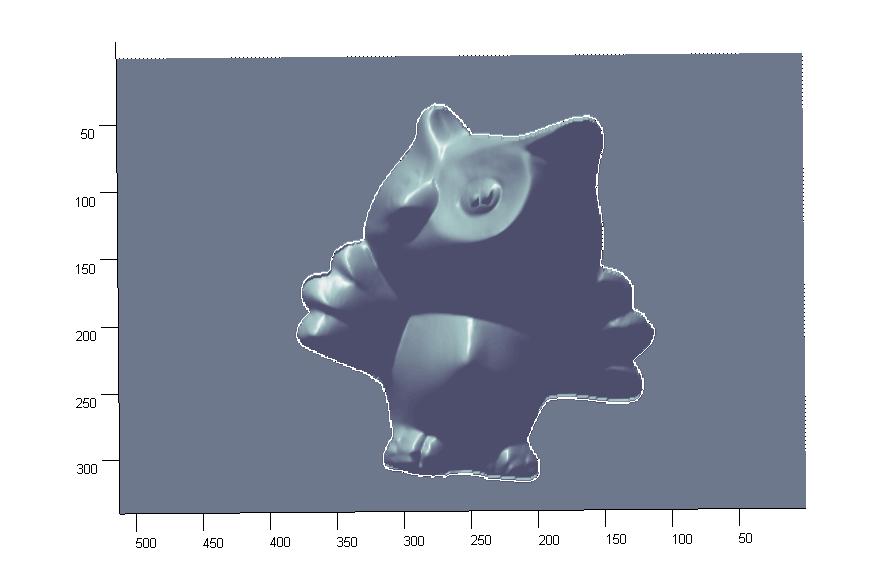

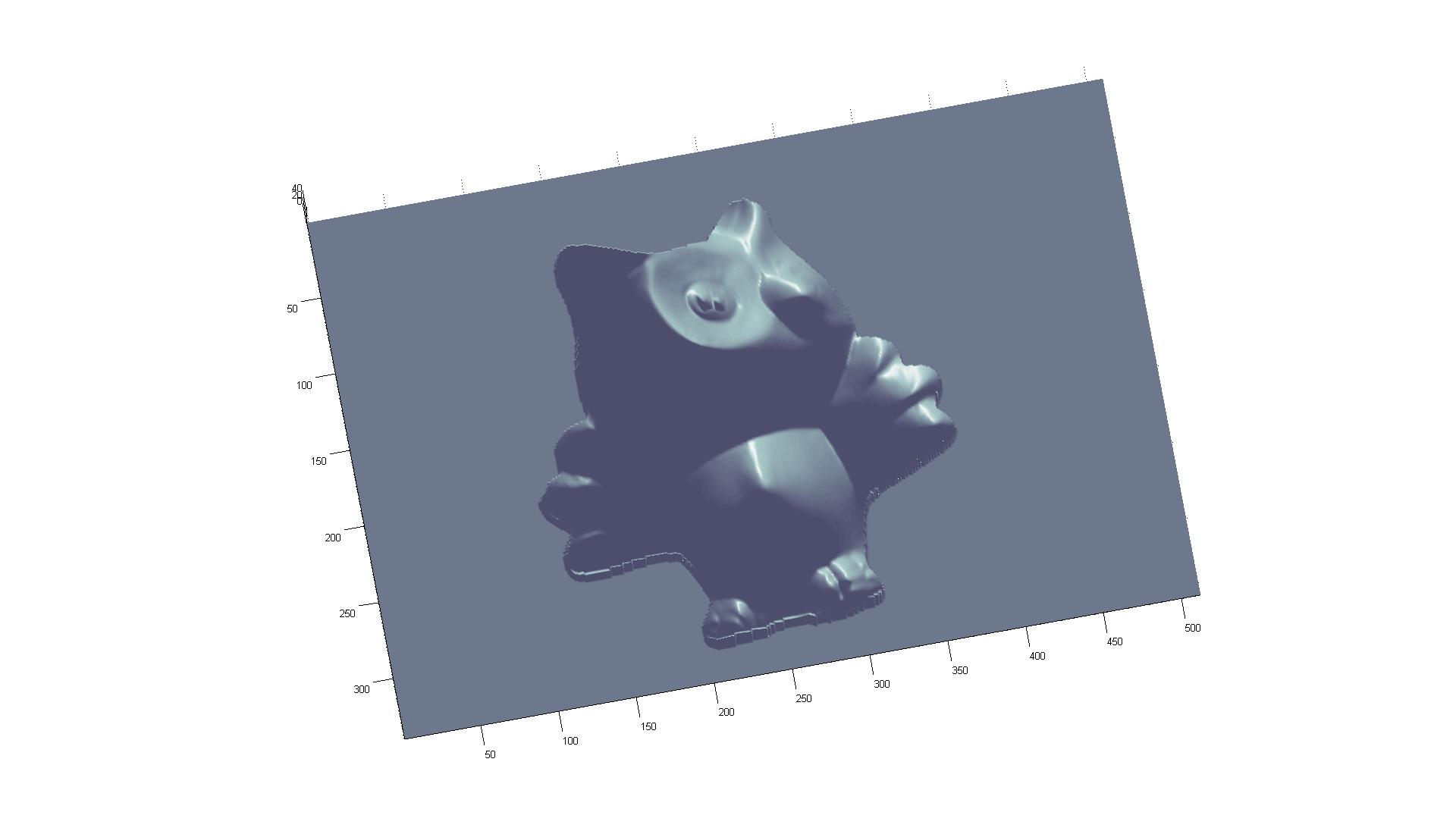

We made two modifications to the surface reconstruction code. First, we added the ability to convolve the resulting surface map with a Gaussian to remove high frequencies. Second, we used the albedo intensity to set a brightness threshold for the surface reconstruction (e.g. dark pixels can be excluded from the constraint matrix, which removes much of the edge noise). The smoothing gets rid of some artifacts caused by specular highlights, especially in the eyes of the owl, where the formerly high-frequency spikes are now smaller bumps.
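The two modifications can be sketched roughly as follows. This is an illustrative plain-Python version, not our actual code: the function names, the clamped edge handling, and the 3-sigma kernel radius are all assumptions made for the example.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel, truncated at about 3 sigma (assumed)."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(grid, kernel):
    """Convolve every row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    width = len(grid[0])
    out = []
    for row in grid:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(grid):
    return [list(col) for col in zip(*grid)]


def smooth_heights(heights, sigma):
    """Separable Gaussian blur of a height map (list of rows)."""
    kernel = gaussian_kernel(sigma)
    blurred = convolve_rows(heights, kernel)
    # Blurring the transposed grid along rows blurs the original columns.
    return transpose(convolve_rows(transpose(blurred), kernel))


def albedo_mask(albedo, threshold):
    """True where a pixel is bright enough to contribute constraints."""
    return [[a >= threshold for a in row] for row in albedo]
```

A larger `sigma` removes more of the spikes but also flattens real detail, so the value has to be tuned per object. The mask would be consulted when building the constraint matrix, so that pixels marked `False` add no rows.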


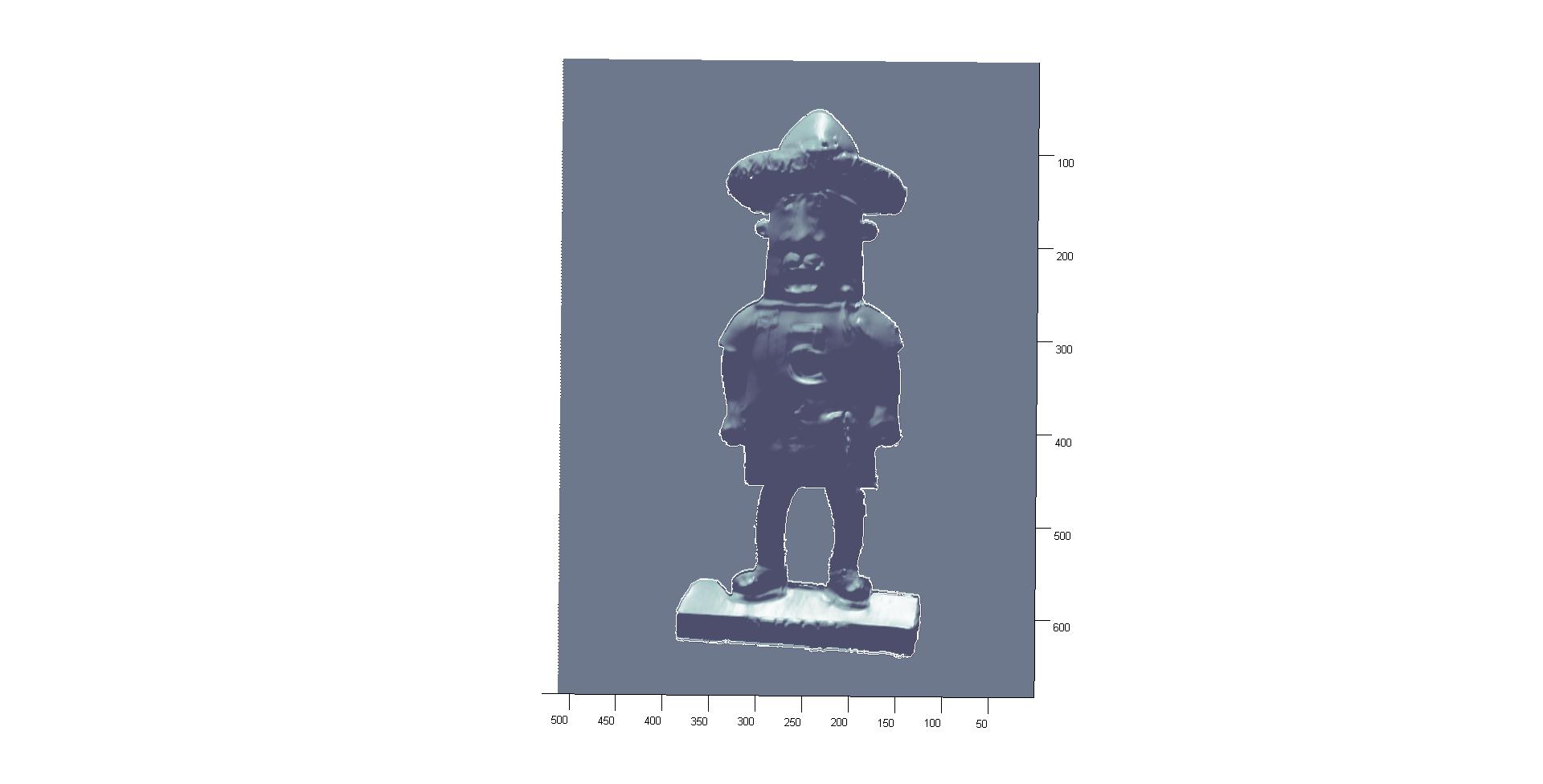

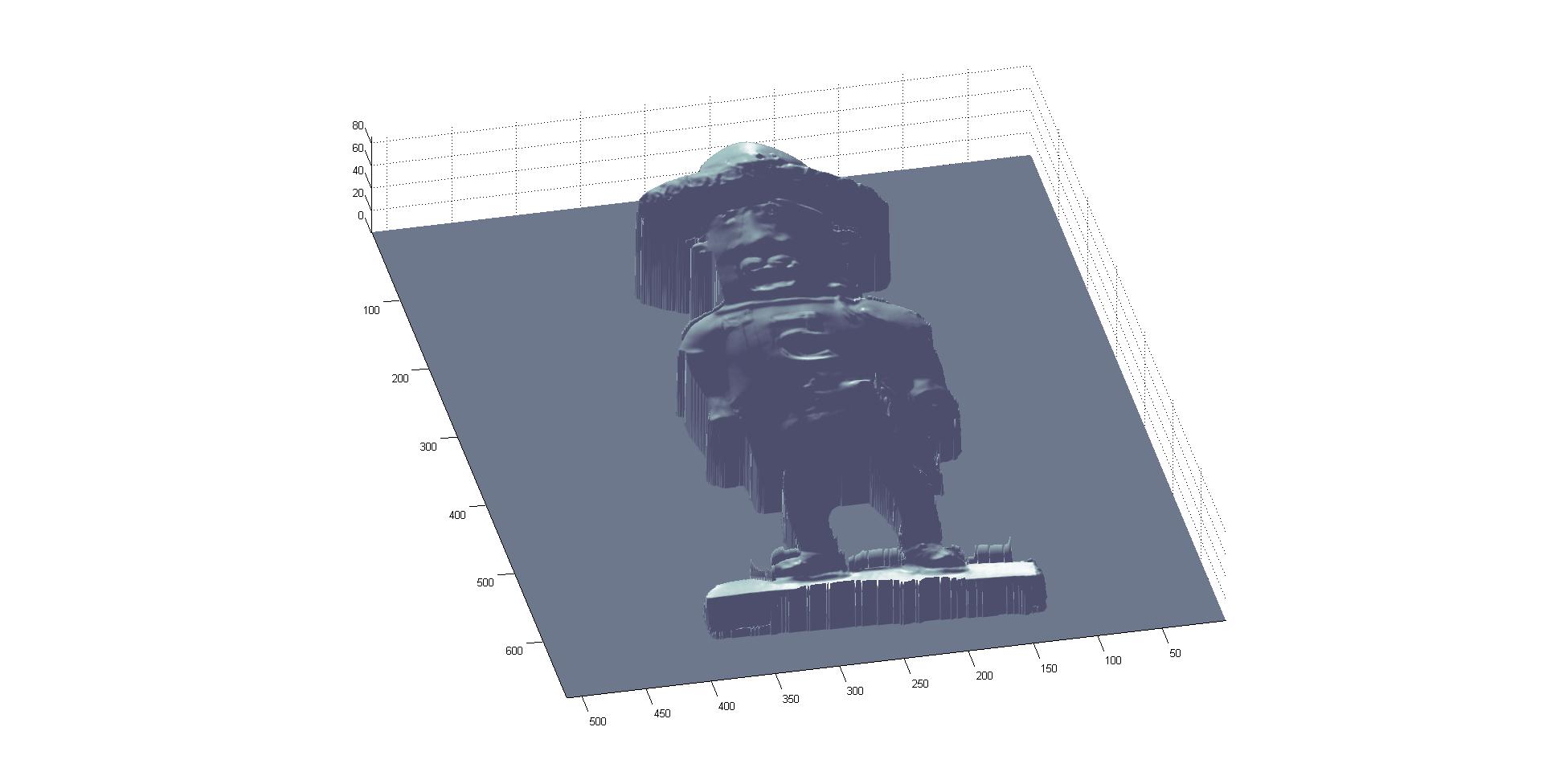

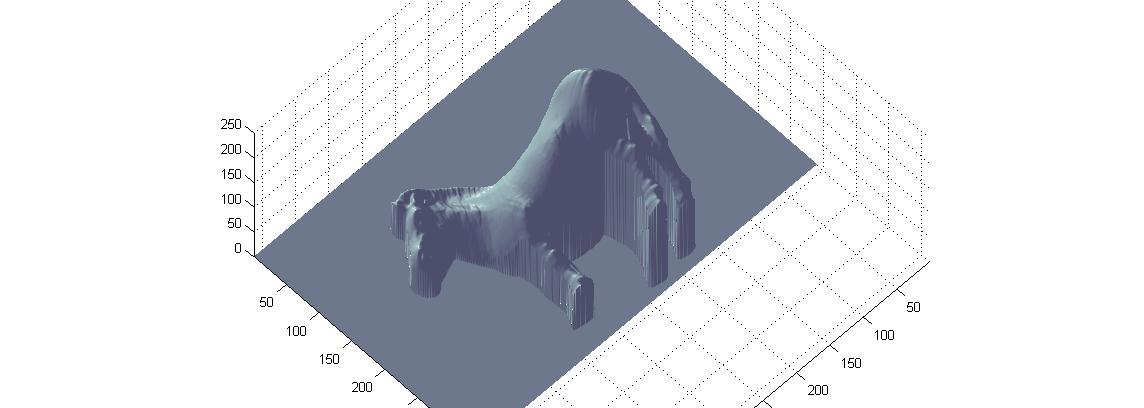

We also used a Christmas tree ornament and our own setup to take additional images of novel objects. We placed the chrome ball and the object side by side in the same scene, on a chair covered with a black backdrop. The camera was mounted on a tripod and set to a shutter speed of 1/250 s and an aperture of f/9, in a room with the lights turned off; these settings were chosen to minimize the effects of other light sources. The light source was an off-camera flash set to fire as a slave unit. We moved the flash between shots while trying to keep its distance to the scene constant, and used a remote control to trigger the camera.

Since our conditions were not as ideal as those of the source images (for instance, we lacked perfect darkness and precise ways to place the light), we used Photoshop to generate the image masks and to exaggerate (threshold) the specular highlights on our specular sphere, which helped in finding the lights. Since the precise lighting angles could not easily be duplicated in each round of shooting, we included the reference sphere and the object in the frame of each image, then used Photoshop to split the resulting images in half for later processing. Sajika handled most of the tasks with novel objects, including setting up, taking the pictures, and processing them in Photoshop, while Mike helped with the setup and with moving the light source.
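As a rough illustration of how a thresholded highlight leads to a light direction, the sketch below finds the centroid of the bright pixels and reflects the view direction about the sphere normal at that point. It assumes an orthographic camera looking along the z axis, a perfectly mirror-like sphere with known center and radius in pixels, and image coordinates with x to the right and y downward. The function names are hypothetical.

```python
import math


def highlight_centroid(image, threshold):
    """Centroid (x, y) of all pixels at or above threshold.

    image is a list of rows of grayscale values.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("no pixel reaches the threshold")
    return sum_x / count, sum_y / count


def light_direction(center, radius, highlight):
    """Unit light direction implied by a highlight on a mirror sphere.

    Assumes the viewer looks along v = (0, 0, 1) toward the camera
    (orthographic projection), in image coordinates.
    """
    nx = (highlight[0] - center[0]) / radius
    ny = (highlight[1] - center[1]) / radius
    nz_squared = 1.0 - nx * nx - ny * ny
    if nz_squared < 0.0:
        raise ValueError("highlight lies outside the sphere outline")
    nz = math.sqrt(nz_squared)
    # Mirror reflection of v about the normal n: L = 2 (n . v) n - v
    return [2.0 * nz * nx, 2.0 * nz * ny, 2.0 * nz * nz - 1.0]
```

Because the highlight position is divided by the radius, errors in the estimated sphere outline translate directly into errors in the light direction, which is one reason hand-made masks need care.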

Since this method was imprecise, certain reconstructions did not work very well. Objects that were too big to fit comfortably in the frame were one problem, and changes in lighting conditions also led to failures in capturing translucent objects. Placing the chrome ball beside the object caused some interreflections, which produced a strange highlight on the object.

With the curated images, our results were usually good. The exceptions were cases where specular highlights interfered (as with the owl's eyes) and cases where the image was sufficiently "odd" that solving for the surface reconstruction with the iterative pcg solver was not successful, as with the gray sphere images. In the latter case the results were still respectable, but more ovoid than sphere-like.
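For readers unfamiliar with the reconstruction step, the following is a small sketch of the general idea: each pair of neighboring pixels contributes a constraint on the height difference, and the resulting least-squares system is solved iteratively. It uses plain conjugate gradient on the normal equations with no preconditioner, which is a simplification and not the pcg call in our code. The sign convention `dz/dx = -nx/nz`, the pinning of the first pixel to height zero, and all function names are assumptions made for the example.

```python
def build_constraints(normals):
    """Return (rows, n) where each row is ([(index, coeff), ...], rhs)."""
    h, w = len(normals), len(normals[0])
    # Pin the first pixel to height 0 to remove the unknown global offset.
    rows = [([(0, 1.0)], 0.0)]
    for y in range(h):
        for x in range(w):
            nx, ny, nz = normals[y][x]
            if abs(nz) < 1e-6:
                continue  # normal is nearly parallel to the image plane
            i = y * w + x
            if x + 1 < w:
                rows.append(([(i + 1, 1.0), (i, -1.0)], -nx / nz))
            if y + 1 < h:
                rows.append(([(i + w, 1.0), (i, -1.0)], -ny / nz))
    return rows, h * w


def apply_a(rows, z):
    return [sum(c * z[i] for i, c in terms) for terms, _ in rows]


def apply_at(rows, r, n):
    out = [0.0] * n
    for (terms, _), value in zip(rows, r):
        for i, c in terms:
            out[i] += c * value
    return out


def solve_heights(rows, n, iterations=200, tol=1e-20):
    """Conjugate gradient on the normal equations A^T A z = A^T b."""
    b = [rhs for _, rhs in rows]
    z = [0.0] * n
    r = apply_at(rows, b, n)  # residual of the normal equations at z = 0
    p = r[:]
    rs = sum(v * v for v in r)
    for _ in range(iterations):
        if rs < tol:
            break
        ap = apply_at(rows, apply_a(rows, p), n)
        alpha = rs / sum(pi * api for pi, api in zip(p, ap))
        z = [zi + alpha * pi for zi, pi in zip(z, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = sum(v * v for v in r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return z
```

The returned list holds the heights in row-major order. On real images the system is much larger and noisier, so the iteration count and tolerance matter far more than they do in a toy case.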


The method works well for diffuse objects. Unfortunately, most objects in the real world are not totally diffuse, which makes it impractical for most applications. In addition, there are very few viable "failure modes": either the process worked well or it did not. Bug fixing in particular was difficult, as there is not a wide margin between "plausible, but flawed" results and "completely correct" results, unlike in the previous projects. Luckily, the conceptual underpinnings of the project were strong and simple enough to aid in understanding the inner workings of the code.