CS744 Assignment 1

Due: Sep 28, 2022, 11am Central Time

Overview

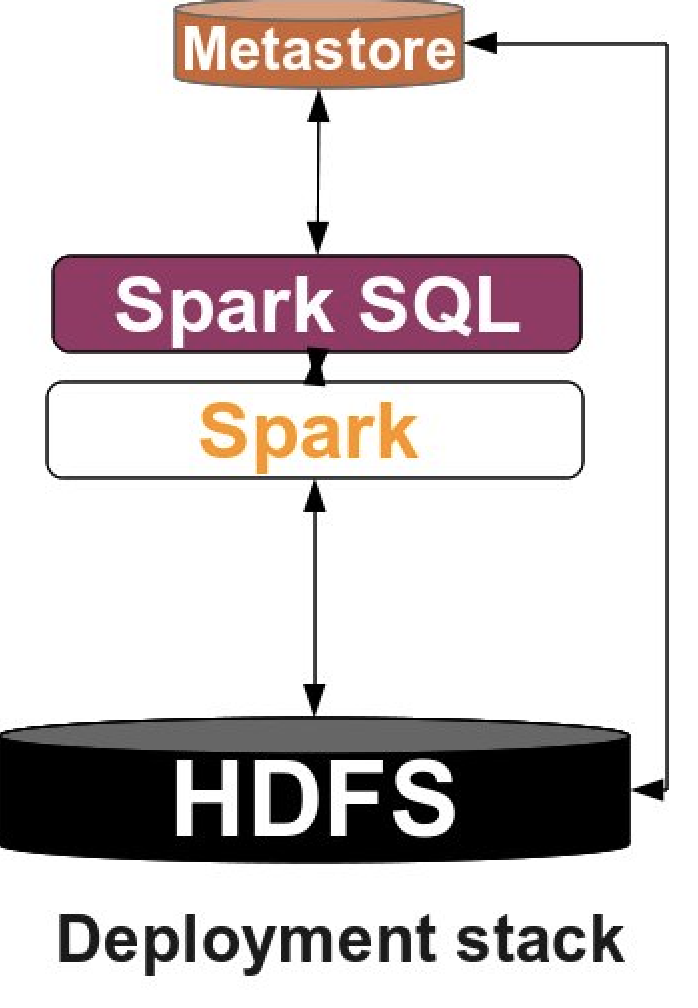

This assignment is designed to support your in-class understanding of how data analytics stacks work and get some hands-on experience in using them. You will need to deploy Apache Hadoop as the underlying file system and Apache Spark as the execution engine. You will then develop several small applications based on them. You will produce a short report detailing your observations and takeaways.

Learning Outcomes

After completing this programming assignment, you should be able to:

- Deploy and configure Apache Spark and HDFS.

- Write simple Spark applications and launch them in a cluster.

- Describe how Apache Spark and HDFS work, and interact with each other.

Environment Setup

You will complete your assignment in CloudLab. You can refer to Assignment 0 to learn how to use CloudLab. We suggest you create one experiment per group and work together. An experiment lasts 16 hours, which is very short. So, set a time frame where all your group members can sit together and focus on the project, or make sure to extend the experiment when it is necessary.

In this assignment, we provide you with a CloudLab profile called “cs744-fa22-assignment1” under the “UWMadison744-F22” project for you to start your experiment. The profile is a simple 3-node cluster with Ubuntu installed on each machine. While launching the experiment make sure to name it appropriately and use the correct group.

You get full control of the machines once the experiment is created, so feel free to download any missing packages you need in the assignment.

As the first step, you should run the following commands on every VM (node0, node1, and node2). You can find the ssh commands to log into each VM in the CloudLab experiment list view.

sudo apt updatesudo apt install openjdk-8-jdk- enable the SSH service among the nodes in the cluster. To do this, you have to generate a private/public key pair using:

ssh-keygen -t rsaon the leader node (we will use node0 as the leader in our description.) while the others are assigned as followers only. Then, manually copy the public key of node0 to theauthorized_keysfile in all the nodes (including node0) under~/.ssh/. To get the content of the public key, do:cat ~/.ssh/id_rsa.pubWhen you copy the content, copy the entire output of the above command, but make sure you do not append any newlines. Otherwise, it will not work.

NOTE: You may need to periodically recopy the key to the authorized files as CloudLab sometimes overwrites this data (if for example you notice all of a sudden Spark can no longer connect to a worker).

Once you have done this you can copy files from node0 to the other nodes

using tools like parallel-ssh. To use parallel-ssh you will

need to create a file with the hostnames of all the machines. The file with the hostnames can be created by running hostname on each node and using the output as a line in the hostnames file. More generally, lines in the host file are of the form [user@]host[:port]. You can test your parallel-ssh with a

command like:

parallel-ssh -i -h <host_file_name> -O StrictHostKeyChecking=no <command>

which will run command on each host. For example, you can use pwd.

Part 0: Mounting disks

Your home directory in the CloudLab machine is relatively small and can only hold 16GB of data. We have also enabled another mount point to contain around 96GB of space on each node which should be sufficient to complete this assignment.

However you need to create this mount point using the following commands (on each node).

> sudo mkfs.ext4 /dev/xvda4

# This formats the partition to be of type ext4

> sudo mkdir -p /mnt/data

# Create a directory where the filesystem will be mounted

> sudo mount /dev/xvda4 /mnt/data

# Mount the partition at the particular directory

After you complete the above steps you can verify this is correct by running

TA744@node2:~$ df -h | grep "data"

/dev/xvda4 95G 60M 90G 1% /mnt/data

Now you can use /mnt/data to store files in HDFS or to store shuffle data in Spark (see below). It helps to change the ownership of this directory so that sudo is not required for all future commands. You can identify the current user with the command who and then change the directory ownership with sudo chown -R <user> /mnt/data.

Part 1: Software Deployment

Apache Hadoop

Apache Hadoop is a collection of open-source software utilities that provide simple distributed programming models for processing of large data sets. It mainly consists of the Hadoop Distributed File System (HDFS), Hadoop MapReduce, and Hadoop YARN. In this assignment, we will only use HDFS. HDFS consists of a NameNode process running on the leader instance and a set of DataNode processes running on follower instances. The NameNode records metadata and handles requests. The DataNode stores actual data.

You can find the detailed deployment instructions in this link or you can follow our simplified version:

First, let’s download Hadoop on every machine in the cluster. Note that you can do this on node0 and then use parallel-ssh or parallel-scp to run the same command or copy data to all VMs.

wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.4/hadoop-3.3.4.tar.gz

tar zvxf hadoop-3.3.4.tar.gz

There are a few configuration files we need to edit (you can make these edits on node0 and then copy the files as described below). They are originally empty so users have to manually set them.

Add the following contents in the <property> field in hadoop-3.3.4/etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://namenode_IP:9000</value>

</property>

</configuration>

where namenode_IP refers to the IP address of node0. This configuration indicates where

the NameNode will be listening for connections.

NOTE: Make sure to use internal IPs in your config

files as specified in the FAQ below. The namenode_IP can be found by running ifconfig on node0. Use the inet IP address which starts with 10 (e.g., 10.10.1.1).

Also you need to add the following in hadoop-3.3.4/etc/hadoop/hdfs-site.xml. Make sure you specify the path by yourself (you should create folders by yourself if needed. For example, create hadoop-3.3.4/data/namenode/ and set it to be the path for namenode dir).

These directories indicate where data for the NameNode and DataNode will be stored respectively. You can create these directories in /mnt/data created earlier. The path in the xml file should be absolute.

Note that the same path needs to exist on all the follower machines which will be running DataNodes.

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/path/to/namenode/dir/</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/path/to/datanode/dir</value>

</property>

</configuration>

You also need to manually specify JAVA_HOME in hadoop-3.3.4/etc/hadoop/hadoop-env.sh.

You can get the path with the command: update-alternatives --display java. Take the value of the current link and remove the trailing /bin/java. For example, a possible link can be /usr/lib/jvm/java-8-openjdk-amd64/jre.

Then, set the JAVA_HOME by replacing export JAVA_HOME=${JAVA_HOME} with export JAVA_HOME=/actual/path.

We also need to edit hadoop-3.3.4/etc/hadoop/workers to add the IP address of all the datanodes.

Once again remember to use the internal IP address!

In our case, we need to add the IP addresses for all the nodes in the cluster, so every node can store data (you can delete the existing localhost line in this file).

Copy the config files with these changes to all the machines. For example:

parallel-scp -h hosts -O StrictHostKeyChecking=no <file> <path to copy file to on remote machine>

Now, we start to format the namenode and start the namenode daemon.

Firstly, on node0, add the absolute paths to hadoop-3.3.4/bin and hadoop-3.3.4/sbin to $PATH (setenv PATH $PATH":<path>"). Then, do:

hdfs namenode -format

start-dfs.sh

This will also start all the datanode daemons.

To check the HDFS status, go to (port 9870 is for hadoop version 3 and higher):

<namenode_IP>:9870/dfshealth.html

You can also use command jps to check whether HDFS is up, there should be a NameNode process that is running on node0, and a DataNode process that is running on each of your VMs.

Now, the HDFS is setup. Type the following to see the available commands in HDFS. You can stop HDFS with stop-dfs.sh.

hdfs dfs -help

Apache Spark

Apache Spark is a powerful open-source unified analytics engine for big data processing, which is built upon its core idea of Resilient Distributed Datasets (RDDs). Spark standalone consists of a set of daemons: a Master daemon, and a set of Worker daemons. Spark applications are coordinated by a SparkContext object which will connect to the Master, responsible for allocating resources across applications. Once connected, Spark acquires Executors on every Worker node in the cluster, which are processes that run computations and store data for your applications. Finally, the application’s tasks are handed to Executors for execution. We will use Spark in standalone mode, which means it doesn’t need to rely on resource management systems like YARN.

Instructions on building a Spark cluster can be found in Spark’s official document. Or you can follow our instructions:

Firstly, download and decompress the Spark binary on each node in the cluster:

wget https://dlcdn.apache.org/spark/spark-3.3.0/spark-3.3.0-bin-hadoop3.tgz

tar zvxf spark-3.3.0-bin-hadoop3.tgz

Similar to HDFS you will need to modify spark-3.3.0-bin-hadoop3/conf/workers to include the IP

address of all the follower machines. Recall that we should be using internal IP addresses!

Spark 3.3 requires Python 3.7 or higher. Thus, we also recommend the following steps.

- on each node run

sudo apt install python3.7 - add

export PYSPARK_PYTHON=/usr/bin/python3.7andexport PYSPARK_DRIVER_PYTHON=/usr/bin/python3.7tospark-3.3.0-bin-hadoop3/conf/spark-env.sh

We also recommend binding Spark to the correct IP address by setting the SPARK_LOCAL_IP and SPARK_MASTER_HOST in the

spark-3.3.0-bin-hadoop3/conf/spark-env.sh at this time. (See the FAQ Network setup).

To start the Spark standalone cluster you can then run the following command on node0:

spark-3.3.0-bin-hadoop3/sbin/start-all.sh

You can go to <node0_IP>:8080 to check the status of the Spark cluster.

To check that the cluster is up and running you can use jps to check that a Master process is running on node0, and a Worker process is running on each of your follower VMs.

To stop all nodes in the cluster, do

spark-3.3.0-bin-hadoop3/sbin/stop-all.sh

Next, setup the properties for the memory and CPU used by Spark applications. Set Spark driver memory to 30GB and executor memory to 30GB. Set executor cores to be 5 and number of cpus per task to be 1. Document about setting properties is here.

Part 2: A simple Spark application

In this part, you will implement a simple Spark application. We have provided some sample data collected by IOT devices at http://pages.cs.wisc.edu/~shivaram/cs744-fa18/assets/export.csv. You need to sort the data firstly by the country code alphabetically (the third column) then by the timestamp (the last column). Here is an example:

Input:

| … | cca2 | … | device_id | … | timestamp |

|---|---|---|---|---|---|

| … | US | … | 1 | … | 1 |

| … | IN | … | 2 | … | 2 |

| … | US | … | 3 | … | 2 |

| … | CN | … | 4 | … | 4 |

| … | US | … | 5 | … | 3 |

| … | IN | … | 6 | … | 1 |

Output:

| … | cca2 | … | device_id | … | timestamp |

|---|---|---|---|---|---|

| … | CN | … | 4 | … | 4 |

| … | IN | … | 6 | … | 1 |

| … | IN | … | 2 | … | 2 |

| … | US | … | 1 | … | 1 |

| … | US | … | 3 | … | 2 |

| … | US | … | 5 | … | 3 |

You should first load the data into HDFS. Then, write a Spark program in Java/Python/Scala to sort the data. Examples of self-contained applications in all of those languages are given here.

We suggest you also go through the Spark SQL Guide and the APIs. Spark DataFrame is a distributed collection of data organized into named columns. It is conceptually equal to a table in a relational database. In our case you will create DataFrames from the data that you load into HDFS. Users may also ask Spark to persist a DataFrame in memory, allowing it to be reused efficiently in subsequent actions (not necessary to do for this part of the assignment, but will need to do it in part 3).

An example of a couple commands if you are using PySpark (Python API that supports Spark) that should be handy.

from pyspark.sql import SparkSession

# The entry point into all functionality in Spark is the SparkSession class.

spark = (SparkSession

.builder

.appName(appName)

.config("some.config.option", "some-value")

.master(master)

.getOrCreate())

# You can read the data from a file into DataFrames

df = spark.read.json("/path/to/a/json/file")

After loading data you can apply DataFrame operations on it.

df.select("name").show()

df.filter(df['age'] > 21).show()

In order to run your Spark application you need to submit it using spark-submit script from Spark’s bin directory. More details on submitting applications could be found here.

Finally, your application should output the results into HDFS in form of csv. It should take in two arguments, the first a path to the input file and the second the path to the output file. Note that if two data tuples have the same country code and timestamp, the order of them does not matter.

Part 3: PageRank

In this part, you will need to implement the PageRank algorithm, which is an algorithm used by search engines like Google to evaluate the quality of links to a webpage. The algorithm can be summarized as follows:

- Set initial rank of each page to be 1.

- On each iteration, each page p contributes to its outgoing neighbors a value of rank(p)/(# of outgoing neighbors of p).

- Update each page’s rank to be 0.15 + 0.85 * (sum of contributions).

- Go to next iteration.

In this assignment, we will run the algorithm on two data sets. Berkeley-Stanford web graph is a smaller data set to help you test your algorithm. And enwiki-20180601-pages-articles (we have already put it to path /proj/uwmadison744-f22-PG0/data-part3/enwiki-pages-articles/) is a larger one to help you better understand the performance of Spark. Each line in the data set consists of a page and one of its neighbors. You need to copy them to HDFS first. In this assignment, always run the algorithm for a total of 10 iterations.

Task 1. Write a Scala/Python/Java Spark application that implements the PageRank algorithm.

Task 2. In order to achieve high parallelism, Spark will split the data into smaller chunks called partitions which are distributed across different nodes in the cluster. Partitions can be changed in several ways. For example, any shuffle operation on a DataFrame (e.g., join()) will result in a change in partitions (customizable via user’s configuration). In addition, one can also decide how to partition data when writing DataFrames back to disk. For this task, add appropriate custom DataFrame/RDD partitioning and see what changes.

Task 3. Persist the appropriate DataFrame/RDD(s) as in-memory objects and see what changes.

Task 4. Kill a Worker process and see the changes. You should trigger the failure to a desired worker VM when the application reaches 25% and 75% of its lifetime:

-

Clear the memory cache using

sudo sh -c "sync; echo 3 > /proc/sys/vm/drop_caches". -

Kill the Worker process.

With respect to Task 1-4, in your report you should report the application completion time. Present/reason about the difference in performance or your own findings, if any. Take a look at the lineage graphs of applications from Spark UI, or investigate the log to find the amount of network/storage read/write bandwidth and the number of tasks for every execution to help you better understand the performance issues. You may also wish to look at CPU usage, memory usage, disk IO, network IO, etc.

Deliverables

You should submit a tar.gz file to Canvas, which consists of a brief report (filename: groupx.pdf) and the code of each task (you will be put into your groups on canvas so only 1 person should need to submit it).

- Include your responses for Tasks 1-4 in the report. This should include details of what you found, the reasons behind your findings and corresponding evidence in terms of screenshots or graphs etc. NOTE: Please create a subsection for each task and clearly indicate your response to each task.

- In the report, add a section detailing the specific contributions of each group member.

- Put the code of each part and each task into separate folders and give them meaningful names. Code should be commented well (that will be worth some percentage of your grade for the assignment, grader will be looking at your code). Also create a README file for each task and provide the instructions about how to run your code.

- Include a

run.shscript for each part of the assignment that can re-execute your code on a similar CloudLab cluster assuming that Hadoop and Spark are present in the same location.

FAQ’s

These are based on the questions which students who previously took the course asked.

Regarding Experiments on Cloudlab The default length of experiments on cloudlab is 16hrs. You can extend a running experiment by another 16hrs if you need more time. Extensions longer than that require approval by cloudlab staff are not recommended. Make sure you create one experiment per group so all groups have access to compute clusters.

Permission denied (Public Keys) Make sure you have copied the SSH keys correctly. Oftentimes copying ends up adding a new line, which leads to this failure. Be careful about this.

Network setup on cloudlab All cloudlab nodes in Wisconsin are connected to

switches, a switch for a control network and a faster switch for an experiment

network. For all your experiments you should use the experiment network. To

use the experiment network use the IP-Addresses which start with 10.*.*.*

instead of IP addresses that start with 172.*.*.* or 128.*.*.*. More

information about cloudlab

network can be found here. You can find the ip-address using ifconfig command. To force

HDFS to use these, you need to configure them in the worker files. For Spark

you may need to export SPARK\_LOCAL\_IP=<IP> or export SPARK_MASTER_HOST=<IP> in the conf/spark-env.sh files with the IP’s to get Spark to listen on the

experiment network. If you do this, make sure to restart Spark so these changes take affect. Also note configs passed via command line are overridden by

spark-default configs. So make sure to make changes in the spark configs.

Using Non-Routable IP’s The profile “cs744-fa22-assignmet1” creates non-routable IP’s. To access instances via internet you will need to use SOCK proxy or ssh tunneling to access the health pages. Detailed steps can be found here. The main things to do are:

- Open a new shell and run something like

ssh -D 8123 -C -N -p 29610 myhost - Where myhost is the hostname for node0 in you experiment from CloudLab list view

- After you do this, change your browser settings to use localhost:8123 as the proxy

- Then you should be able to open the health pages using addresses of the form http://10.10.1.1:8080. Also because of non-routable IP’s you will need to look at list view for your experiment to find the exact port to use for ssh.

Acknowledgements

This assignment uses insights from Professor Aditya Akella’s assignment 1 of CS744 Fall 2017 fall and Professor Mosharaf Chowdhury’s assignment 1 of ECE598 Fall 2017.