Diffusion Models - An Overview

What are Diffusion Models?

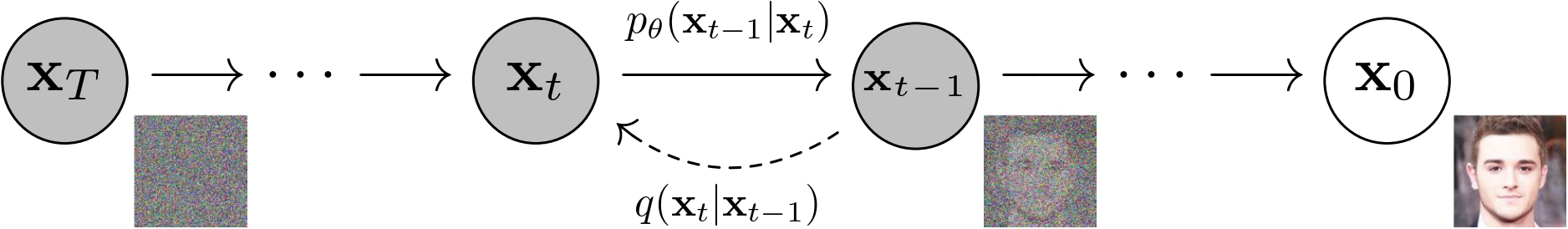

Diffusion Models are a class of generative models that have gained significant attention in artificial intelligence, particularly for their ability to generate high-quality images, audio, and other types of data. They work by defining a diffusion process that gradually transforms data into noise, and then learning the parameters of a model for the reverse process, which denoises samples step by step until they resemble data from the original distribution.

The forward diffusion process

We can formally define the forward diffusion process as a Markov chain that gradually adds Gaussian noise to the data over a finite number of time steps $T$. The purpose of the forward process is to destroy the signal in the data. A single step of the forward process at time step $t \in \{ 1, \ldots, T \}$ can be formalized as \eqref{eq:forward-process-step} and the full forward process as \eqref{eq:full-forward-process}.

\[\begin{equation} \label{eq:forward-process-step} q(x_t \mid x_{t-1}) = \mathcal{N}(x_t; \sqrt{1 - \beta_t} x_{t-1}, \beta_t \mathbb{I}) \end{equation}\]

Here,

- $x_0$ represents the original observed data (e.g., an image).

- $x_t$ is the noisy state of the latent variable at time step $t$.

- $x_T$ is the final noisy state, which is approximately Gaussian noise.

- $q(x_t \mid x_{t-1})$ is the forward diffusion kernel: the conditional probability distribution that defines the transition at time step $t$.

- $\beta_t \in (0, 1)$ is the variance of the noise added at time step $t$; the sequence $\beta_1, \ldots, \beta_T$ forms a fixed variance schedule that controls how much noise is added at each step.

- $\sqrt{1 - \beta_t}$ scales the previous state $x_{t-1}$, determining how much of its signal is retained at time step $t$.
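A single forward step can be sketched in plain Python. This is a minimal illustration, assuming a data point is represented as a flat list of floats and using only the standard library; the name `forward_step` is hypothetical, and real implementations would operate on tensors.

```python
import math
import random


def forward_step(x_prev, beta_t, rng=random):
    """Sample x_t ~ q(x_t | x_{t-1}) = N(sqrt(1 - beta_t) * x_{t-1}, beta_t * I).

    x_prev is a list of floats standing in for a flattened data point.
    Because the covariance is beta_t * I, every coordinate receives its own
    independent Gaussian noise with standard deviation sqrt(beta_t).
    """
    if not 0.0 <= beta_t < 1.0:
        raise ValueError("beta_t must lie in [0, 1)")
    keep = math.sqrt(1.0 - beta_t)  # fraction of x_{t-1} that is retained
    noise_std = math.sqrt(beta_t)   # standard deviation of the added noise
    return [keep * x + noise_std * rng.gauss(0.0, 1.0) for x in x_prev]


x0 = [0.5, -1.0, 2.0]
x1 = forward_step(x0, beta_t=0.02, rng=random.Random(0))
```

With `beta_t = 0` the step leaves the input unchanged, and larger values of `beta_t` shrink the signal and add more noise in the same step.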

The posterior

Let us define the notation $x_{a:b} = \{ x_a, x_{a+1}, \ldots, x_b \}$ to represent the set of variables from time steps $a$ to $b$. Since the forward process is a Markov chain, each $x_t$ depends only on $x_{t-1}$, so the full conditional distribution of the latents given the data factorizes into a product of single-step kernels:

\[\begin{equation} \label{eq:full-forward-process} q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}) \end{equation}\]

In the DDPM literature, $q(x_{1:T} \mid x_0)$ is also referred to as the approximate posterior over the latents $x_{1:T}$.
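The factorization can be made concrete with a small sketch: sample a trajectory by applying the single-step kernel $T$ times, then score it by summing the per-step Gaussian log-densities. The toy three-step schedule and the function names are illustrative assumptions, not part of the original text.

```python
import math
import random


def gaussian_log_pdf(x, mean, var):
    """Log density of a 1-D Gaussian N(mean, var) evaluated at x (var > 0)."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)


def sample_trajectory(x0, betas, rng=random):
    """Run the forward Markov chain and return [x_0, x_1, ..., x_T]."""
    traj = [list(x0)]
    for beta in betas:
        prev = traj[-1]
        traj.append([math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
                     for x in prev])
    return traj


def log_q_trajectory(traj, betas):
    """log q(x_{1:T} | x_0) = sum over t of log q(x_t | x_{t-1}).

    The Markov property means each factor only looks at the previous state,
    and the isotropic covariance beta_t * I lets each coordinate factorize too.
    Every beta must be strictly positive so that the log density is defined.
    """
    total = 0.0
    for t, beta in enumerate(betas, start=1):
        mean_scale = math.sqrt(1.0 - beta)
        for x_t, x_prev in zip(traj[t], traj[t - 1]):
            total += gaussian_log_pdf(x_t, mean_scale * x_prev, beta)
    return total


betas = [0.1, 0.2, 0.3]  # toy schedule with T = 3
traj = sample_trajectory([1.0, -0.5], betas, rng=random.Random(42))
log_prob = log_q_trajectory(traj, betas)
```

Scoring a whole trajectory therefore never requires a joint density over all time steps at once; it is a sum of $T$ independent single-step terms.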

Denoising Diffusion Probabilistic Models (DDPM)

A DDPM makes use of two Markov chains: a forward chain that perturbs data into noise and a reverse chain that converts this noise back into data. The forward process is typically hand-crafted with the goal of transforming any data distribution into a simple prior distribution (e.g., a standard Gaussian). The reverse process undoes this transformation by learning transition kernels parameterized by deep neural networks. New data points are generated by first sampling from the prior distribution and then sampling step by step through the learned reverse Markov chain.
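The generation procedure described above (sample the prior, then step backwards through the reverse chain) can be sketched as a loop. Here `reverse_kernel` is a hypothetical stand-in for the trained network, assumed to return the mean and standard deviation of a Gaussian $p_\theta(x_{t-1} \mid x_t)$; how that network is parameterized and trained is outside the scope of this sketch, and `toy_kernel` is a placeholder rather than a learned model.

```python
import random


def sample_reverse_chain(reverse_kernel, dim, T, rng=random):
    """Generate one sample by running a reverse Markov chain from t = T to t = 1.

    reverse_kernel(x_t, t) is a hypothetical learned kernel: it must return
    (mean, std), where mean is a list of length dim and std is a float >= 0,
    describing the Gaussian p_theta(x_{t-1} | x_t).
    """
    # 1. Start from the simple prior, here a standard Gaussian N(0, I).
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    # 2. Walk the chain backwards, sampling x_{t-1} given x_t at every step.
    for t in range(T, 0, -1):
        mean, std = reverse_kernel(x, t)
        x = [m + std * rng.gauss(0.0, 1.0) for m in mean]
    return x  # plays the role of a generated x_0


def toy_kernel(x_t, t):
    """Placeholder kernel that shrinks towards zero; no noise on the last step."""
    return [0.9 * v for v in x_t], (0.1 if t > 1 else 0.0)


sample = sample_reverse_chain(toy_kernel, dim=4, T=10, rng=random.Random(0))
```

Note that generation is inherently sequential: producing one sample requires $T$ evaluations of the reverse kernel.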

Formally, given a data point $x_0 \sim q(x_0)$ drawn from the data distribution, the forward Markov process generates a sequence of random variables $x_1, x_2, \ldots, x_T$ with the transition kernel $q(x_t \mid x_{t-1})$, so the joint distribution factorizes as

\[\begin{equation} q(x_1, \ldots, x_T \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}) \end{equation}\]

In DDPMs, the transition kernel $q(x_t \mid x_{t-1})$ is hand-designed to incrementally transform the data distribution $q(x_0)$ into a tractable prior distribution. Typically, this is done through Gaussian perturbation, and the most common choice for the transition kernel is

\[\begin{equation} q(x_t \mid x_{t-1}) = \mathcal{N}(x_t; \sqrt{1 - \beta_t} x_{t-1}, \beta_t \mathbb{I}), \end{equation}\]

where $\beta_t \in (0, 1)$ is a hyperparameter chosen before model training. This kernel significantly simplifies our discussion: because composing linear-Gaussian transitions yields another Gaussian, we can marginalize out the intermediate steps of the forward process and sample $x_t$ directly from $x_0$.
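As an illustration of choosing $\beta_t$ ahead of training, a simple option is a linear ramp. The defaults below ($T = 1000$, $\beta$ rising from $10^{-4}$ to $0.02$) follow the linear schedule reported in the original DDPM paper by Ho et al. (2020); treat them as one example setting rather than a requirement. The helper name `linear_beta_schedule` is hypothetical.

```python
def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Return [beta_1, ..., beta_T] spaced linearly from beta_start to beta_end."""
    if T == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


betas = linear_beta_schedule()
```

Each individual $\beta_t$ is small, so a single step perturbs $x_{t-1}$ only slightly; it is the accumulation over many steps that destroys the signal.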

If we define $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$, we can express the distribution of $x_t$ given $x_0$ as

\[\begin{equation} q(x_t \mid x_0) = \mathcal{N}(x_t; \sqrt{\bar{\alpha}_t} x_0, (1 - \bar{\alpha}_t) \mathbb{I}) \end{equation}\]

A quick note

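A quick sidenote to explain this result. Using the reparameterization trick, each forward step can be written as $x_t = \sqrt{\alpha_t}\, x_{t-1} + \sqrt{1 - \alpha_t}\, \epsilon_{t-1}$, where the noise terms $\epsilon_{t-1} \sim \mathcal{N}(0, \mathbb{I})$ are drawn independently at every step. Substituting the same expression for $x_{t-1}$ gives

\[\begin{aligned} x_t &= \sqrt{\alpha_t \alpha_{t-1}}\, x_{t-2} + \sqrt{\alpha_t (1 - \alpha_{t-1})}\, \epsilon_{t-2} + \sqrt{1 - \alpha_t}\, \epsilon_{t-1} \\ &= \sqrt{\alpha_t \alpha_{t-1}}\, x_{t-2} + \sqrt{1 - \alpha_t \alpha_{t-1}}\, \bar{\epsilon}_{t-2}, \qquad \bar{\epsilon}_{t-2} \sim \mathcal{N}(0, \mathbb{I}), \end{aligned}\]

because the sum of two independent zero-mean Gaussians with variances $\alpha_t (1 - \alpha_{t-1})$ and $1 - \alpha_t$ is a zero-mean Gaussian with variance $\alpha_t (1 - \alpha_{t-1}) + 1 - \alpha_t = 1 - \alpha_t \alpha_{t-1}$. Repeating this argument all the way down to $x_0$ gives $x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$ with $\epsilon \sim \mathcal{N}(0, \mathbb{I})$, which is exactly $q(x_t \mid x_0) = \mathcal{N}(x_t; \sqrt{\bar{\alpha}_t} x_0, (1 - \bar{\alpha}_t) \mathbb{I})$.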
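The closed form can also be checked numerically. The sketch below, in plain Python with only the standard library, draws scalar samples of $x_t$ in two ways (once with the one-shot formula and once by simulating every forward step) and compares their empirical mean and variance with $\sqrt{\bar{\alpha}_t}\, x_0$ and $1 - \bar{\alpha}_t$. The toy schedule, the scalar data point, and the function names `alpha_bar`, `q_sample`, and `unrolled_sample` are illustrative assumptions.

```python
import math
import random


def alpha_bar(betas, t):
    """alpha_bar_t = prod_{s=1}^{t} (1 - beta_s); t is 1-indexed as in the text."""
    return math.prod(1.0 - b for b in betas[:t])


def q_sample(x0, t, betas, rng=random):
    """One-shot draw of x_t ~ q(x_t | x_0) for a scalar x0 via the closed form."""
    a_bar = alpha_bar(betas, t)
    return math.sqrt(a_bar) * x0 + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)


def unrolled_sample(x0, t, betas, rng=random):
    """Draw x_t by simulating x_1, ..., x_t one forward step at a time."""
    x = x0
    for beta in betas[:t]:
        x = math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
    return x


def mean_var(samples):
    """Sample mean and unbiased sample variance."""
    n = len(samples)
    m = sum(samples) / n
    return m, sum((s - m) ** 2 for s in samples) / (n - 1)


betas = [0.05, 0.1, 0.2, 0.3]  # toy schedule with T = 4
x0, t, n = 1.5, 4, 50_000
rng = random.Random(123)
closed = mean_var([q_sample(x0, t, betas, rng) for _ in range(n)])
unrolled = mean_var([unrolled_sample(x0, t, betas, rng) for _ in range(n)])
a_bar = alpha_bar(betas, t)
print("theory   :", math.sqrt(a_bar) * x0, 1.0 - a_bar)
print("closed   :", closed)
print("unrolled :", unrolled)
```

Both estimates should agree with the theoretical values up to Monte Carlo error. The practical payoff is that a randomly chosen time step can be noised in a single operation rather than by simulating $t$ sequential steps.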
