|

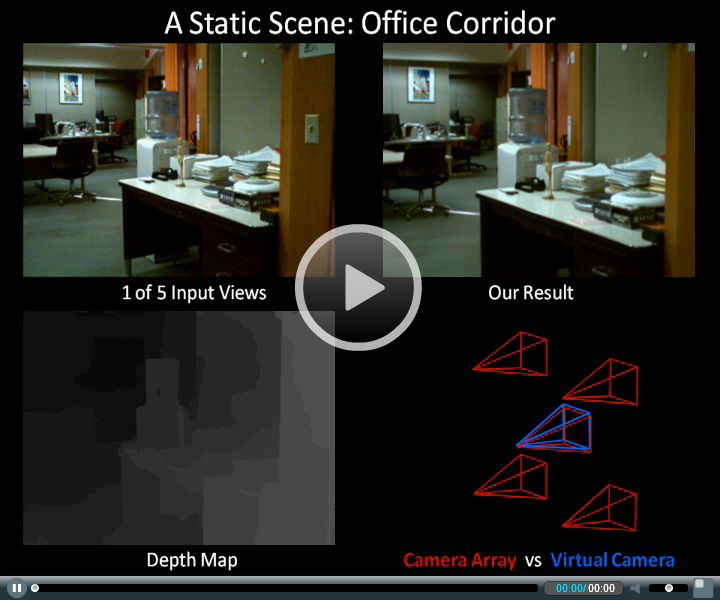

The following four datasets contain five-view PNG image sequences. The frame rate is 25 fps, and the image size is 480 x 360. The images have been corrected to remove radial distortion. Intrinsic and extrinsic camera parameters for each of the five cameras are available here.

Clarification (added March 13, 2011): due to image quality issues in one of the corner cameras, the images provided (and those used to generate our demos) were captured using the following five cameras: topmost center, leftmost center, center, rightmost center, and bottommost center. These replace the corner cameras indicated in Figure 1 of the paper.

Water cooler dataset, 350 frames [ZIP 421.3 MB]

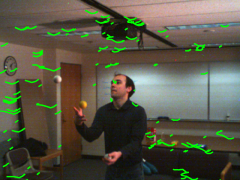

Solo juggler dataset, 530 frames [ZIP 689.3 MB]

Video game dataset, 550 frames [ZIP 714.8 MB]

Crowd dataset, 260 frames [ZIP 294.4 MB]
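As a quick sanity check after downloading, the frame counts and the 25 fps frame rate give the expected duration of each sequence. The sketch below assumes that each listed frame count is per view, so that a dataset holds frames x 5 images in total. The page does not state this explicitly, and the `summarize` helper is a hypothetical name introduced only for illustration.

```python
FPS = 25    # frame rate stated on this page
VIEWS = 5   # five-view sequences

# Frame counts as listed for each dataset
FRAMES = {
    "Water cooler": 350,
    "Solo juggler": 530,
    "Video game": 550,
    "Crowd": 260,
}


def summarize(frame_counts, fps=FPS, views=VIEWS):
    """Return {name: (duration_seconds, total_images)}.

    Assumes frame counts are per view (not confirmed by the page).
    """
    return {name: (n / fps, n * views) for name, n in frame_counts.items()}


if __name__ == "__main__":
    for name, (seconds, images) in summarize(FRAMES).items():
        print(f"{name}: {seconds:.1f} s, {images} images expected")
```

Comparing the expected image count against the number of PNG files actually extracted is a cheap way to detect an incomplete download, provided the per-view assumption holds.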

|

3D stereoscopic video stabilization, Demo at ICCV 2011.
