The hometown cluster is a small-scale, flexible testbed that hosts many types of programmable network hardware, including SmartNICs, RMT switches, and networking accelerators. We have been building it for several years through incremental infrastructure updates.

1. Hardware Specification

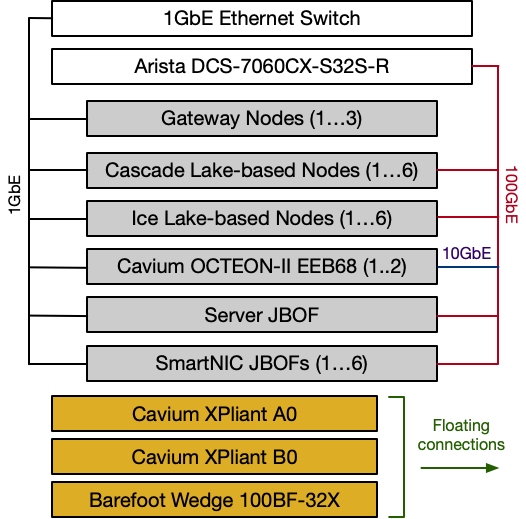

The cluster consists of the following compute/storage nodes and switches.

- 3x Gateway Nodes: Each node is a Dell T620 server with two Intel E5-2620 processors (2.0GHz), 32GB DDR3 memory, an Intel 1GbE dual-port I350 NIC, and two 2TB SATA hard drives.

- 6x Cascade Lake-based Compute Nodes: Each node is a 2U Supermicro server (Ultra 6029U-TR4) with two Intel Xeon Gold 5218 processors (2.3GHz), 128GB DDR4 memory, an Intel 1GbE dual-port I350 NIC, an Intel 960GB D3-S4510 SATA SSD, and two Seagate Exos 7E8 1.0TB SATA hard drives. Each server is also equipped with one Nvidia/Mellanox 100GbE dual-port CX5 NIC and two of the following four SmartNICs: Marvell/LiquidIO CN2360 25GbE dual-port, Broadcom PS225 25GbE dual-port, Nvidia/Mellanox BlueField-1 25GbE dual-port, and Nvidia/Mellanox BlueField-2 100GbE dual-port.

- 6x Ice Lake-based Compute Nodes: Each node is a 2U Supermicro server (Ultra SuperServer 220U-TNR) with two Intel Xeon Gold 6346 processors (3.1GHz), 384GB DDR4 memory, 512GB of Intel Optane Persistent Memory Modules, an Intel 1GbE dual-port I350-T2V2 NIC, an Intel 480GB D3-S4510 SATA SSD, and a Seagate Exos 7E2000 2.0TB SATA hard drive. Each server is also equipped with one Nvidia/Mellanox 100GbE dual-port CX5 NIC and one of the following three SmartNICs: Marvell LiquidIO3 CN3380 50GbE dual-port, Nvidia/Mellanox BlueField-2 100GbE dual-port, or Nvidia/Mellanox BlueField-3 200GbE single-port.

- 1x Server JBOF: A 2U Supermicro server with two Intel Xeon E5-2620v4 processors (2.1GHz), 128GB DDR4 memory, an Intel 1GbE dual-port I350 NIC, an Intel 240GB DC S4600 SATA SSD, one Mellanox 100GbE dual-port CX5 NIC, and eight Samsung 960GB DCT983 NVMe SSDs.

- 6x SmartNIC JBOFs: Each storage node contains a Broadcom Stingray PS1100R 100GbE dual-port SmartNIC (with an 8-core ARM A72 processor running at 3.0GHz and 8GB of memory), a standalone PCIe carrier board, and four Samsung 960GB DCT983 NVMe SSDs.

- 2x Cavium OCTEON-II EEB68 Boards: We use them as in-network accelerators. Each board has one Cavium CN6880 processor (32 cnMIPS64 cores running at 1.2GHz), 2GB DDR3 memory, two 1GbE management/debug ports, and four 10GbE XAUI ports.

- 1x Arista DCS-7060CX-32S-R Switch: It has 32x 100GbE ports and 2x 10GbE ports.

- 3x Programmable Switches: a Barefoot Wedge 100BF-32X switch with 32x 100GbE ports, a Cavium XPliant A0 switch with 32x 10/25GbE ports, and a Cavium XPliant B0 switch with 32x 40GbE ports.

- Cables: The cluster uses SFP, SFP28, QSFP28, and QSFP28-to-4xSFP28 breakout AOC cables.
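As a quick cross-check of the hardware list, the aggregate capacity can be tallied in a few lines of Python. This is a minimal sketch: the `NodeGroup` type and `totals` helper are hypothetical names, the per-node figures are transcribed from the list above, and it counts only x86 host DRAM (excluding Optane PMem and SmartNIC memory) plus raw NVMe capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """Hypothetical record for one group of identical nodes."""
    name: str
    count: int            # number of nodes in the group
    dram_gb: int          # x86 host DRAM per node, in GB
    nvme_ssds: int = 0    # NVMe SSDs per node
    nvme_ssd_gb: int = 0  # capacity of each NVMe SSD, in GB


# Figures transcribed from the hardware list; Cavium boards and switches omitted.
CLUSTER = [
    NodeGroup("Gateway", 3, 32),
    NodeGroup("Cascade Lake compute", 6, 128),
    NodeGroup("Ice Lake compute", 6, 384),  # Optane PMem not counted as DRAM
    NodeGroup("Server JBOF", 1, 128, nvme_ssds=8, nvme_ssd_gb=960),
    # SmartNIC JBOFs have no x86 host, so no host DRAM is counted here.
    NodeGroup("SmartNIC JBOF", 6, 0, nvme_ssds=4, nvme_ssd_gb=960),
]


def totals(groups):
    """Return (node count, total host DRAM in GB, total raw NVMe in GB)."""
    nodes = sum(g.count for g in groups)
    dram = sum(g.count * g.dram_gb for g in groups)
    nvme = sum(g.count * g.nvme_ssds * g.nvme_ssd_gb for g in groups)
    return nodes, dram, nvme


if __name__ == "__main__":
    nodes, dram, nvme = totals(CLUSTER)
    print(f"{nodes} nodes, {dram} GB host DRAM, {nvme} GB raw NVMe")
```

Running it prints `22 nodes, 3296 GB host DRAM, 30720 GB raw NVMe`. Keeping the inventory as data makes it easy to update the totals as the cluster grows.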

2. System Architecture

The figure presents an overview of our cluster, which spans two racks. The 1GbE Ethernet switch is provided by the department for general network connectivity (e.g., SSH and IPMI). The three gateway nodes are assigned public IPs. We use the Arista switch as the default switch for data traffic. Except for the Cavium OCTEON-II boards, which use 10GbE links, all other devices are connected via 100GbE links. The three programmable switches have floating connections to the CX5 NICs and SmartNICs, which we can use to realize a simple two-layer FatTree topology.
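The wiring of the two-layer FatTree is not specified here, so the following is a sketch under an assumed standard leaf-spine (folded Clos) layout: every leaf switch links to every spine switch, and each host attaches to exactly one leaf. The switch names (`spine0`, `leaf0`, ...) and the helpers `leaf_spine` and `shortest_paths` are hypothetical; which physical switch plays which role is left open. The code models the topology as a graph and uses BFS to count equal-cost shortest paths between two hosts.

```python
from collections import deque
from itertools import product


def leaf_spine(spines, leaves, hosts_per_leaf):
    """Build an undirected two-layer leaf-spine graph as adjacency sets."""
    adj = {}

    def link(a, b):
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    for spine, leaf in product(spines, leaves):
        link(spine, leaf)  # every leaf connects to every spine
    for leaf in leaves:
        for i in range(hosts_per_leaf):
            link(leaf, f"{leaf}-host{i}")  # each host hangs off one leaf
    return adj


def shortest_paths(adj, src, dst):
    """Return (hop count, number of distinct shortest paths) via BFS.

    Assumes dst is reachable from src.
    """
    dist = {src: 0}
    count = {src: 1}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                count[v] = count[u]
                queue.append(v)
            elif dist[v] == dist[u] + 1:
                count[v] += count[u]  # another equal-cost predecessor
    return dist[dst], count[dst]


if __name__ == "__main__":
    # Hypothetical role assignment: two spines, three leaves, two hosts per leaf.
    adj = leaf_spine(["spine0", "spine1"], ["leaf0", "leaf1", "leaf2"], 2)
    print(shortest_paths(adj, "leaf0-host0", "leaf2-host1"))
```

With two spines, this prints `(4, 2)`: a host-to-host path across leaves takes four hops, and there is one equal-cost path per spine. That path diversity is what lets a FatTree spread traffic (e.g., via ECMP) as spines are added.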

3. Example Use Cases

The hometown cluster has been used for three types of research projects: (1) building in-network accelerated solutions, (2) developing distributed systems on fast networks, and (3) exploring storage disaggregation. The cluster remains in active use.