Prev: L14, Next: L16

Course Links: Canvas, Piazza, TopHat (212925)

Zoom Links: MW 4:00, TR 1:00, TR 2:30.

# Midterm Information

📗 October 25, from 5:45 to 7:15. All multiple choice.

📗 Room assignments (by last name):

➩ A-G (expected 148 students): (1025 W. Johnson St.) Educational Science Room 204

➩ H-K (expected 103 students): (250 N. Mills St.) Noland Hall Room 132

➩ L-S (expected 190 students): (1202 W. Johnson St.) Psychology Room 105

➩ T-Z (expected 97 students): (1202 W. Johnson St.) Psychology Room 113

📗 Please bring a number 2 pencil and your student ID (Wiscard) to the exam. Your student ID is required to turn in your exam.

📗 A note sheet (1 page 8.5'' x 11'', double-sided, typed or handwritten) is allowed.

📗 A calculator is allowed but not required. The calculator should not be an app on your phone or tablet or any device that can connect to the Internet.

📗 If you have requested McBurney accommodation, please email one of the instructors before Monday, October 21.

📗 The midterm covers lectures 1 through 13: everything in the lecture notes except for the blocks that are marked "optional".

📗 You can find selected past exams on Canvas under Files and here: Link. Some final exam questions may also be relevant, since the order of the topics is different every year.

📗 There will be review sessions on Oct 19 (Saturday) and Oct 20 (Sunday) from 12:00 to 2:00 on Zoom (recorded) to discuss selected past exam questions: Zoom.

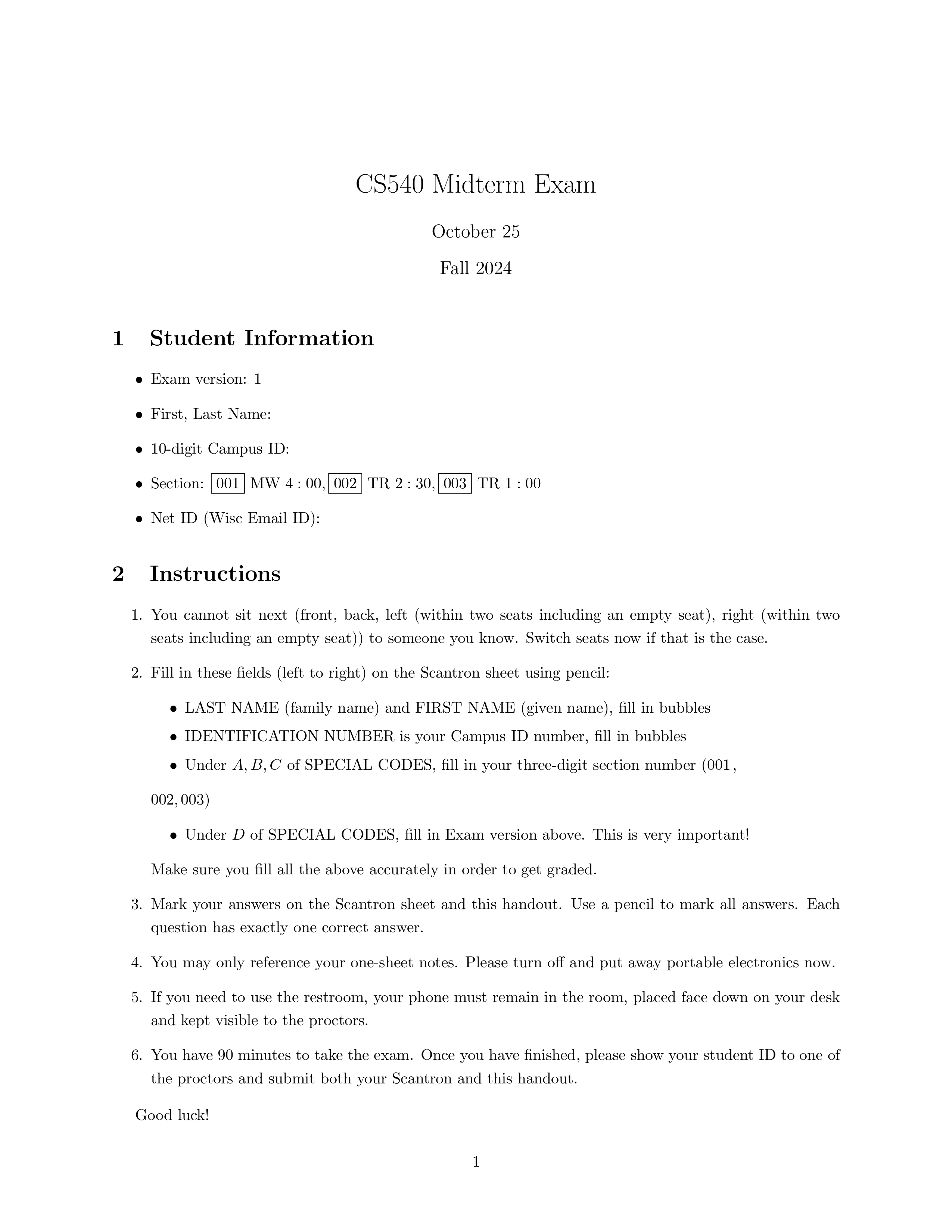

📗 The cover page of the exam will look like:

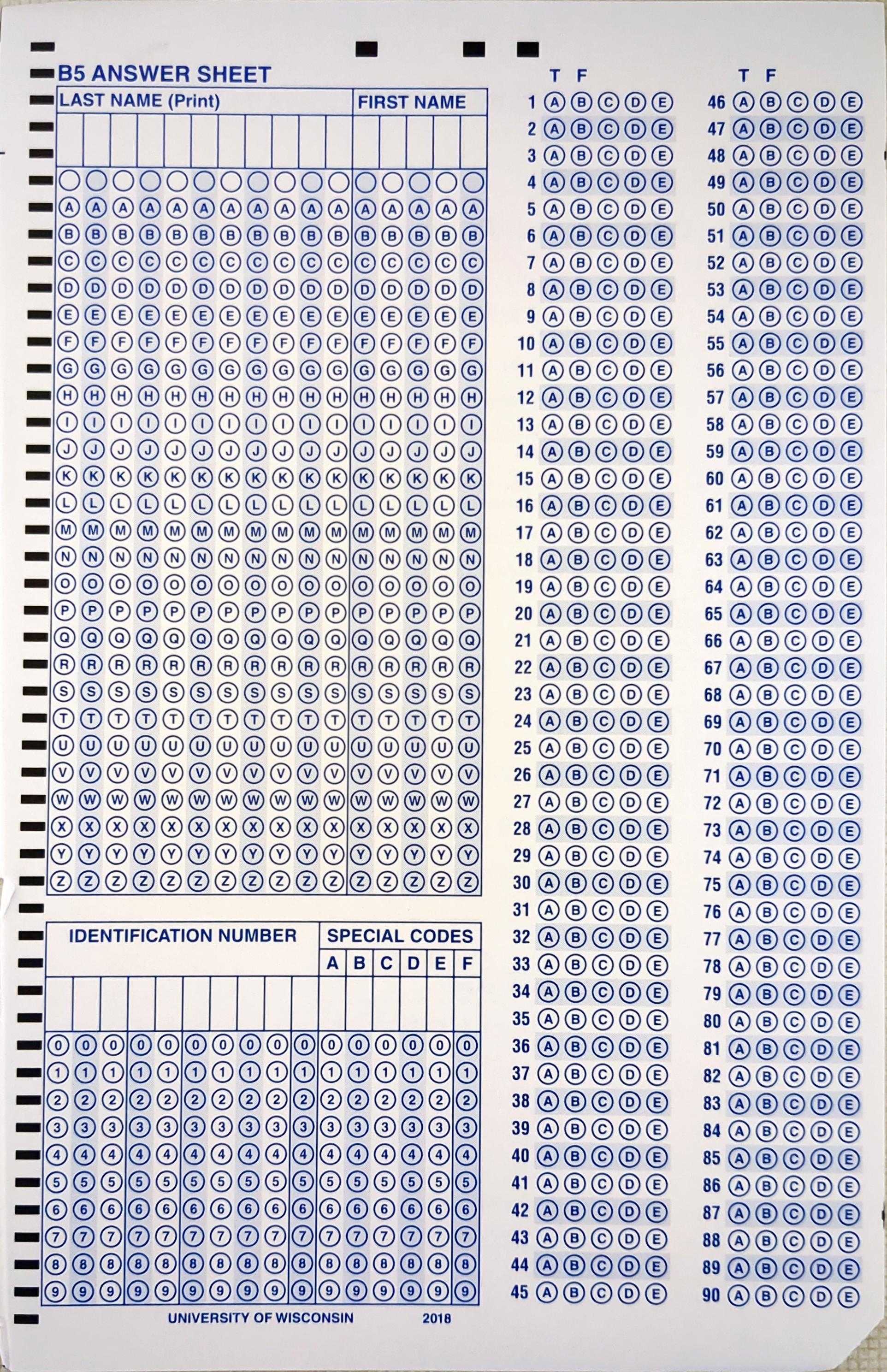

📗 The scantron sheet will look like:

# Coordination Game

📗 Some numbers and functions will change on the actual midterm.

➩ Q27: Consider a stochastic gradient descent step of a logistic regression with square loss and \(L_{2}\) regularization. The weight is \(w = 1\), the bias is \(b = -1\), the randomly selected item for this iteration has \(x_{i} = 1\) and \(y_{i} = 1\) (the activation value of this item is \(a_{i} = \dfrac{1}{2}\)), the regularization parameter is \(\lambda = 1\), and the learning rate is \(\alpha = 1\). What is the weight \(w\) after this stochastic gradient descent step? The loss function is \(C = \dfrac{1}{2} \left(g\left(w x_{i} + b\right) - y_{i}\right)^{2} + \lambda \left(w^{2} + b^{2}\right)\) where \(g\left(z\right) = \dfrac{1}{1 + e^{-z}}\) is the sigmoid activation function. [Note: on the exam, another activation function might be used.]
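One way to check the arithmetic for this kind of question is to differentiate the stated loss with the chain rule, \(\dfrac{\partial C}{\partial w} = \left(a_{i} - y_{i}\right) a_{i} \left(1 - a_{i}\right) x_{i} + 2 \lambda w\), and apply \(w \leftarrow w - \alpha \dfrac{\partial C}{\partial w}\). The sketch below is only an illustration for the numbers in this sample question; the function names are hypothetical, and the gradient formula applies to the sigmoid activation only.

```python
import math

def sigmoid(z):
    """Sigmoid activation g(z) = 1 / (1 + e^{-z})."""
    return 1.0 / (1.0 + math.exp(-z))

def sgd_step_w(w, b, x, y, lam, alpha):
    """One SGD update of w for C = 0.5 * (g(w x + b) - y)^2 + lam * (w^2 + b^2).

    Hypothetical helper for this sample question (sigmoid activation assumed).
    """
    a = sigmoid(w * x + b)
    # Chain rule: square loss term, then sigmoid derivative a (1 - a), then x,
    # plus the derivative of the L2 penalty lam * w^2.
    grad_w = (a - y) * a * (1.0 - a) * x + 2.0 * lam * w
    return w - alpha * grad_w

w_new = sgd_step_w(w=1.0, b=-1.0, x=1.0, y=1.0, lam=1.0, alpha=1.0)
print(w_new)  # -0.875
```

With these numbers the gradient is \(-\dfrac{1}{8} + 2 = \dfrac{15}{8}\), so the updated weight is \(1 - \dfrac{15}{8} = -\dfrac{7}{8}\).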

➩ Q25: For some loss function \(C = C_{1} + C_{2}\) with \(\dfrac{\partial C_{i}}{\partial w} = x_{i} w^{2}\), a training set (after shuffling) of two items with \(x_{1} = 1\), \(x_{2} = 2\) is used in one epoch of stochastic gradient descent to minimize \(C\). The weight at the beginning of this epoch is \(w = 1\). What is the weight after this epoch (two stochastic gradient descent steps, \(x_{1}\) first, \(x_{2}\) next)? The learning rate is \(\alpha = 1\).
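The two updates in this question can be traced by hand or with a short loop. The sketch below assumes the per-item gradient \(x_{i} w^{2}\) given in the question and uses a hypothetical helper name; each step uses the weight produced by the previous step.

```python
def sgd_epoch(w, xs, alpha):
    """Run one epoch of SGD where item i contributes the gradient x_i * w^2.

    Hypothetical helper for this sample question; items are visited in order.
    """
    for x in xs:
        grad = x * w ** 2      # per-item gradient at the current weight
        w = w - alpha * grad   # update before moving to the next item
    return w

w_after = sgd_epoch(w=1.0, xs=[1.0, 2.0], alpha=1.0)
print(w_after)  # 0.0
```

The first step gives \(1 - 1 \cdot 1^{2} = 0\), and the second step gives \(0 - 2 \cdot 0^{2} = 0\).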

➩ Q23: In a three-layer fully connected neural network, let \(a^{\left(1\right)}_{i1}\) be the first sigmoid activation unit in the first hidden layer and \(a^{\left(2\right)}_{i2}\) be the second tanh activation unit in the second hidden layer. What is \(\dfrac{\partial a^{\left(2\right)}_{i2}}{\partial a^{\left(1\right)}_{i1}}\)? For tanh activation function \(g\left(z\right)\), the derivative is \(g'\left(z\right) = 1 - g\left(z\right)^{2}\), and for sigmoid activation function \(g\left(z\right)\), the derivative is \(g'\left(z\right) = g\left(z\right)\left(1 - g\left(z\right)\right)\). [Note: on the exam, choices are expressions written in terms of \(a^{\left(l\right)}_{ij}\) and \(w^{\left(l\right)}_{jk}\).]
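A candidate expression for this derivative can be sanity-checked numerically. The sketch below assumes the indexing convention that \(w^{\left(2\right)}_{jk}\) connects unit \(j\) of the first hidden layer to unit \(k\) of the second hidden layer, so that the chain rule gives \(\left(1 - \left(a^{\left(2\right)}_{i2}\right)^{2}\right) w^{\left(2\right)}_{12}\). The layer sizes, weights, and activation values are made-up illustration values. The sigmoid derivative does not appear because the derivative is taken with respect to the activation \(a^{\left(1\right)}_{i1}\) itself, not with respect to its input.

```python
import math

def layer2_unit2(a1, w2, b2):
    """Second tanh unit of hidden layer 2, given layer-1 activations a1.

    w2[j][k] is assumed to connect layer-1 unit j to layer-2 unit k (0-based).
    """
    z = sum(a1[j] * w2[j][1] for j in range(len(a1))) + b2[1]
    return math.tanh(z)

# Made-up values, for illustration only.
a1 = [0.3, 0.7]                   # layer-1 (sigmoid) activations
w2 = [[0.5, -1.2], [0.8, 0.4]]    # layer-2 weights
b2 = [0.1, -0.2]                  # layer-2 biases

a22 = layer2_unit2(a1, w2, b2)
analytic = (1.0 - a22 ** 2) * w2[0][1]   # tanh'(z) times w^{(2)}_{12}

# Central finite difference with respect to the first layer-1 activation.
eps = 1e-6
numeric = (layer2_unit2([a1[0] + eps, a1[1]], w2, b2)
           - layer2_unit2([a1[0] - eps, a1[1]], w2, b2)) / (2 * eps)

print(abs(analytic - numeric) < 1e-8)  # True
```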

➩ Q20: Which of the following training items would violate the hard margin support vector machine constraints with \(w = 1, b = 1\)? The training set is \(\left\{\left(x_{i}, y_{i}\right)\right\}_{i=1}^{5} = \left\{\left(-2, 0\right), \left(-1, 0\right), \left(0, 1\right), \left(1, 1\right), \left(2, 1\right)\right\}\). The support vector machine classifies items with \(w x_{i} + b \geq 0\) as class \(1\).
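A quick way to test each item is to map the labels \(y_{i} \in \left\{0, 1\right\}\) to \(\left\{-1, +1\right\}\) and check the usual hard margin constraint \(\left(2 y_{i} - 1\right)\left(w x_{i} + b\right) \geq 1\). This form of the constraint is an assumption here, since the question does not write it out; the helper name is hypothetical.

```python
def violates_hard_margin(x, y, w, b):
    """Return True if (x, y) breaks the constraint (2y - 1)(w x + b) >= 1.

    Assumes labels y in {0, 1}, mapped to {-1, +1} by 2y - 1.
    """
    label = 2 * y - 1
    return label * (w * x + b) < 1

data = [(-2, 0), (-1, 0), (0, 1), (1, 1), (2, 1)]
violations = [(x, y) for x, y in data if violates_hard_margin(x, y, w=1, b=1)]
print(violations)  # [(-1, 0)]
```

Under this assumption, the item \(\left(-1, 0\right)\) lies exactly on the decision boundary (\(w x_{i} + b = 0\)), so it is inside the margin, while the items at \(x = -2\) and \(x = 0\) sit exactly on the margin and satisfy the constraint.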

➩ Q6: The first two principal components based on a training set with \(3\) features are \(\begin{bmatrix} \dfrac{-1}{\sqrt{2}} \\ \dfrac{1}{\sqrt{2}} \\ 0 \end{bmatrix}\) and \(\begin{bmatrix} \dfrac{1}{\sqrt{2}} \\ \dfrac{1}{\sqrt{2}} \\ 0 \end{bmatrix}\). The PCA weights (or PCA features) of a training item are \(\begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix}\). What are the original features of this item?
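Reconstructing the original features amounts to summing the principal components weighted by the PCA features. The sketch below makes two assumptions that the question does not state: the third principal component is \(\begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}\) (the unit vector orthogonal to the first two, with the sign chosen as positive), and no mean is added back after the projection.

```python
import math

s = 1.0 / math.sqrt(2.0)
pcs = [
    [-s, s, 0.0],      # first principal component (from the question)
    [s, s, 0.0],       # second principal component (from the question)
    [0.0, 0.0, 1.0],   # ASSUMED third component, orthogonal to the first two
]
weights = [1.0, 2.0, 3.0]  # PCA features of the item

# Original features = sum over k of weights[k] * pcs[k], per coordinate d.
original = [sum(weights[k] * pcs[k][d] for k in range(3)) for d in range(3)]
print(original)
```

Under these assumptions the result is \(\begin{bmatrix} \dfrac{1}{\sqrt{2}} \\ \dfrac{3}{\sqrt{2}} \\ 3 \end{bmatrix}\); choosing the opposite sign for the third component would flip the sign of the last entry.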

➩ Q4: Consider the problem of classifying whether a painting is art (A) or trash (T) based on the price (P), which can be high (H), medium (M), or low (L). A naive Bayes model trained on a training set has the prior probability \(\mathbb{P}\left\{A\right\} = \dfrac{1}{2}\) and conditional probabilities \(\mathbb{P}\left\{H | A\right\} = \dfrac{1}{2}, \mathbb{P}\left\{L | A\right\} = 0\), \(\mathbb{P}\left\{H | T\right\} = 0, \mathbb{P}\left\{L | T\right\} = \dfrac{1}{2}\). Now given a new painting with medium price, what is the conditional probability that it is art (that is, \(\mathbb{P}\left\{A | M\right\}\))?
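The missing conditional probabilities follow from the fact that the three price levels must sum to one within each class, and Bayes rule then gives the posterior. The sketch below uses exact fractions; the variable names are hypothetical and the numbers are those of this sample question.

```python
from fractions import Fraction as F

prior_a = F(1, 2)
prior_t = 1 - prior_a

# The price probabilities within each class sum to one: P(M|.) = 1 - P(H|.) - P(L|.)
p_m_given_a = 1 - F(1, 2) - F(0)
p_m_given_t = 1 - F(0) - F(1, 2)

# Bayes rule: P(A|M) = P(M|A) P(A) / (P(M|A) P(A) + P(M|T) P(T))
posterior_a = (p_m_given_a * prior_a) / (p_m_given_a * prior_a + p_m_given_t * prior_t)
print(posterior_a)  # 1/2
```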

Last Updated: November 21, 2025 at 11:39 PM