Prev: L15, Next: L17

# Unsupervised Learning

📗 Supervised learning: \(\left(x_{1}, y_{1}\right), \left(x_{2}, y_{2}\right), ..., \left(x_{n}, y_{n}\right)\).

📗 Unsupervised learning: \(\left(x_{1}\right), \left(x_{2}\right), ..., \left(x_{n}\right)\).

➩ Clustering: separates items into groups.

➩ Novelty (outlier) detection: finds items that are different (two groups).

➩ Dimensionality reduction: represents each item by a lower dimensional feature vector while maintaining key characteristics.

📗 Unsupervised learning applications:

➩ Google News.

➩ Google Photos.

➩ Image segmentation.

➩ Text processing.

➩ Data visualization.

➩ Efficient storage.

➩ Noise removal.

# High Dimensional Data

📗 Text and image data are usually high dimensional: Link.

➩ The number of features in a bag of words representation is the size of the vocabulary.

➩ The number of pixel intensity features is the number of pixels in each image.
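
To make the feature count concrete, here is a minimal sketch of a bag of words representation. The helper name `bag_of_words`, the whitespace tokenization, and the two toy documents are assumptions made for illustration only.

```python
from collections import Counter


def bag_of_words(documents):
    """Return (vocabulary, count vectors) for whitespace-tokenized documents."""
    tokenized = [doc.lower().split() for doc in documents]
    # The vocabulary is the sorted set of all distinct words.
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # One feature per vocabulary word: how often it occurs in the document.
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


docs = ["the cat sat", "the dog sat on the mat"]  # made-up documents
vocab, X = bag_of_words(docs)
print(len(vocab), [len(x) for x in X])
```

Every feature vector has exactly one entry per vocabulary word, so a realistic vocabulary of tens of thousands of words gives tens of thousands of features.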

📗 Dimensionality reduction is a form of unsupervised learning since it does not require labeled training data.

# Principal Component Analysis

📗 Principal component analysis rotates the axes (\(x_{1}, x_{2}, ..., x_{m}\)) so that the first \(K\) new axes (\(u_{1}, u_{2}, ..., u_{K}\)) capture the directions of the greatest variability of the training data. The new axes are called principal components: Link, Wikipedia.

➩ Find the direction of the greatest variability, \(u_{1}\).

➩ Find the direction of the greatest variability that is orthogonal (perpendicular) to \(u_{1}\), say \(u_{2}\).

➩ Repeat until there are \(K\) such directions \(u_{1}, u_{2}, ..., u_{K}\).

TopHat Discussion

ID:📗 [1 points] Given the following dataset, find the direction (click on the diagram below to change the direction) in which the variation is the largest.

# Geometry

📗 A vector \(u_{k}\) is a unit vector if it has length 1: \(\left\|u_{k}\right\|^{2} = u^\top_{k} u_{k} = u_{k 1}^{2} + u_{k 2}^{2} + ... + u_{k m}^{2} = 1\).

📗 Two vectors \(u_{j}, u_{k}\) are orthogonal (or uncorrelated) if \(u^\top_{j} u_{k} = u_{j 1} u_{k 1} + u_{j 2} u_{k 2} + ... + u_{j m} u_{k m} = 0\).

📗 The projection of \(x_{i}\) onto a unit vector \(u_{k}\) is \(\left(u^\top_{k} x_{i}\right) u_{k} = \left(u_{k 1} x_{i 1} + u_{k 2} x_{i 2} + ... + u_{k m} x_{i m}\right) u_{k}\) (it is a number \(u^\top_{k} x_{i}\) multiplied by a vector \(u_{k}\)). Since \(u_{k}\) is a unit vector, the signed length of the projection is \(u^\top_{k} x_{i}\) (it is negative when \(x_{i}\) points away from \(u_{k}\), and its absolute value is the length).

Math Note

📗 The dot product between two vectors \(a = \left(a_{1}, a_{2}, ..., a_{m}\right)\) and \(b = \left(b_{1}, b_{2}, ..., b_{m}\right)\) is usually written as \(a \cdot b = a^\top b = \begin{bmatrix} a_{1} & a_{2} & ... & a_{m} \end{bmatrix} \begin{bmatrix} b_{1} \\ b_{2} \\ ... \\ b_{m} \end{bmatrix} = a_{1} b_{1} + a_{2} b_{2} + ... + a_{m} b_{m}\). In this course, to avoid confusion with scalar multiplication, the notation \(a^\top b\) will be used instead of \(a \cdot b\).

📗 If \(x_{i}\) is projected onto some vector \(u_{k}\) that is not a unit vector, then the formula for projection is \(\left(\dfrac{u^\top_{k} x_{i}}{u^\top_{k} u_{k}}\right) u_{k}\). Since for unit vector \(u_{k}\), \(u^\top_{k} u_{k} = 1\), the two formulas are equivalent.
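
The two projection formulas above can be checked numerically with a short sketch. The function names `dot` and `project` and the example vectors are hypothetical, chosen only for this illustration.

```python
def dot(a, b):
    """Dot product a^T b of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def project(x, u):
    """Project x onto the direction of u; u does not need to be a unit vector."""
    scale = dot(u, x) / dot(u, u)  # equals u^T x when u^T u = 1
    return [scale * ui for ui in u]


x = [3.0, 4.0]
u = [1.0, 0.0]  # unit vector
v = [2.0, 0.0]  # same direction as u, but not unit length
p_u = project(x, u)
p_v = project(x, v)
print(p_u, p_v, dot(u, x))
```

Both calls give the same projected vector, since dividing by \(u^\top_{k} u_{k}\) removes the effect of the length of the direction vector.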

TopHat Discussion

📗 [1 points] Compute the projection of the red vector onto the blue vector (drag the tips of the red or blue arrow, the green arrow represents the projection).

# Statistics

📗 The (unbiased) estimate of the variance of \(x_{1}, x_{2}, ..., x_{n}\) in one dimensional space (\(m = 1\)) is \(\dfrac{1}{n - 1} \left(\left(x_{1} - \mu\right)^{2} + \left(x_{2} - \mu\right)^{2} + ... + \left(x_{n} - \mu\right)^{2}\right)\), where \(\mu\) is the estimate of the mean (average) or \(\mu = \dfrac{1}{n} \left(x_{1} + x_{2} + ... + x_{n}\right)\). The maximum likelihood estimate has \(\dfrac{1}{n}\) instead of \(\dfrac{1}{n-1}\).

📗 In higher dimensional space, the estimate of the variance is \(\dfrac{1}{n - 1} \left(\left(x_{1} - \mu\right)\left(x_{1} - \mu\right)^\top + \left(x_{2} - \mu\right)\left(x_{2} - \mu\right)^\top + ... + \left(x_{n} - \mu\right)\left(x_{n} - \mu\right)^\top\right)\). Note that \(\mu\) is an \(m\) dimensional vector, and each of the \(\left(x_{i} - \mu\right)\left(x_{i} - \mu\right)^\top\) is an \(m\) by \(m\) matrix, so the resulting variance estimate is a matrix called the variance-covariance matrix.

📗 If \(\mu = 0\), then the projected variance of \(x_{1}, x_{2}, ..., x_{n}\) in the direction \(u_{k}\) can be computed by \(u^\top_{k} \Sigma u_{k}\) where \(\Sigma = \dfrac{1}{n - 1} X^\top X\), and \(X\) is the data matrix where row \(i\) is \(x_{i}\).

➩ If \(\mu \neq 0\), then \(X\) should be centered, that is, the mean of each column should be subtracted from each column.

Math Note

📗 The projected variance formula can be derived by \(u^\top_{k} \Sigma u_{k} = \dfrac{1}{n - 1} u^\top_{k} X^\top X u_{k} = \dfrac{1}{n - 1} \left(\left(u^\top_{k} x_{1}\right)^{2} + \left(u^\top_{k} x_{2}\right)^{2} + ... + \left(u^\top_{k} x_{n}\right)^{2}\right)\) which is the estimate of the variance of the projection of the data in the \(u_{k}\) direction.
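
The identity above can be verified on a small made-up dataset. This is a sketch in plain Python; the helper names (`center`, `covariance`, `quad_form`) and the data are assumptions for illustration.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def center(X):
    """Subtract the mean of each column from that column."""
    n, m = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / n for j in range(m)]
    return [[row[j] - mu[j] for j in range(m)] for row in X]


def covariance(Xc):
    """Sigma = X^T X / (n - 1) for an already centered data matrix."""
    n, m = len(Xc), len(Xc[0])
    return [[sum(row[j] * row[k] for row in Xc) / (n - 1) for k in range(m)]
            for j in range(m)]


def quad_form(u, S):
    """Compute u^T S u."""
    return sum(u[j] * S[j][k] * u[k] for j in range(len(u)) for k in range(len(u)))


X = [[2.0, 0.0], [0.0, 1.0], [-2.0, 0.0], [0.0, -1.0]]  # made-up data
Xc = center(X)
Sigma = covariance(Xc)
u = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # a unit direction

via_matrix = quad_form(u, Sigma)
projections = [dot(u, x) for x in Xc]
via_projection = sum(p * p for p in projections) / (len(Xc) - 1)
print(via_matrix, via_projection)
```

The two numbers agree up to rounding: the quadratic form \(u^\top_{k} \Sigma u_{k}\) and the variance of the projected lengths are the same quantity.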

# Principal Component Analysis

📗 The goal is to find the direction that maximizes the projected variance: \(\displaystyle\max_{u_{k}} u^\top_{k} \Sigma u_{k}\) subject to \(u^\top_{k} u_{k} = 1\).

➩ This constrained maximization problem has candidate solutions (stationary points) \(u_{k}\) that satisfy \(\Sigma u_{k} = \lambda u_{k}\), and by definition of eigenvalues, \(u_{k}\) is an eigenvector corresponding to the eigenvalue \(\lambda\) of the matrix \(\Sigma\): Wikipedia.

➩ At a solution, \(u^\top_{k} \Sigma u_{k} = u^\top_{k} \lambda u_{k} = \lambda u^\top_{k} u_{k} = \lambda\), which means, the larger the \(\lambda\), the larger the variability in the direction of \(u_{k}\).

➩ Therefore, if all eigenvalues of \(\Sigma\) are computed and sorted \(\lambda_{1} \geq \lambda_{2} \geq ... \geq \lambda_{m}\), then the corresponding eigenvectors are the principal components: \(u_{1}\) is the first principal component corresponding to the direction of the largest variability; \(u_{2}\) is the second principal component corresponding to the direction of the second largest variability orthogonal to \(u_{1}\), ...
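
As a rough sketch of how the first principal component could be computed, the code below uses power iteration (repeatedly multiplying by \(\Sigma\) and renormalizing). Power iteration is a technique swapped in here for illustration, not something the notes prescribe; in practice a library eigen-solver would normally be used. The matrix `Sigma` is made up, and the starting vector is assumed not to be orthogonal to \(u_{1}\).

```python
import math


def mat_vec(S, u):
    """Matrix-vector product S u."""
    return [sum(S[j][k] * u[k] for k in range(len(u))) for j in range(len(S))]


def normalize(u):
    """Rescale u to unit length."""
    norm = math.sqrt(sum(ui * ui for ui in u))
    return [ui / norm for ui in u]


def first_principal_component(S, iterations=200):
    """Power iteration: returns (largest eigenvalue, unit eigenvector)."""
    u = normalize([1.0] * len(S))  # assumed not orthogonal to u_1
    for _ in range(iterations):
        u = normalize(mat_vec(S, u))
    # At an eigenvector, u^T S u equals the eigenvalue.
    eigenvalue = sum(ui * vi for ui, vi in zip(u, mat_vec(S, u)))
    return eigenvalue, u


Sigma = [[5.0, 2.0], [2.0, 2.0]]  # made-up variance matrix, eigenvalues 6 and 1
lam1, u1 = first_principal_component(Sigma)
print(lam1, u1)
```

For this matrix the largest eigenvalue is 6 with unit eigenvector \(\left(2, 1\right) / \sqrt{5}\), which is what the iteration converges to.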

TopHat Quiz

(Past Exam Question) ID:📗 [3 points] Given the variance matrix \(\hat{\Sigma}\) = , what is the first principal component? Enter a unit vector.

# Number of Dimensions

📗 There are a few ways to choose the number of principal components \(K\).

➩ \(K\) can be selected based on prior knowledge or requirement (for example, \(K = 2, 3\) for visualization tasks).

➩ \(K\) can be the number of non-zero eigenvalues.

➩ \(K\) can be the number of eigenvalues that are larger than some threshold.
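
The last two rules can be sketched as a simple count over the eigenvalues. The function name `choose_k`, the eigenvalues, and the thresholds are made up; a small positive tolerance stands in for "non-zero" because computed eigenvalues are rarely exactly zero.

```python
def choose_k(eigenvalues, threshold=0.0):
    """Count the eigenvalues that are strictly larger than the threshold."""
    return sum(1 for lam in eigenvalues if lam > threshold)


eigenvalues = [6.0, 1.0, 0.2, 0.0]  # made-up eigenvalues of Sigma
k_nonzero = choose_k(eigenvalues, threshold=1e-12)  # non-zero eigenvalues
k_threshold = choose_k(eigenvalues, threshold=0.5)  # eigenvalues above 0.5
print(k_nonzero, k_threshold)
```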

# Reduced Feature Space

📗 An original item is in the \(m\) dimensional feature space: \(x_{i} = \left(x_{i 1}, x_{i 2}, ..., x_{i m}\right)\).

📗 The new item in the \(K\) dimensional space with basis \(u_{1}, u_{2}, ..., u_{K}\) has coordinates equal to the projected lengths of the original item: \(\left(u^\top_{1} x_{i}, u^\top_{2} x_{i}, ..., u^\top_{K} x_{i}\right)\).

📗 Other supervised learning algorithms can be applied on the new features.
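
A minimal sketch of computing the new features for one item, assuming the principal components are already known. The vectors `u1`, `u2`, and `x` are hypothetical values, not taken from any quiz in these notes.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def pca_features(x, components):
    """New coordinates (u_1^T x, ..., u_K^T x) of x in the PCA basis."""
    return [dot(u, x) for u in components]


# Hypothetical orthonormal principal components in R^3.
u1 = [1 / math.sqrt(2), 1 / math.sqrt(2), 0.0]
u2 = [0.0, 0.0, 1.0]
x = [1.0, 3.0, 2.0]
z = pca_features(x, [u1, u2])  # 2D features replacing the 3D item
print(z)
```

The item with three original features is now described by two numbers, one per principal component.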

TopHat Quiz

(Past Exam Question) ID:📗 [2 points] You performed PCA (Principal Component Analysis) in \(\mathbb{R}^{3}\). If the first principal component is \(u_{1}\) = \(\approx\) and the second principal component is \(u_{2}\) = \(\approx\) . What is the new 2D coordinates (new features created by PCA) for the point \(x\) = ?

📗 In the diagram, the black axes are the original axes, the green axes are the PCA axes, the red vector is \(x\), the red point is the reconstruction \(\hat{x}\) using the PCA axes.

# Reconstruction

📗 The original item can be reconstructed using the principal components. If all \(m\) principal components are used, then the original item can be perfectly reconstructed: \(x_{i} = \left(u^\top_{1} x_{i}\right) u_{1} + \left(u^\top_{2} x_{i}\right) u_{2} + ... + \left(u^\top_{m} x_{i}\right) u_{m}\).

📗 The original item can be approximated by the first \(K\) principal components: \(x_{i} \approx \left(u^\top_{1} x_{i}\right) u_{1} + \left(u^\top_{2} x_{i}\right) u_{2} + ... + \left(u^\top_{K} x_{i}\right) u_{K}\).

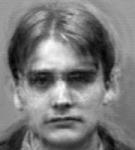

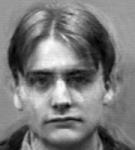

➩ Eigenfaces are eigenvectors of face images: every face can be written as a linear combination of eigenfaces. The first \(K\) eigenfaces and their coefficients can be used to determine and reconstruct specific faces: Link, Wikipedia.
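
The reconstruction formulas above can be sketched as follows. The orthonormal vectors `u1`, `u2`, `u3` and the item `x` are made-up values for illustration.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def reconstruct(x, components):
    """Sum of (u_k^T x) u_k over the given principal components."""
    x_hat = [0.0] * len(x)
    for u in components:
        coefficient = dot(u, x)
        x_hat = [xh + coefficient * ui for xh, ui in zip(x_hat, u)]
    return x_hat


# Hypothetical orthonormal principal components in R^3.
u1 = [1 / math.sqrt(2), 1 / math.sqrt(2), 0.0]
u2 = [0.0, 0.0, 1.0]
u3 = [1 / math.sqrt(2), -1 / math.sqrt(2), 0.0]
x = [1.0, 3.0, 2.0]

x_hat_2 = reconstruct(x, [u1, u2])      # approximation with K = 2
x_hat_3 = reconstruct(x, [u1, u2, u3])  # all m = 3 components
print(x_hat_2, x_hat_3)
```

With \(K = 2\) the reconstruction is approximately \(\left(2, 2, 2\right)\), and with all three components the original item is recovered up to rounding.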

Example

📗 An example of 111 eigenfaces:

📗 Reconstruction examples: Face 1 and Face 2, each reconstructed using the first \(K = 1, 20, 40, 50, 80\) eigenfaces (images not shown).

➩ Images by wellecks

# Non-linear PCA

📗 PCA features are linear in the original features.

📗 The kernel trick (used in support vector machines) can be used to first produce new features that are non-linear in the original features, then PCA can be applied to the new features: Wikipedia.

➩ Dual optimization techniques can be used for kernels such as the RBF kernel, which produces an infinite number of new features.

📗 A neural network with both input and output being the features (no soft-max layer) can be used for non-linear dimensionality reduction, called an auto-encoder: Wikipedia.

➩ The hidden units (\(K < m\) units, fewer than the number of input features) can be considered a lower dimensional representation of the original features.

# Generative Adversarial Networks

➩ The generator (deconvolutional neural network): input is noise, output is fake images (or text).

➩ The discriminator (convolutional neural network): input is a fake or real image, output is a binary class indicating whether the image is real.

➩ The two networks are trained together, competing in a zero-sum game: the generator tries to maximize the discriminator loss, and the discriminator tries to minimize the same loss: Wikipedia.

➩ The solution to the zero-sum game, called the Nash equilibrium, is the solution to \(\displaystyle\max_{w^{\left(G\right)}} \displaystyle\min_{w^{\left(D\right)}} C\left(w^{\left(G\right)}, w^{\left(D\right)}\right)\), where \(C\) is the loss of the discriminator (the generator maximizes it and the discriminator minimizes it).
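
As one concrete instance (an assumption, since the notes do not fix a particular loss), the original GAN formulation uses the binary cross-entropy as the discriminator loss. The symbols \(D\left(x\right)\) (the discriminator's predicted probability that \(x\) is real), \(G\left(z\right)\) (the generator's output for noise \(z\)), and the sample index \(i\) are notation introduced only for this sketch:

\[C\left(w^{\left(G\right)}, w^{\left(D\right)}\right) = -\dfrac{1}{n} \displaystyle\sum_{i=1}^{n} \left[\log D\left(x_{i}\right) + \log\left(1 - D\left(G\left(z_{i}\right)\right)\right)\right]\]

The discriminator lowers \(C\) by making \(D\left(x_{i}\right)\) close to 1 and \(D\left(G\left(z_{i}\right)\right)\) close to 0, while the generator raises \(C\) by making \(D\left(G\left(z_{i}\right)\right)\) close to 1.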

📗 A variational auto-encoder is another generative model that maps the input into a lower dimensional latent space (encoder) that can be used to reconstruct the original input (decoder), similar to PCA: Wikipedia, Wikipedia.

📗 A diffusion model is a generative model that gradually adds or removes noise (adding noise is similar to an encoder, removing noise is similar to a decoder): Wikipedia.

# Transformers

📗 A transformer is a neural network with an attention mechanism and without recurrent units: Wikipedia.

📗 Attention units keep track of which parts of the sentence are important and should be paid attention to, for example, scaled dot product attention units: Wikipedia.

➩ \(a^{\left(h\right)}_{t} = g\left(w^{\left(x\right)} \cdot x_{t} + b^{\left(x\right)}\right)\) is not recurrent.

➩ There are value units \(a^{\left(v\right)}_{t} = w^{\left(v\right)} \cdot a^{\left(h\right)}_{t}\), key units \(a^{\left(k\right)}_{t} = w^{\left(k\right)} \cdot a^{\left(h\right)}_{t}\), and query units \(a^{\left(q\right)}_{t} = w^{\left(q\right)} \cdot a^{\left(h\right)}_{t}\), and the attention context can be computed as \(g\left(\dfrac{a^{\left(q\right)}_{s} \cdot a^{\left(k\right)}_{t}}{\sqrt{m}}\right) \cdot a^{\left(v\right)}_{t}\) where \(g\) is the softmax activation (normalized over the positions \(t\), with the weighted values summed over \(t\)): here \(a^{\left(q\right)}_{s}\) represents the first word, \(a^{\left(k\right)}_{t}\) represents the second word, \(a^{\left(q\right)}_{s} \cdot a^{\left(k\right)}_{t}\) is the dot product (which is proportional to the cosine of the angle between the two vectors, i.e. it measures how similar or related the two words are), and \(a^{\left(v\right)}_{t}\) is the value of the second word to send to the next layer.

➩ The attention matrix is usually masked so that a unit \(a_{s}\) cannot pay attention to another unit in the future \(a_{s+1}, a_{s+2}, a_{s+3}, ...\) by making the \(a^{\left(q\right)}_{s} \cdot a^{\left(k\right)}_{t} = -\infty\) when \(t > s\) so that \(e^{a^{\left(q\right)}_{s} \cdot a^{\left(k\right)}_{t}} = 0\) when \(t > s\).

➩ There can be multiple parallel attention units called multi-head attention.
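
A minimal single-head sketch of scaled dot product attention with a causal mask, in plain Python. It assumes the query, key, and value vectors are already computed, takes \(m\) to be the length of a key vector, and masks keys at positions \(t\) later than the query position \(s\). The function names and the toy vectors are hypothetical.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def softmax(scores):
    """Softmax over a list; entries equal to -inf receive weight 0."""
    top = max(scores)
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values):
    """For each position s, a softmax-weighted sum of values at positions t <= s."""
    m = len(keys[0])
    contexts = []
    for s, q in enumerate(queries):
        # Future positions (t > s) get score -inf, so their weight is exactly 0.
        scores = [dot(q, k) / math.sqrt(m) if t <= s else -math.inf
                  for t, k in enumerate(keys)]
        weights = softmax(scores)
        contexts.append([sum(w * v[j] for w, v in zip(weights, values))
                         for j in range(len(values[0]))])
    return contexts


queries = [[1.0, 0.0], [0.0, 1.0]]  # made-up query units
keys = [[1.0, 0.0], [0.0, 1.0]]     # made-up key units
values = [[1.0, 0.0], [0.0, 1.0]]   # made-up value units
contexts = causal_attention(queries, keys, values)
print(contexts)
```

The first position can only attend to itself, so its context is exactly its own value; the second position mixes both values, with more weight on the key that matches its query.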

📗 Positional encodings are used so that features contain information about the word token and its position.
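
The notes do not say which positional encoding is used. One common choice, assumed here purely for illustration, is the sinusoidal encoding, which is added to the token features. The function name `positional_encoding` and the constant 10000 follow that scheme, and the dimension is assumed to be even.

```python
import math


def positional_encoding(position, dim):
    """Sinusoidal encoding of one position; dim is assumed to be even."""
    encoding = []
    for i in range(0, dim, 2):
        angle = position / (10000 ** (i / dim))
        # Even index gets the sine, the following odd index gets the cosine.
        encoding.extend([math.sin(angle), math.cos(angle)])
    return encoding


token_features = [0.5, -0.2, 0.1, 0.3]  # made-up features of one word token
position = 3
encoded = [f + p for f, p in
           zip(token_features, positional_encoding(position, len(token_features)))]
print(encoded)
```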

📗 The layer normalization trick is used so that the means and variances of the units between attention layers and fully connected layers stay the same.
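
A minimal sketch of the normalization step for the units of one layer: subtract the mean and divide by the standard deviation. The learnable scale and shift that usually follow are omitted, and the small constant `eps` (added to avoid division by zero) and the example activations are assumptions.

```python
import math


def layer_norm(units, eps=1e-5):
    """Normalize one layer's units to mean 0 and variance close to 1."""
    mean = sum(units) / len(units)
    variance = sum((a - mean) ** 2 for a in units) / len(units)
    return [(a - mean) / math.sqrt(variance + eps) for a in units]


a = [1.0, 2.0, 3.0, 4.0]  # made-up activations of one layer
normalized = layer_norm(a)
new_mean = sum(normalized) / len(normalized)
new_variance = sum((v - new_mean) ** 2 for v in normalized) / len(normalized)
print(new_mean, new_variance)
```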

# Large Language Models

➩ Fine tuning for pre-trained models freezes a subset of the weights (usually in earlier layers) and updates the other weights (usually the later layers) based on new datasets: Wikipedia.

➩ Prompt engineering does not alter the weights, and only provides more context (in the form of examples): Link, Wikipedia.

➩ Reinforcement learning from human feedback (RLHF) uses reinforcement learning techniques to update the model based on human feedback (in the form of rewards, and the model optimizes the reward instead of some form of costs): Wikipedia.

📗 BERT (Bidirectional Encoder Representations from Transformers): Wikipedia.

📗 Many other large language models developed by different companies: Link.

📗 Adversarial attacks on LLMs can be in the form of prompt injection attacks: Link.

📗 Notes and code adapted from the course taught by Professors Jerry Zhu, Yudong Chen, Yingyu Liang, and Charles Dyer.

📗 Content from note blocks marked "optional" and content from Wikipedia and other demo links are helpful for understanding the materials, but will not be explicitly tested on the exams.

Last Updated: January 19, 2026 at 9:18 PM