Prev: L1, Next: L2 , Assignment: A1 , Practice Questions: M2 M3 M4 , Links: Canvas, Piazza, Zoom, TopHat (453473)


# Welcome to CS540

📗 The main components of the course include:

➩ Assignments and Projects (HW1-8 and CP1-4): 60%

➩ Exams: 40%

📗 *NEW* Competitive Projects (CP1-4):

➩ Participate in 2 or more competitions (out of 4 choices).

➩ Create your own training dataset, network architecture, and training algorithm (with strategic considerations).

➩ Strict deadline and format to submit the neural networks you trained.

➩ Grades based on ranking in class: in the past, the grades were curved at the end, and the assignment averages were close to perfect, so the ranking was effectively based only on the exams. Ranking-based assignments starting this summer will shift some of the weight from the exams back to the assignments, which are the more important part of the course.

📗 *NEW* Use of LLMs:

➩ Students are encouraged to generate code for assignments and projects and solve exam questions using Large Language Models (LLMs).

➩ Remember to give attribution and provide the prompts.

TopHat Discussion

📗 Why are you taking the course?

➩ Learn how to use AI tools like ChatGPT? This is not covered in the course.

➩ Learn how to program AI tools like ChatGPT? Only simple models.

➩ Learn the math and statistics behind AI algorithms? Yes, this is the focus of the course.

# ChatGPT

📗 GPT stands for Generative Pre-trained Transformer.

➩ Unsupervised learning (convert text to numerical vectors).

➩ Supervised learning: (1) discriminative (predict answers based on questions), (2) generative (predict next word based on previous word).

➩ Reinforcement learning (update model based on human feedback).

TopHat Discussion

📗 Have you used ChatGPT (or another Large Language Model)? What did you use the LLM for?

➩ Solve homework or exam questions? For CS540, it is possible with some prompt engineering: Link.

➩ Write code for projects? For CS540, you are allowed and encouraged to use large language models (LLMs) to help with writing code (at the moment, most LLMs cannot write complete projects).

➩ Write stories or create images? In the past, there were CS540 assignments asking students to use earlier versions of GPT to perform these tasks and compare the results with human creations.

➩ Other uses?

# N-gram Model

📗 A sentence is a sequence of words (tokens). Each unique word token is called a word type. The set of word types is called the vocabulary.

📗 A sentence with length \(d\) can be represented by \(\left(w_{1}, w_{2}, ..., w_{d}\right)\).

📗 The probability of observing a word \(w_{t}\) at position \(t\) of the sentence can be written as \(\mathbb{P}\left\{w_{t}\right\}\) (or in statistics \(\mathbb{P}\left\{W_{t} = w_{t}\right\}\)).

📗 The N-gram model is a language model that assumes the probability of observing a word \(w_{t}\) at position \(t\) depends only on the words at positions \(t-1, t-2, ..., t-N+1\). In statistics notation, \(\mathbb{P}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{0}\right\} = \mathbb{P}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{t-N+1}\right\}\) (the \(|\) is pronounced "given"; \(\mathbb{P}\left\{a | b\right\}\) is "the probability of a given b").

# Unigram Model

📗 Unigram model assumes independence (not a realistic language model): \(\mathbb{P}\left\{w_{1}, w_{2}, ..., w_{d}\right\} = \mathbb{P}\left\{w_{1}\right\} \mathbb{P}\left\{w_{2}\right\} ... \mathbb{P}\left\{w_{d}\right\}\).

📗 Independence means \(\mathbb{P}\left\{w_{t} | w_{t-1}, w_{t-2}, ... w_{0}\right\} = \mathbb{P}\left\{w_{t}\right\}\) or the probability of observing \(w_{t}\) is independent of all previous words.

# Maximum Likelihood Estimation

📗 Given a training set (many sentences or text documents), \(\mathbb{P}\left\{w_{t}\right\}\) is estimated by \(\hat{\mathbb{P}}\left\{w_{t}\right\} = \dfrac{c_{w_{t}}}{c_{1} + c_{2} + ... + c_{m}}\), where \(m\) is the size of the vocabulary (and the vocabulary is \(\left\{1, 2, ..., m\right\}\)), and \(c_{w_{t}}\) is the number of times the word \(w_{t}\) appeared in the training set.

📗 This is called the maximum likelihood estimator because it maximizes the likelihood (probability) of observing the sentences in the training set.
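
📗 A minimal sketch of the unigram estimator in Python, assuming the training set has already been split into a list of tokens. The function names and the toy training set are hypothetical and only illustrate the counting formula above.

```python
from collections import Counter

def unigram_mle(tokens):
    """Return {word: c_w / (c_1 + ... + c_m)} for a list of tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

def unigram_sentence_probability(probabilities, sentence):
    """Multiply the unigram probabilities; unseen words get probability 0."""
    result = 1.0
    for word in sentence:
        result *= probabilities.get(word, 0.0)
    return result

# Hypothetical toy training set (not course data).
training_tokens = "the cat sat on the mat".split()
estimates = unigram_mle(training_tokens)
sentence_probability = unigram_sentence_probability(estimates, ["the", "cat"])
```

Here "the" appears 2 times out of 6 tokens, so its estimate is 2/6, and the sentence probability is the product of the two word estimates.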

Math Note

📗 Suppose the vocabulary is \(\left\{a, b\right\}\), and \(p_{1} = \hat{\mathbb{P}}\left\{a\right\}, p_{2} = \hat{\mathbb{P}}\left\{b\right\}\) with \(p_{1} + p_{2} = 1\), based on a training set with \(c_{1}\) \(a\)'s and \(c_{2}\) \(b\)'s. Then the probability of observing \(c_{1}\) \(a\)'s and \(c_{2}\) \(b\)'s (in any order) is \(\dbinom{c_{1} + c_{2}}{c_{1}} p_{1}^{c_{1}} p_{2}^{c_{2}}\), which is maximized at \(p_{1} = \dfrac{c_{1}}{c_{1} + c_{2}}, p_{2} = \dfrac{c_{2}}{c_{1} + c_{2}}\).
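
📗 A short sketch of why this is the maximizer: substitute \(p_{2} = 1 - p_{1}\), take the logarithm (the binomial coefficient does not depend on \(p_{1}\), so it is dropped), and set the derivative to zero. The symbol \(\ell\) for the log-likelihood is introduced here only for this derivation.

```latex
\begin{aligned}
\ell(p_{1}) &= c_{1} \log p_{1} + c_{2} \log \left(1 - p_{1}\right), \\
\ell'(p_{1}) &= \dfrac{c_{1}}{p_{1}} - \dfrac{c_{2}}{1 - p_{1}} = 0
\;\Rightarrow\; c_{1} \left(1 - p_{1}\right) = c_{2} p_{1}
\;\Rightarrow\; p_{1} = \dfrac{c_{1}}{c_{1} + c_{2}}, \\
\ell''(p_{1}) &= -\dfrac{c_{1}}{p_{1}^{2}} - \dfrac{c_{2}}{\left(1 - p_{1}\right)^{2}} < 0 .
\end{aligned}
```

The negative second derivative shows that the critical point is a maximum.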

TopHat Quiz

📗 [1 point] Given a training set (the script of "Guardians of the Galaxy" for Vin Diesel: Wikipedia), "I am Groot, I am Groot, ... (13 times), ..., I am Groot, We are Groot". What are the maximum likelihood estimates of the unigram model based on this training set? What is the probability of observing a new sentence "I am Groot" based on the estimated unigram model?


📗 Answer:

# Bigram Model

📗 Bigram model assumes Markov property: \(\mathbb{P}\left\{w_{1}, w_{2}, ..., w_{d}\right\} = \mathbb{P}\left\{w_{1}\right\} \mathbb{P}\left\{w_{2} | w_{1}\right\} \mathbb{P}\left\{w_{3} | w_{2}\right\} ... \mathbb{P}\left\{w_{d} | w_{d-1}\right\}\).

📗 Markov property means \(\mathbb{P}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{0}\right\} = \mathbb{P}\left\{w_{t} | w_{t-1}\right\}\) or the probability distribution of observing \(w_{t}\) only depends on the previous word in the sentence \(w_{t-1}\). A visualization of Markov chains: Link.

📗 The maximum likelihood estimator of \(\mathbb{P}\left\{w_{t} | w_{t-1}\right\}\) is \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}\right\} = \dfrac{c_{w_{t-1} w_{t}}}{c_{w_{t-1}}}\), where \(c_{w_{t-1} w_{t}}\) is the number of times the phrase (sequence of words) \(w_{t-1} w_{t}\) appeared in the training set.
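
📗 A minimal sketch of the bigram estimator, assuming the training set is a list of tokenized sentences. One assumption worth flagging: the denominator here counts only the occurrences of the previous word that are followed by another word, so that the estimates for each previous word sum to 1. The function name and toy sentences are hypothetical.

```python
from collections import Counter

def bigram_mle(sentences):
    """Return {(previous, current): c_{previous current} / c_{previous}}."""
    pair_counts = Counter()
    previous_counts = Counter()
    for sentence in sentences:
        # zip pairs each word with the word that follows it.
        for previous, current in zip(sentence, sentence[1:]):
            pair_counts[(previous, current)] += 1
            previous_counts[previous] += 1
    return {
        pair: count / previous_counts[pair[0]]
        for pair, count in pair_counts.items()
    }

# Hypothetical toy training set (not course data).
sentences = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
bigram_estimates = bigram_mle(sentences)
```

In this toy set "the" is followed by "cat" in 2 of its 3 occurrences, so the estimate of \(\mathbb{P}\left\{cat | the\right\}\) is 2/3.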

Math Note

📗 Conditional probability is defined as \(\mathbb{P}\left\{a | b\right\} = \dfrac{\mathbb{P}\left\{a, b\right\}}{\mathbb{P}\left\{b\right\}}\), so \(\mathbb{P}\left\{w_{t} | w_{t-1}\right\} = \dfrac{\mathbb{P}\left\{w_{t-1} w_{t}\right\}}{\mathbb{P}\left\{w_{t-1}\right\}}\), where \(\mathbb{P}\left\{w_{t-1} w_{t}\right\}\) is the probability of observing the phrase \(w_{t-1} w_{t}\).

TopHat Quiz

📗 [1 point] Given a training set (the script of "Guardians of the Galaxy" for Vin Diesel: Wikipedia), "I am Groot, I am Groot, ... (13 times), ..., I am Groot, We are Groot". What are the maximum likelihood estimates of the bigram model based on this training set? What is the probability of observing a new sentence "I am Groot" based on the estimated bigram model?


📗 Answer:

# Transition Matrix

📗 The bigram probabilities can be stored in a matrix called the transition matrix of a Markov chain. The number in row \(i\) column \(j\) is the probability \(\mathbb{P}\left\{j | i\right\}\) or the estimated probability \(\hat{\mathbb{P}}\left\{j | i\right\}\): Link.

📗 Given the initial distribution of word types \(p_{0}\) (written as a row vector), the distribution of the next token is the product \(p_{0} M\), where \(M\) is the transition matrix.

📗 The stationary distribution of a Markov chain is an initial distribution such that all subsequent distributions will be the same as the initial distribution, which means if the transition matrix is \(M\), then the stationary distribution is a distribution \(p\) satisfying \(p M = p\).

Math Note

📗 An alternative way to compute the stationary distribution (if it exists) is to start with any initial distribution \(p_{0}\) and multiply it by \(M\) repeatedly until it stops changing (that is, compute the limit \(p_{0} M^{\infty}\), with \(p_{0}\) written as a row vector).
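
📗 The repeated multiplication can be sketched in plain Python. The 2-word transition matrix below is a hypothetical example, and 200 steps is an arbitrary choice that is more than enough for this small chain; distributions are treated as row vectors.

```python
def step(distribution, transition):
    """One step of the chain: the row vector p times the matrix M."""
    size = len(transition)
    return [
        sum(distribution[i] * transition[i][j] for i in range(size))
        for j in range(size)
    ]

def iterate(distribution, transition, steps=200):
    """Approximate p_0 M^infinity by multiplying by M many times."""
    for _ in range(steps):
        distribution = step(distribution, transition)
    return distribution

# Hypothetical chain; row i, column j holds P{j | i}.
M = [[0.9, 0.1],
     [0.5, 0.5]]

one_step = step([1.0, 0.0], M)   # distribution of the next word
limit = iterate([1.0, 0.0], M)   # approximately stationary
check = step(limit, M)           # should be (almost) equal to limit
```

For this matrix the limit is close to \(\left(5/6, 1/6\right)\), which satisfies \(p M = p\).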

📗 It is easier to find powers of diagonal matrices, so if the transition matrix can be written as \(M = P D P^{-1}\) where \(D\) is a diagonal matrix (off-diagonal entries are 0, diagonal entries are called eigenvalues), and \(P\) is the matrix where the columns are eigenvectors, then \(M^{\infty} = \left(P D P^{-1}\right)\left(P D P^{-1}\right) ... = P D^{\infty} P^{-1}\).
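
📗 A worked example of the diagonalization, using a hypothetical 2-state transition matrix chosen for illustration. Its eigenvalues are \(1\) and \(0.4\), and \(0.4^{k} \to 0\) as \(k\) grows, so only the eigenvalue \(1\) survives in \(D^{\infty}\).

```latex
M = \begin{pmatrix} 0.9 & 0.1 \\ 0.5 & 0.5 \end{pmatrix}
  = \underbrace{\begin{pmatrix} 1 & 1 \\ 1 & -5 \end{pmatrix}}_{P}
    \underbrace{\begin{pmatrix} 1 & 0 \\ 0 & 0.4 \end{pmatrix}}_{D}
    \underbrace{\dfrac{1}{6} \begin{pmatrix} 5 & 1 \\ 1 & -1 \end{pmatrix}}_{P^{-1}},
\qquad
M^{\infty} = P \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix} P^{-1}
  = \dfrac{1}{6} \begin{pmatrix} 5 & 1 \\ 5 & 1 \end{pmatrix}.
```

Every row of \(M^{\infty}\) is \(\left(5/6, 1/6\right)\), so \(p_{0} M^{\infty} = \left(5/6, 1/6\right)\) for any initial distribution \(p_{0}\), and this is the stationary distribution of the example chain.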

# Trigram Model

📗 The same formula can be applied to trigram models: \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}\right\} = \dfrac{c_{w_{t-2} w_{t-1} w_{t}}}{c_{w_{t-2} w_{t-1}}}\).

📗 In a document, some longer sequences of tokens never appear. For example, when \(w_{t-2} w_{t-1}\) never appears, the maximum likelihood estimator \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}\right\}\) will be \(\dfrac{0}{0}\) and undefined. As a result, Laplace smoothing (add-one smoothing) is often used: \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}\right\} = \dfrac{c_{w_{t-2} w_{t-1} w_{t}} + 1}{c_{w_{t-2} w_{t-1}} + m}\), where \(m\) is the number of unique words in the document.

📗 Laplace smoothing can be used for bigram and unigram models too: \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}\right\} = \dfrac{c_{w_{t-1} w_{t}} + 1}{c_{w_{t-1}} + m}\) for bigram and \(\hat{\mathbb{P}}\left\{w_{t}\right\} = \dfrac{c_{w_{t}} + 1}{c_{1} + c_{2} + ... + c_{m} + m}\) for unigram.

# N-gram Model

📗 In general N-gram probabilities can be estimated in a similar way: Wikipedia.

➩ Without smoothing: \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{t-N+1}\right\} = \dfrac{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t}}}{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t-1}}}\).

➩ With add-one Laplace smoothing: \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{t-N+1}\right\} = \dfrac{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t}} + 1}{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t-1}} + m}\), where \(m\) is the number of unique words in the document.

➩ With general Laplace smoothing with parameter \(\delta\): \(\hat{\mathbb{P}}\left\{w_{t} | w_{t-1}, w_{t-2}, ..., w_{t-N+1}\right\} = \dfrac{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t}} + \delta}{c_{w_{t-N+1}, w_{t-N+2}, ..., w_{t-1}} + \delta m}\).
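
📗 The three estimators above differ only in the value of \(\delta\), so they can be sketched as one function. This is an illustrative sketch, assuming the document is a single list of tokens and that \(m\) is the number of unique tokens in it; the function names are hypothetical.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def smoothed_probability(tokens, context, word, delta=1.0):
    """Estimate P{word | context} with Laplace smoothing parameter delta.

    delta=1 is add-one smoothing; delta=0 is the unsmoothed estimator,
    which raises ZeroDivisionError when the context was never observed.
    """
    context = tuple(context)
    n = len(context) + 1
    vocabulary_size = len(set(tokens))  # m
    sequence_count = ngram_counts(tokens, n)[context + (word,)]
    # An empty context (unigram model) is "seen" once per token.
    context_count = ngram_counts(tokens, n - 1)[context] if context else len(tokens)
    return (sequence_count + delta) / (context_count + delta * vocabulary_size)

# Hypothetical toy document with m = 3 word types.
tokens = "a b a b a c".split()
seen = smoothed_probability(tokens, ("a", "b"), "a")            # (2 + 1) / (2 + 3)
unseen = smoothed_probability(tokens, ("c", "c"), "a")          # (0 + 1) / (0 + 3)
unsmoothed = smoothed_probability(tokens, ("a", "b"), "a", 0)   # 2 / 2
```

With smoothing, a context that never appears gives the uniform probability \(1/m\) instead of \(0/0\).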

TopHat Discussion

📗 Go to the Google Books Ngram Viewer: Link. Find a phrase with N-gram probability that is decreasing over time.

# Natural Language Processing

📗 When processing language data, documents need to be first turned into sequences of word tokens.

➩ Split the string by space and punctuation.

➩ Remove stop-words such as "the", "of", "a", "with".

➩ Convert all characters to lowercase.

➩ Stem or lemmatize words: change "looks", "looked", "looking" to "look".
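
📗 The preprocessing steps above can be sketched with the standard library only. The suffix-stripping rule is a crude, hypothetical stand-in for a real stemmer or lemmatizer, and the stop-word set contains only the examples listed above. Lowercasing is done first here so that stop-word matching is case-insensitive.

```python
import re

STOP_WORDS = {"the", "of", "a", "with"}  # real stop-word lists are much longer
SUFFIXES = ("ing", "ed", "s")            # hypothetical simplification of stemming

def naive_stem(word):
    """Strip one common suffix if the remaining stem has at least 3 letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

def tokenize(document):
    """Lowercase, split on spaces and punctuation, drop stop-words, stem."""
    words = re.findall(r"[a-z0-9]+", document.lower())
    return [naive_stem(word) for word in words if word not in STOP_WORDS]

tokens = tokenize("The dog looks, looked, and is looking!")
```

In this example "looks", "looked", and "looking" all become "look", and "The" is removed as a stop-word.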

📗 Each document needs to be converted into a numerical vector for supervised learning tasks.

➩ Bag of words feature uses the number of occurrences of each word type: Wikipedia.

➩ Term-Frequency Inverse-Document-Frequency (TF-IDF) feature adjusts for whether each word type appears in multiple documents: Wikipedia.

# Bag of Words Feature

📗 Given a document \(i \in \left\{1, 2, ..., n\right\}\) and a vocabulary of size \(m\), let \(c_{ij}\) be the number of times word \(j \in \left\{1, 2, ..., m\right\}\) appears in document \(i\). The bag of words representation of document \(i\) is \(x_{i} = \left(x_{i 1}, x_{i 2}, ..., x_{i m}\right)\), where \(x_{ij} = \dfrac{c_{ij}}{c_{i 1} + c_{i 2} + ... + c_{i m}}\).

📗 Sometimes, the features are not normalized, meaning \(x_{ij} = c_{ij}\).
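
📗 A minimal sketch of both variants, assuming each document is a non-empty list of tokens and the vocabulary is the sorted set of all tokens. The function name, the `normalize` flag, and the toy documents are hypothetical.

```python
from collections import Counter

def bag_of_words(documents, normalize=True):
    """Return (vocabulary, features); features[i][j] is x_ij for word j."""
    vocabulary = sorted({word for document in documents for word in document})
    features = []
    for document in documents:
        counts = Counter(document)
        # Normalized features divide by the document length c_i1 + ... + c_im.
        total = len(document) if normalize else 1
        features.append([counts[word] / total for word in vocabulary])
    return vocabulary, features

# Hypothetical toy documents (not course data).
documents = [["red", "red", "blue"], ["blue", "green"]]
vocabulary, normalized = bag_of_words(documents)
_, raw_counts = bag_of_words(documents, normalize=False)
```

The normalized features of each document sum to 1, while the unnormalized features are the raw counts \(c_{ij}\).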

# TF IDF Features

📗 Term frequency is defined the same way as in the bag of words features, \(T F_{ij} = \dfrac{c_{ij}}{c_{i 1} + c_{i 2} + ... + c_{i m}}\).

📗 Inverse document frequency is defined as \(I D F_{j} = \log \left(\dfrac{n}{\left| \left\{i : c_{ij} > 0\right\} \right|}\right)\), where \(\left| \left\{i : c_{ij} > 0\right\} \right|\) is the number of documents that contain word \(j\).

📗 TF IDF representation of document \(i\) is \(x_{i} = \left(x_{i 1}, x_{i 2}, ..., x_{i m}\right)\), where \(x_{ij} = T F_{ij} \cdot I D F_{j}\).
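
📗 A minimal sketch of the TF-IDF features, assuming each document is a non-empty list of tokens and that \(\log\) is the natural logarithm (the base is not specified above). The function name and the toy documents are hypothetical.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return (vocabulary, features) with features[i][j] = TF_ij * IDF_j."""
    vocabulary = sorted({word for document in documents for word in document})
    n = len(documents)
    # Number of documents that contain each word, |{i : c_ij > 0}|.
    document_frequency = {
        word: sum(word in document for document in documents)
        for word in vocabulary
    }
    idf = {word: math.log(n / document_frequency[word]) for word in vocabulary}
    features = []
    for document in documents:
        counts = Counter(document)
        features.append(
            [counts[word] / len(document) * idf[word] for word in vocabulary]
        )
    return vocabulary, features

# Hypothetical toy documents (not course data).
documents = [["red", "red", "blue"], ["blue", "green"]]
vocabulary, features = tf_idf(documents)
```

The word "blue" appears in every document, so its inverse document frequency is \(\log 1 = 0\) and its TF-IDF feature is 0 in both documents.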

TopHat Quiz

📗 [1 point] Given three documents "Guardians of the Galaxy", "Guardians of the Galaxy Vol. 2", "Guardians of the Galaxy Vol. 3", compute the bag of words features and the TF-IDF features of the 3 documents.

| Document | Phrase | Number of times |
| --- | --- | --- |
| "Guardians of the Galaxy" | "I am Groot" | 13 |
| - | "We are Groot" | 1 |
| "Guardians of the Galaxy Vol. 2" | "I am Groot" | 17 |
| "Guardians of the Galaxy Vol. 3" | "I am Groot" | 13 |
| - | "I love you guys" | 1 |

📗 Answer:

# Supervised Learning Tasks

📗 If the documents are labeled, then a supervised learning task is: given a training set of document features (for example, bag of words, TF-IDF) and their labels, estimate a function that predicts the label for new documents.

➩ Given emails, predict whether they are spam or ham.

➩ Given comments, predict whether they are offensive or not.

➩ Given reviews, predict whether they are positive or negative.

➩ Given essays, predict the grade A, B, ... or F.

➩ Given documents, predict which language each is written in.

📗 If the training set is \(\left(X, Y\right)\), where \(X = \left(x_{1}, x_{2}, ..., x_{n}\right)\) are features of the documents, and \(Y = \left(y_{1}, y_{2}, ..., y_{n}\right)\) are labels, then the problem is to estimate \(\hat{\mathbb{P}}\left\{y | x\right\}\), and given a new document \(x'\), the predicted label can be the \(y'\) that maximizes \(\hat{\mathbb{P}}\left\{y' | x'\right\}\).

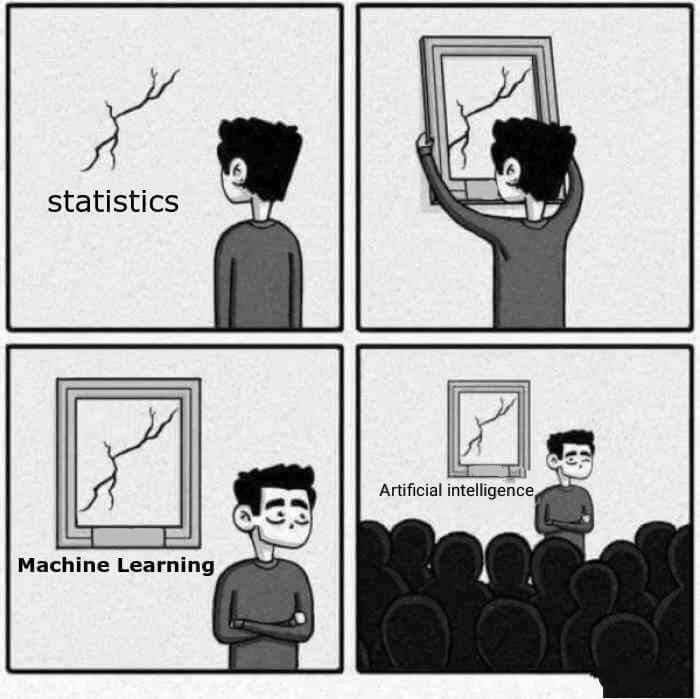

# Discriminative vs Generative Models

📗 Discriminative models directly estimate the probabilities \(\hat{\mathbb{P}}\left\{y | x\right\}\).

📗 Generative models estimate the likelihood probabilities \(\hat{\mathbb{P}}\left\{x | y\right\}\) and the prior probabilities \(\hat{\mathbb{P}}\left\{y\right\}\), then compute \(\hat{\mathbb{P}}\left\{y | x\right\} = \dfrac{\hat{\mathbb{P}}\left\{x | y\right\} \cdot \hat{\mathbb{P}}\left\{y\right\}}{\hat{\mathbb{P}}\left\{x | y = 1\right\} \cdot \hat{\mathbb{P}}\left\{y = 1\right\} + \hat{\mathbb{P}}\left\{x | y = 2\right\} \cdot \hat{\mathbb{P}}\left\{y = 2\right\} + ... + \hat{\mathbb{P}}\left\{x | y = k\right\} \cdot \hat{\mathbb{P}}\left\{y = k\right\}}\) using Bayes rule.
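
📗 The Bayes rule computation can be sketched as follows. The function name and the likelihood and prior values are hypothetical and chosen only so the arithmetic is easy to check by hand.

```python
def posterior(likelihoods, priors):
    """Bayes rule: likelihoods[y] = P{x | y}, priors[y] = P{y}; returns P{y | x}."""
    joint = {label: likelihoods[label] * priors[label] for label in priors}
    evidence = sum(joint.values())  # the denominator, summed over all labels
    return {label: value / evidence for label, value in joint.items()}

# Hypothetical values: P{x | y = 0} = 0.4, P{x | y = 1} = 0.8, equal priors.
result = posterior({0: 0.4, 1: 0.8}, {0: 0.5, 1: 0.5})
predicted_label = max(result, key=result.get)
```

Here the posterior of label 1 is \(0.4 / \left(0.2 + 0.4\right) = 2/3\), so label 1 is predicted.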

TopHat Quiz

📗 [1 point] Consider the AmongUs ඞ example on Wikipedia (Image): what are the probabilities \(\mathbb{P}\left\{y = 0 | x = 1\right\}\) and \(\mathbb{P}\left\{y = 1 | x = 1\right\}\)?

| Number of occurrences | Being suspicious \(x = 1\) | Not being suspicious \(x = 0\) |
| --- | --- | --- |
| An assassin \(y = 1\) | 3 | 1 |
| Not an assassin \(y = 0\) | 2 | 6 |

📗 Answer:

# Naive Bayes Classifier

📗 Naive Bayes classifier is a simple Bayesian network that assumes the features are conditionally independent given the label: Wikipedia.

📗 The key assumption is the conditional independence assumption: \(\mathbb{P}\left\{x_{i} | y\right\} = \mathbb{P}\left\{x_{i 1}, x_{i 2}, ..., x_{i m} | y\right\} = \mathbb{P}\left\{x_{i 1} | y\right\} \mathbb{P}\left\{x_{i 2} | y\right\} ... \mathbb{P}\left\{x_{i m} | y\right\}\).
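
📗 Combining this factorization with Bayes rule gives the classifier. Below is a minimal sketch for a binary label and binary features; the function name and all probability values are hypothetical.

```python
def naive_bayes_posterior(prior_positive, p_given_negative, p_given_positive, features):
    """Return P{y = 1 | features} for binary features.

    p_given_negative[j] = P{x_j = 1 | y = 0}
    p_given_positive[j] = P{x_j = 1 | y = 1}
    """
    def likelihood(probabilities):
        # Product over features of P{x_j | y}, using 1 - p when x_j = 0.
        result = 1.0
        for p, value in zip(probabilities, features):
            result *= p if value == 1 else 1 - p
        return result

    positive = prior_positive * likelihood(p_given_positive)
    negative = (1 - prior_positive) * likelihood(p_given_negative)
    return positive / (positive + negative)

# Hypothetical conditional probabilities for two binary features.
value = naive_bayes_posterior(0.5, [0.2, 0.5], [0.8, 0.5], [1, 0])
```

With these numbers the numerator is \(0.5 \cdot 0.8 \cdot 0.5 = 0.2\) and the other term in the denominator is \(0.5 \cdot 0.2 \cdot 0.5 = 0.05\), so the posterior is \(0.2 / 0.25 = 0.8\).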

TopHat Quiz

📗 (Past Exam Question) [4 points] Consider the problem of detecting whether an email message is spam. Say we use four random variables to model this problem: a binary class variable \(S\) indicating whether the message is spam, and three binary feature variables \(C, F, N\) indicating whether the message contains "Cash", "Free", "Now". We use a Naive Bayes classifier with the associated CPTs (Conditional Probability Tables):

| Prior | \(\mathbb{P}\left\{S = 1\right\}\) = | - | - |

| Hams | \(\mathbb{P}\left\{C = 1 | S = 0\right\}\) = | \(\mathbb{P}\left\{F = 1 | S = 0\right\}\) = | \(\mathbb{P}\left\{N = 1 | S = 0\right\}\) = |

| Spams | \(\mathbb{P}\left\{C = 1 | S = 1\right\}\) = | \(\mathbb{P}\left\{F = 1 | S = 1\right\}\) = | \(\mathbb{P}\left\{N = 1 | S = 1\right\}\) = |

Compute \(\mathbb{P}\left\{S = 1 | C = \_\_, F = \_\_, N = \_\_\right\}\).

📗 Answer: .

# Other Naive Bayes Models

📗 There are other common Naive Bayes models including multinomial naive Bayes (used when the features are bag of words without normalization) and Gaussian naive Bayes (used when the features are continuous).

📗 If the naive Bayes independence assumption is relaxed, the resulting more general model is called a Bayesian network (or Bayes network).

Additional Note

📗 If the features are bag of words (without normalization), then a common model of \(\mathbb{P}\left\{x_{i} | y\right\}\) is the multinomial model with unigram probabilities for each label: \(\mathbb{P}\left\{x_{i} | y\right\} = \dfrac{\left(x_{i 1} + x_{i 2} + ... + x_{i m}\right)!}{x_{i 1}! x_{i 2}! ... x_{i m}!} p_{y 1}^{x_{i 1}} p_{y 2}^{x_{i 2}} ... p_{y m}^{x_{i m}}\), where \(p_{y j}\) is the unigram probability that word \(j\) appears in a document with label \(y\).

➩ A special case when \(x_{ij}\) is binary or \(x_{ij} = 0, 1\), for example, whether a document contains a word type, is called Bernoulli naive Bayes.

➩ Technically, in the multinomial distribution, \(x_{i 1}, x_{i 2}, ..., x_{i m}\) are not independent due to the \(\left(x_{i 1} + x_{i 2} + ... + x_{i m}\right)!\), but the multinomial naive Bayes model is still considered "naive".

➩ Multinomial naive Bayes is considered a linear model since the log posterior distribution is linear in the features: \(\log \mathbb{P}\left\{y | x_{i}\right\} = c + \log \mathbb{P}\left\{y\right\} + x_{i 1} \log p_{y 1} + x_{i 2} \log p_{y 2} + ... + x_{i m} \log p_{y m}\), where \(c\) is a constant that does not depend on \(y\).
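
📗 Because the constant \(c\) does not change which label has the largest posterior, prediction only needs the linear part. A minimal sketch, assuming all unigram probabilities are positive (for example, after Laplace smoothing); the function names and the probability values are hypothetical.

```python
import math

def multinomial_log_score(counts, prior, word_probabilities):
    """log P{y} + sum_j x_ij log p_yj, the label-dependent part of the log posterior."""
    return math.log(prior) + sum(
        x * math.log(p) for x, p in zip(counts, word_probabilities)
    )

def predict(counts, priors, word_probabilities):
    """Return the label with the largest linear score."""
    scores = {
        label: multinomial_log_score(counts, priors[label], word_probabilities[label])
        for label in priors
    }
    return max(scores, key=scores.get)

# Hypothetical two-word vocabulary with per-label unigram probabilities.
priors = {"spam": 0.5, "ham": 0.5}
word_probabilities = {"spam": [0.7, 0.3], "ham": [0.2, 0.8]}
first = predict([3, 1], priors, word_probabilities)
second = predict([0, 4], priors, word_probabilities)
```

The score is a weighted sum of the counts \(x_{ij}\) with weights \(\log p_{y j}\), which is what makes the model linear in the features.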

📗 If the features are continuous (not binary or integer counts), then a common model is the Gaussian naive Bayes model: \(\mathbb{P}\left\{x_{i} | y\right\} = \mathbb{P}\left\{x_{i 1} | y\right\} \mathbb{P}\left\{x_{i 2} | y\right\} ... \mathbb{P}\left\{x_{i m} | y\right\}\), where \(\mathbb{P}\left\{x_{ij} | y\right\} = \dfrac{1}{\sqrt{2 \pi \sigma_{y j}^{2}}} e^{- \dfrac{\left(x_{ij} - \mu_{y j}\right)^{2}}{2 \sigma_{y j}^{2}}}\), where \(\mu_{y j}\) is the mean of feature \(j\) for documents with label \(y\), and \(\sigma^{2}_{y j}\) is the variance.

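➩ The maximum likelihood estimate of \(\mu_{y j}\) is the sample mean of feature \(j\) over documents with label \(y\), and the maximum likelihood estimate of \(\sigma^{2}_{y j}\) is the corresponding sample variance (dividing by the number of documents with label \(y\)).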
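
📗 A minimal sketch of the Gaussian pieces for a single feature and a single label, assuming the feature values for that label are given as a non-empty list with nonzero variance. The function names and toy values are hypothetical.

```python
import math

def fit_gaussian(values):
    """Maximum likelihood mean and variance (the variance divides by n, not n - 1)."""
    mean = sum(values) / len(values)
    variance = sum((value - mean) ** 2 for value in values) / len(values)
    return mean, variance

def gaussian_density(x, mean, variance):
    """Density of a normal distribution with the given mean and variance at x."""
    return math.exp(-((x - mean) ** 2) / (2 * variance)) / math.sqrt(
        2 * math.pi * variance
    )

# Hypothetical values of one feature for documents with one label.
mean, variance = fit_gaussian([1.0, 2.0, 3.0])
density_at_mean = gaussian_density(2.0, mean, variance)
```

For a full classifier, the densities of all features would be multiplied together with the prior of each label, as in the factorization above.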


📗 Notes and code adapted from the course taught by Professors Jerry Zhu, Yingyu Liang, and Charles Dyer.


Last Updated: January 19, 2026 at 9:18 PM