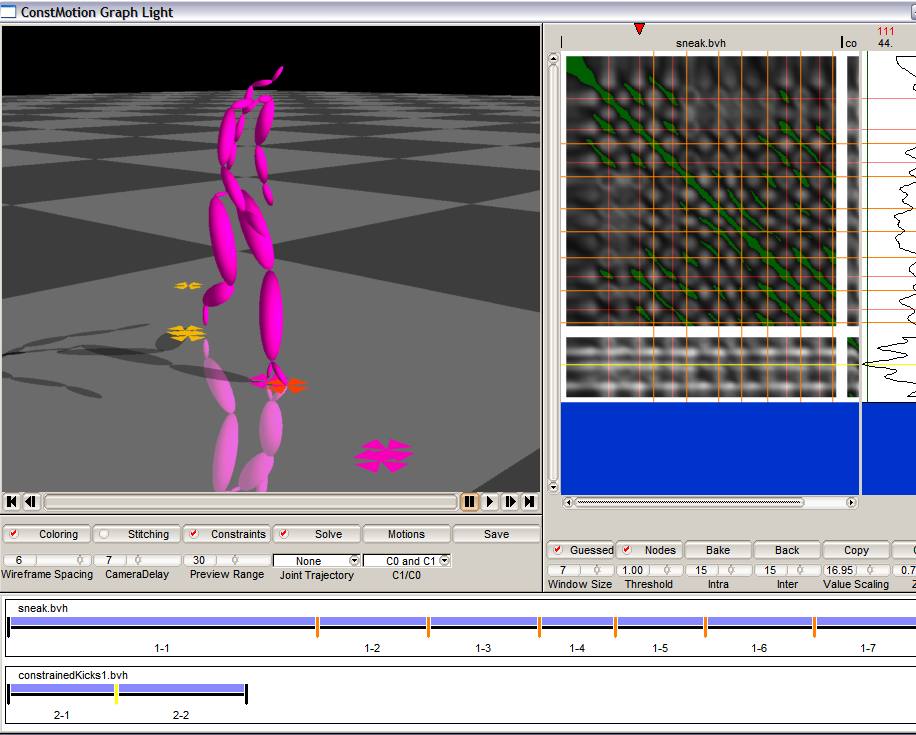

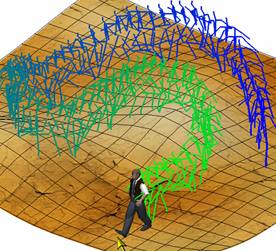

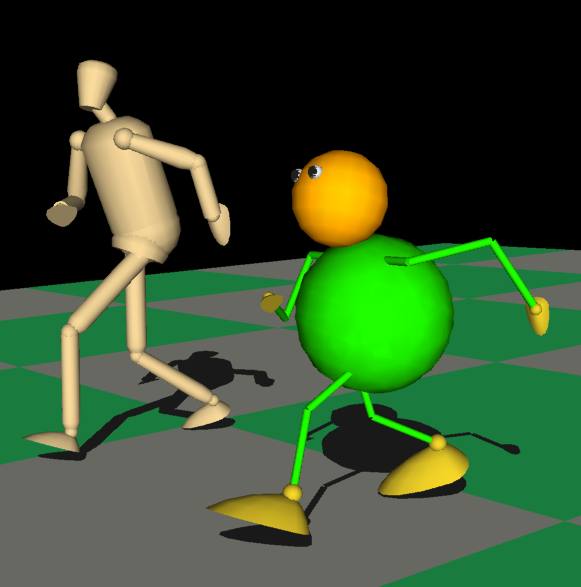

The goal of this project is to improve the physical feasibility of edited motions. Building on the ideas of hierarchical displacement maps and approximation, we developed an algorithm that modifies an edited motion so that it obeys physical laws. We incorporate the concept of the zero moment point (ZMP) together with the conservation of linear and angular momentum. Our approach is more efficient than previous work and also gives easy control for modeling human behaviors.
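As a rough illustration of the ZMP idea mentioned above, the sketch below computes the zero moment point of a character approximated as a set of point masses. This is not the implementation from the paper: the function name `zero_moment_point`, the z-up coordinate convention, and the point-mass simplification (which ignores the rotational inertia of each body segment) are all assumptions made for this example.

```python
G = 9.81  # gravitational acceleration in m/s^2; assumes the z axis points up


def zero_moment_point(masses, positions, accelerations, g=G):
    """Return the (x, y) ground-plane ZMP of a set of point masses.

    Hypothetical helper for illustration only.
    masses:        list of floats (kg)
    positions:     list of (x, y, z) tuples (m), ground at z = 0
    accelerations: list of (ax, ay, az) tuples (m/s^2)
    """
    # Total vertical force the ground must supply.
    denom = sum(m * (a[2] + g) for m, a in zip(masses, accelerations))
    if abs(denom) < 1e-9:
        # In free fall there is no ground reaction, so the ZMP is undefined.
        raise ValueError("no ground reaction force; ZMP is undefined")

    # Moment balance about the ground plane, one horizontal axis at a time.
    x = sum(
        m * ((a[2] + g) * p[0] - a[0] * p[2])
        for m, p, a in zip(masses, positions, accelerations)
    ) / denom
    y = sum(
        m * ((a[2] + g) * p[1] - a[1] * p[2])
        for m, p, a in zip(masses, positions, accelerations)
    ) / denom
    return x, y
```

For a motion to be physically plausible while the character is supported by the ground, a point computed this way would be expected to stay inside the support polygon formed by the feet; when the mass is at rest, the result reduces to the ground projection of the center of mass.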

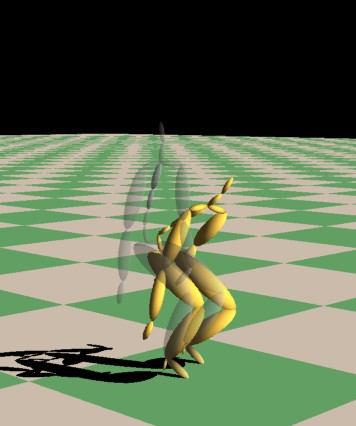

The figure on the right shows a scene of physical touch-up, in which the character is just landing after a jump. The original motion was generated by stitching together jumping and walking motions. Our touch-up introduces a forward lean, which makes the motion look more realistic.

Here you can download our video files encoded with MS MPEG4 V2.

One big file (12M)

Pieces: 1st (4.2M), 2nd (4.0M), 3rd (3.9M), 4th (5.8M), 5th (3.5M)

A paper about this project has also been published:

Hyun Joon Shin, Lucas Kovar, and Michael Gleicher. Physical Touch-up of Human Motions. In Proceedings of Pacific Graphics 2003, to appear, 2003.