
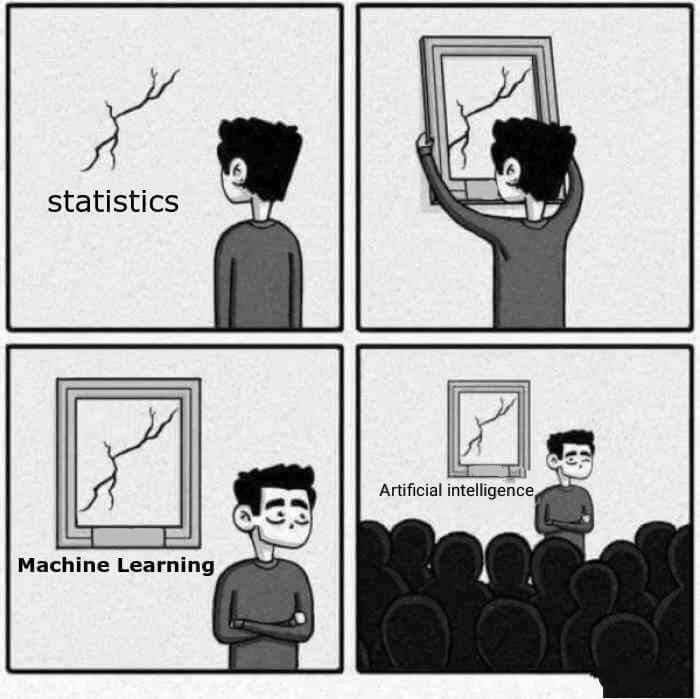

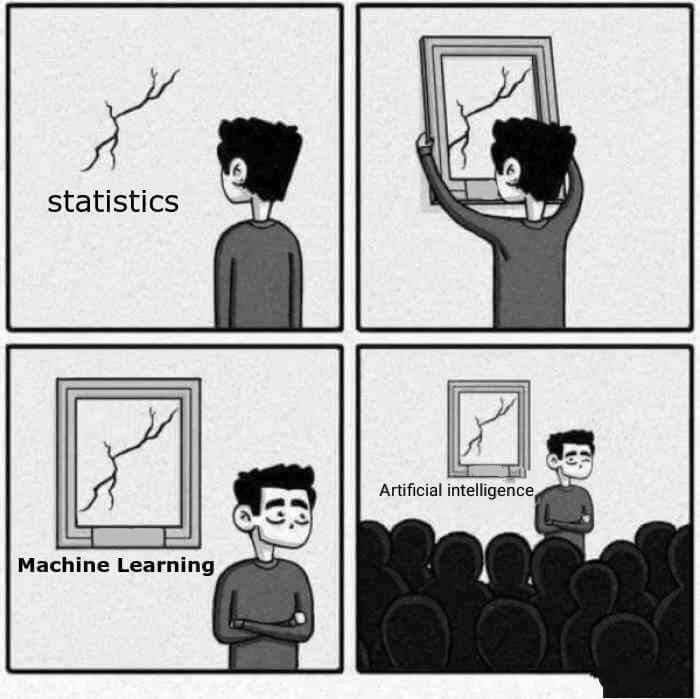

Image by sandserifcomics via towards data science


# Summary

📗 Tuesday to Friday lectures: 1:00 to 2:15, Zoom Link

📗 Monday to Saturday office hours: Zoom Link

📗 Personal meeting room: always open, Zoom Link

📗 Math Homework:

M1,

M2,

M3,

📗 Programming Homework:

P1,

📗 Examples and Quizzes:

Q1,

Q2,

Q3,

Q4,

📗 Discussions:

D1,

D2,

# Lectures

📗 Slides (will be posted before lecture, usually updated on Monday):

Blank Slides:

Part 1: PDF,

Part 2: PDF,

Part 3: PDF,

Part 4: PDF,

📗 The annotated lecture slides will not be posted this year: please copy down the notes during the lecture or from the Zoom recording.

📗 Notes

Image by sandserifcomics via towards data science

# Other Materials

📗 Pre-recorded videos from 2020

Lecture 1 Part 1 (Admin, 2021): Link and Link

Lecture 1 Part 2 (Supervised learning): Link

Lecture 1 Part 3 (Perceptron learning): Link

Lecture 2 Part 1 (Loss functions): Link

Lecture 2 Part 2 (Logistic regression): Link

Lecture 2 Part 3 (Convexity): Link

Lecture 3 Part 1 (Neural Network): Link

Lecture 3 Part 2 (Backpropagation): Link

Lecture 3 Part 3 (Multi-Layer Network): Link

Lecture 4 Part 1 (Stochastic Gradient): Link

Lecture 4 Part 2 (Multi-Class Classification): Link

Lecture 4 Part 3 (Regularization): Link

📗 Relevant websites

Which face is real? Link

This X does not exist: Link

Turtle or Rifle: Link

Art or garbage game: Link

Guess two-thirds of the average? Link

Gradient Descent: Link

Optimization: Link

Neural Network: Link

Generative Adversarial Net: Link

Neural Network: Link

Another Neural Network Demo: Link

Neural Network Videos by Grant Sanderson: Playlist

MNIST Neural Network Visualization: Link

Neural Network Simulator: Link

Overfitting: Link

Neural Network Snake: Video

Neural Network Car: Video

Neural Network Flappy Bird: Video

Neural Network Mario: Video

MyScript: algorithm Link demo Link

Maple Calculator: Link

📗 YouTube videos from previous summers

📗 Perceptron:

Why does the (batch) perceptron algorithm work? Link

Why can't linear regression be used for binary classification? Link

How to use Perceptron update formula? Link

How to find the size of the hypothesis space for linear classifiers? Link (Part 1)

📗 Gradient Descent:

Why does gradient descent work? Link

Computation of Hessian of quadratic form Link

Computation of eigenvalues Link

Gradient descent for linear regression Link

What is the gradient descent step for cross-entropy loss with linear activation? Link (Part 1)

What is the sign of the next gradient descent step? Link (Part 2)

Which loss functions are equivalent to squared loss? Link (Part 3)

How to compute the gradient of the cross-entropy loss with linear activation? Link (Part 4)

How to find the location that minimizes the distance to multiple points? Link (Part 3)

📗 Logistic Regression:

How to derive logistic regression gradient descent step formula? Link

Gradient descent for logistic activation with squared error Link

How to compute logistic gradient descent? Link

📗 Neural Network:

How to construct XOR network? Link

How to derive 2-layer neural network gradient descent step? Link

How to derive multi-layer neural network gradient descent induction step? Link

How to find the missing weights in neural network given data set? Link

How many weights are used in one step of backpropagation? Link (Part 2)

📗 Regularization:

Comparison between L1 and L2 regularization. Link

How to compute cross validation accuracy? Link

📗 Math and Statistics Review

Checklist: Link, "math crib sheet": Link

Multivariate Calculus: Textbook, Chapter 16 and/or (Economics) Tutorials, Chapters 2 and 3.

Linear Algebra: Textbook, Chapters on Determinant and Eigenvalue.

Probability and Statistics: Textbook, Chapters 3, 4, 5.

# Keywords and Notations

📗 Supervised Learning:

Training item: \(\left(x_{i}, y_{i}\right)\), where \(i \in \left\{1, 2, ..., n\right\}\) is the instance index, \(x_{ij}\) is the feature \(j\) of instance \(i\), \(j \in \left\{1, 2, ..., m\right\}\) is the feature index, \(x_{i} = \left(x_{i1}, x_{i2}, ..., x_{im}\right)\) is the feature vector of instance \(i\), and \(y_{i}\) is the true label of instance \(i\).

Test item: \(\left(x', y'\right)\), where \(x'_{j}\) is the feature \(j\) of the test item and \(j \in \left\{1, 2, ..., m\right\}\) is the feature index.

📗 Linear Threshold Unit, Linear Perceptron:

LTU Classifier: \(\hat{y}_{i} = 1_{\left\{w^\top x_{i} + b \geq 0\right\}}\), where \(w = \left(w_{1}, w_{2}, ..., w_{m}\right)\) is the weight vector, \(b\) is the bias, \(x_{i} = \left(x_{i1}, x_{i2}, ..., x_{im}\right)\) is the feature vector of instance \(i\), and \(\hat{y}_{i}\) is the predicted label of instance \(i\).

Perceptron algorithm update step: \(w = w - \alpha \left(a_{i} - y_{i}\right) x_{i}\), \(b = b - \alpha \left(a_{i} - y_{i}\right)\), \(a_{i} = 1_{\left\{w^\top x_{i} + b \geq 0\right\}}\), where \(a_{i}\) is the activation value of instance \(i\) and \(\alpha\) is the learning rate.
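The perceptron update above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function names, the zero initialization, the fixed number of passes, and the AND toy data are hypothetical choices, not taken from the lectures.

```python
def ltu(w, b, x):
    """Linear threshold unit: 1 if w^T x + b >= 0, else 0."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else 0


def perceptron_step(w, b, x, y, alpha):
    """One update: w = w - alpha (a - y) x, b = b - alpha (a - y)."""
    a = ltu(w, b, x)
    new_w = [wj - alpha * (a - y) * xj for wj, xj in zip(w, x)]
    new_b = b - alpha * (a - y)
    return new_w, new_b


def train_perceptron(data, alpha=1.0, epochs=20):
    """Cycle through the training items for a fixed number of passes."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            w, b = perceptron_step(w, b, x, y, alpha)
    return w, b


# Hypothetical toy data: the AND function, which is linearly separable.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_data)
predictions = [ltu(w, b, x) for x, _ in and_data]
```

When the prediction is already correct, \(a_{i} - y_{i} = 0\) and the step leaves \(w\) and \(b\) unchanged, so only misclassified items move the decision boundary.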

📗 Loss Function:

Zero-one loss minimization: \(\hat{f} = \mathop{\mathrm{argmin}}_{f \in \mathcal{H}} \displaystyle\sum_{i=1}^{n} 1_{\left\{f\left(x_{i}\right) \neq y_{i}\right\}}\), where \(\hat{f}\) is the optimal classifier, \(\mathcal{H}\) is the hypothesis space (set of functions to choose from).

Squared loss minimization of perceptrons: \(\left(\hat{w}, \hat{b}\right) = \mathop{\mathrm{argmin}}_{w, b} \dfrac{1}{2} \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2}\), \(a_{i} = g\left(w^\top x_{i} + b\right)\), where \(\hat{w}\) is the optimal weight vector, \(\hat{b}\) is the optimal bias, \(g\) is the activation function.
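As a rough illustration of the two objectives above, the sketch below evaluates the zero-one loss of a fixed classifier and the squared loss of a unit with activation \(g\). The helper names, the toy data, and the identity activation used for the squared loss are assumptions made for the example.

```python
def zero_one_loss(f, data):
    """Number of training items that classifier f labels incorrectly."""
    return sum(1 for x, y in data if f(x) != y)


def squared_loss(w, b, g, data):
    """(1/2) * sum_i (a_i - y_i)^2 with a_i = g(w^T x_i + b)."""
    total = 0.0
    for x, y in data:
        a = g(sum(wj * xj for wj, xj in zip(w, x)) + b)
        total += (a - y) ** 2
    return 0.5 * total


# Hypothetical one-feature data set.
data = [([0.0], 0), ([1.0], 1), ([2.0], 1)]


def threshold_classifier(x):
    """An LTU with w = (1), b = -1.5, chosen only for illustration."""
    return 1 if x[0] - 1.5 >= 0 else 0


mistakes = zero_one_loss(threshold_classifier, data)
loss = squared_loss([1.0], 0.0, lambda z: z, data)
```

The zero-one loss only counts mistakes and is piecewise constant in \(w\) and \(b\), whereas the squared loss varies smoothly with them whenever \(g\) is differentiable.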

📗 Logistic Regression:

Logistic regression classifier: \(\hat{y}_{i} = 1_{\left\{a_{i} \geq 0.5\right\}}\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\).

Loss minimization problem: \(\left(\hat{w}, \hat{b}\right) = \mathop{\mathrm{argmin}}_{w, b} -\displaystyle\sum_{i=1}^{n} \left(y_{i} \log\left(a_{i}\right) + \left(1 - y_{i}\right) \log\left(1 - a_{i}\right)\right)\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\).

Batch gradient descent step: \(w = w - \alpha \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right) x_{i}\), \(b = b - \alpha \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\), where \(\alpha\) is the learning rate.
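A minimal sketch of the batch step above, using only the Python standard library. The function names, the toy data, the learning rate of 0.1, and the 200 iterations are assumptions chosen for illustration; the update itself follows the formula term by term.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def activations(w, b, xs):
    """a_i = sigmoid(w^T x_i + b) for every instance."""
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in xs]


def cross_entropy(w, b, xs, ys):
    """Negative log-likelihood summed over the training set."""
    a = activations(w, b, xs)
    return -sum(y * math.log(ai) + (1 - y) * math.log(1 - ai)
                for ai, y in zip(a, ys))


def batch_step(w, b, xs, ys, alpha):
    """w = w - alpha * sum_i (a_i - y_i) x_i, b = b - alpha * sum_i (a_i - y_i)."""
    a = activations(w, b, xs)
    errors = [ai - yi for ai, yi in zip(a, ys)]
    new_w = [wj - alpha * sum(e * x[j] for e, x in zip(errors, xs))
             for j, wj in enumerate(w)]
    new_b = b - alpha * sum(errors)
    return new_w, new_b


# Hypothetical one-feature data set.
xs = [[0.0], [1.0], [2.0], [3.0]]
ys = [0, 0, 1, 1]
w, b = [0.0], 0.0
loss_before = cross_entropy(w, b, xs, ys)
for _ in range(200):
    w, b = batch_step(w, b, xs, ys, alpha=0.1)
loss_after = cross_entropy(w, b, xs, ys)
```

Every activation is computed from the current \(w\) and \(b\) before either is changed, which is what makes this a batch step rather than a sequence of per-instance updates.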

📗 Neural Network:

Neural network classifier for two layer network with logistic activation: \(\hat{y}_{i} = 1_{\left\{a^{\left(2\right)}_{i} \geq 0.5\right\}}\).

\(a^{\left(1\right)}_{ij} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j'=1}^{m} x_{ij'} w^{\left(1\right)}_{j'j}\right) + b^{\left(1\right)}_{j}\right)\right)}\), where \(m\) is the number of features (or input units), \(w^{\left(1\right)}_{j' j}\) is the layer \(1\) weight from input unit \(j'\) to hidden layer unit \(j\), \(b^{\left(1\right)}_{j}\) is the bias for hidden layer unit \(j\), \(a_{ij}^{\left(1\right)}\) is the layer \(1\) activation of instance \(i\) hidden unit \(j\).

\(a^{\left(2\right)}_{i} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j=1}^{h} a^{\left(1\right)}_{ij} w^{\left(2\right)}_{j}\right) + b^{\left(2\right)}\right)\right)}\), where \(h\) is the number of hidden units, \(w^{\left(2\right)}_{j}\) is the layer \(2\) weight from hidden layer unit \(j\), \(b^{\left(2\right)}\) is the bias for the output unit, \(a^{\left(2\right)}_{i}\) is the layer \(2\) activation of instance \(i\).
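The two activation formulas above amount to a forward pass, sketched here in plain Python. The nested-list layout `W1[jp][j]` (input unit `jp` to hidden unit `j`) and the hand-picked weights, which happen to make the network compute XOR, are hypothetical choices for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, w2, b2):
    """Return the hidden activations a1 and the output activation a2."""
    m, h = len(x), len(b1)
    a1 = [sigmoid(sum(x[jp] * W1[jp][j] for jp in range(m)) + b1[j])
          for j in range(h)]
    a2 = sigmoid(sum(a1[j] * w2[j] for j in range(h)) + b2)
    return a1, a2


def predict(x, W1, b1, w2, b2):
    """Predicted label: 1 if the output activation is at least 0.5."""
    _, a2 = forward(x, W1, b1, w2, b2)
    return 1 if a2 >= 0.5 else 0


# Hand-picked (hypothetical) weights: hidden unit 0 acts like OR,
# hidden unit 1 like NAND, and the output unit like AND of the two.
W1 = [[20.0, -20.0], [20.0, -20.0]]
b1 = [-10.0, 30.0]
w2 = [20.0, 20.0]
b2 = -30.0

inputs = [[0, 0], [0, 1], [1, 0], [1, 1]]
labels = [predict(x, W1, b1, w2, b2) for x in inputs]
```

With these weights a single hidden layer of two units is enough to separate XOR, which no single linear threshold unit can do.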

Stochastic gradient descent step for two layer network with squared loss and logistic activation:

\(w^{\left(1\right)}_{j' j} \leftarrow w^{\left(1\right)}_{j' j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right) x_{ij'}\).

\(b^{\left(1\right)}_{j} \leftarrow b^{\left(1\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right)\).

\(w^{\left(2\right)}_{j} \leftarrow w^{\left(2\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) a_{ij}^{\left(1\right)}\).

\(b^{\left(2\right)} \leftarrow b^{\left(2\right)} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right)\).
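The four updates above share the factor \(\left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right)\), so a sketch can compute it once and reuse it. The names `delta2` and `delta1`, the weight layout `W1[jp][j]`, and the starting weights below are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, w2, b2):
    m, h = len(x), len(b1)
    a1 = [sigmoid(sum(x[jp] * W1[jp][j] for jp in range(m)) + b1[j])
          for j in range(h)]
    a2 = sigmoid(sum(a1[j] * w2[j] for j in range(h)) + b2)
    return a1, a2


def sgd_step(x, y, W1, b1, w2, b2, alpha):
    """One stochastic gradient step on a single instance (x, y)."""
    a1, a2 = forward(x, W1, b1, w2, b2)
    m, h = len(x), len(b1)
    # Shared output-layer factor: (a2 - y) a2 (1 - a2).
    delta2 = (a2 - y) * a2 * (1 - a2)
    # Hidden-layer factor, using the layer 2 weights from before the update.
    delta1 = [delta2 * w2[j] * a1[j] * (1 - a1[j]) for j in range(h)]
    new_W1 = [[W1[jp][j] - alpha * delta1[j] * x[jp] for j in range(h)]
              for jp in range(m)]
    new_b1 = [b1[j] - alpha * delta1[j] for j in range(h)]
    new_w2 = [w2[j] - alpha * delta2 * a1[j] for j in range(h)]
    new_b2 = b2 - alpha * delta2
    return new_W1, new_b1, new_w2, new_b2


# Hypothetical instance and starting weights.
x, y = [1.0, 0.0], 1
W1 = [[0.1, -0.2], [0.3, 0.4]]
b1 = [0.0, 0.0]
w2 = [0.5, -0.5]
b2 = 0.0

loss_before = 0.5 * (forward(x, W1, b1, w2, b2)[1] - y) ** 2
new_W1, new_b1, new_w2, new_b2 = sgd_step(x, y, W1, b1, w2, b2, alpha=0.5)
loss_after = 0.5 * (forward(x, new_W1, new_b1, new_w2, new_b2)[1] - y) ** 2
```

Because \(x_{ij'}\) multiplies the layer 1 weight update, weights leaving an input unit whose feature value is zero are not changed by that instance.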

📗 Multiple Classes:

Softmax activation for one layer networks: \(a_{ik} = \dfrac{\exp\left(- \left(w_{k}^\top x_{i} + b_{k}\right)\right)}{\displaystyle\sum_{k' = 1}^{K} \exp\left(- \left(w_{k'}^\top x_{i} + b_{k'}\right)\right)}\), where \(K\) is the number of classes (number of possible labels), \(a_{i k}\) is the activation of the output unit \(k\) for instance \(i\), \(y_{i k}\) is component \(k\) of the one-hot encoding of the label for instance \(i\).

📗 Regularization:

L1 regularization (squared loss): \(\displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2} + \lambda \left(\displaystyle\sum_{j=1}^{m} \left| w_{j} \right| + \left| b \right|\right)\), where \(\lambda\) is the regularization parameter.

L2 regularization (squared loss): \(\displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2} + \lambda \left(\displaystyle\sum_{j=1}^{m} \left(w_{j}\right)^{2} + b^{2}\right)\).
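To make the two penalties concrete, the sketch below evaluates both regularized objectives for given activations. The function name, the `kind` argument, and the numbers are hypothetical; as in the formulas above, the bias is included in the penalty.

```python
def regularized_loss(a, y, w, b, lam, kind):
    """Squared loss plus lam times an L1 or L2 penalty on the weights and bias."""
    data_term = sum((ai - yi) ** 2 for ai, yi in zip(a, y))
    if kind == "l1":
        penalty = sum(abs(wj) for wj in w) + abs(b)
    elif kind == "l2":
        penalty = sum(wj ** 2 for wj in w) + b ** 2
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return data_term + lam * penalty


# Hypothetical activations, labels, and parameters.
a = [0.5, 1.0]
y = [0, 1]
w = [3.0, -4.0]
b = 1.0

l1_value = regularized_loss(a, y, w, b, lam=0.1, kind="l1")
l2_value = regularized_loss(a, y, w, b, lam=0.1, kind="l2")
```

Here the data term is 0.25 in both cases; the L1 penalty is \(3 + 4 + 1 = 8\) and the L2 penalty is \(9 + 16 + 1 = 26\), so large weights are penalized much more heavily under L2.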

Last Updated: January 19, 2026 at 9:18 PM