Previous Projects

Machine teaching

What if there is a teacher who knows the learning goal (such as a target model) and wants to design the optimal (for example, the smallest) training set for a learner? This is the question of designing the best "lesson," and it is the inverse problem of machine learning. Machine teaching has obvious applications in education and cognitive psychology, as well as potential applications in computer security. The optimal training set is usually not an i.i.d. sample, but rather requires combinatorial search. Finding the optimal training set is in general a difficult bilevel optimization problem, though in certain cases there are tractable solutions. [project website]
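As a rough illustration of the combinatorial search mentioned above, here is a minimal sketch in Python. Everything in it is an assumption made for the example, not the project's method: the learner is a hypothetical 1D threshold classifier that returns the midpoint between its largest negative and smallest positive example, and the teacher (`teach`) simply brute-forces subsets of a candidate pool in order of increasing size until the learner lands within `tol` of the target threshold.

```python
from itertools import combinations


def learner(train):
    """Hypothetical learner: 1D threshold classifier.

    Returns the midpoint between the largest negative (label 0) and the
    smallest positive (label 1) example, or None if a class is missing.
    """
    neg = [x for x, y in train if y == 0]
    pos = [x for x, y in train if y == 1]
    if not neg or not pos:
        return None
    return (max(neg) + min(pos)) / 2.0


def teach(pool, target, tol):
    """Brute-force teacher (illustrative only).

    Labels every pool item with the target threshold, then searches subsets
    of increasing size and returns the first one on which the learner's
    threshold is within `tol` of `target`.
    """
    labeled = [(x, int(x >= target)) for x in pool]
    for size in range(1, len(labeled) + 1):
        for subset in combinations(labeled, size):
            theta = learner(subset)
            if theta is not None and abs(theta - target) <= tol:
                return list(subset), theta
    return None, None


# Candidate inputs 0.0, 0.05, ..., 1.0; the target threshold is an assumption.
pool = [i / 20 for i in range(21)]
teaching_set, theta = teach(pool, target=0.62, tol=0.01)
print(teaching_set, theta)
```

For this learner, two well-chosen examples are enough, and they are picked deliberately rather than sampled at random. The brute-force search is exponential in the pool size, which is one way to see why the general problem is hard and why tractable special cases matter.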

Adversarial Machine Learning, Security, and Trustworthy AI

An adversary can force machine learning to make mistakes. We study why this happens and how to defend against it. Our research ranges from test-time attacks and training-data poisoning attacks to other, subtler forms of adversarial attack. [project website]

Enhancing human learning using computational learning theory

What is the VC-dimension of the human mind? Do people do active learning? Do they do semi-supervised learning? Is there a mathematically optimal way to teach them? This project seeks a unifying theory behind machine learning and human learning. It helps us understand how humans learn, with the potential to enhance education and produce new machine learning algorithms. [project website]

Topology for machine learning

Persistent homology is a mathematical tool from topological data analysis. The 0th-order homology groups correspond to clusters, the 1st-order homology groups to "holes" such as the center of a donut, the 2nd-order homology groups to "voids" such as the inside of a balloon, and so on. These seemingly exotic mathematical structures may provide valuable invariant data representations that complement current feature-based representations in machine learning. Here is a gentle tutorial for computer scientists and an idea for natural language processing. Here is another idea for machine learning. Also check out the ICML 2014 workshop on Topological Methods for Machine Learning.

Fighting bullying with machine learning (and other social media for social good projects)

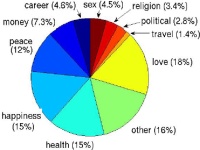

Bullying is a serious national health issue. Social science studies of bullying have traditionally relied on personal surveys in schools, which suffer from small sample sizes and low temporal resolution. We are developing novel machine learning models to study bullying. Our model aims to reconstruct a bullying event (who the bullies, victims, and witnesses are, and what happened to them) from publicly available social media posts. Our model and data can improve the scientific study of bullying, as well as intervention and policy-making. For details see our project website. New: Bullying dataset for machine learning version 3.0 released in June 2015, with 7321 tweets annotated with bullying, author role, teasing, type, form, and emotion labels.

More broadly, we develop machine learning models to mine social media for social good. For instance, our Socioscope model helps scientists estimate the spatio-temporal distribution of wildlife from roadkill posts (ECML-PKDD 2012 Best Paper), and another of our models estimates real-time air quality from Weibo posts.

Safe semi-supervised learning

To use unlabeled data or not, that is the question. It is known that semi-supervised learning can be inferior to supervised learning if its model assumption is violated. Can we design semi-supervised algorithms that are provably robust to such failure? The challenge is to detect a violation of the model assumption from limited labeled data, which is exactly the setting where semi-supervised learning is most useful. [project website]