Using Machine Learning to Understand and Enhance Human Learning Capacity

Research Projects

The overall goal of the project is to apply computational learning models and theory, originally developed for computers, to predict and influence human learning behavior.

Capacity measure of the human mind

What is the VC-dimension of the human mind?

In machine learning, the VC-dimension is a well-known capacity measure for a model family.

What if the "model family" is the human mind, i.e., the set of all classifiers that a person can come up with?

Can we estimate such a capacity for humans?

We propose a method to estimate the Rademacher complexity of the human mind in binary categorization tasks.

Such an estimate would quantify the intrinsic complexity of the human thinking process.

It also has direct application in understanding overfitting in human learning.
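For intuition about the quantity being estimated: the empirical Rademacher complexity of a hypothesis class F on items x_1, ..., x_n is E_σ[sup_{f∈F} (1/n) Σ_i σ_i f(x_i)], where the σ_i are independent, uniformly random ±1 labels. It measures how well the class can fit pure noise. The minimal sketch below estimates this quantity by Monte Carlo for a simple machine class, 1D threshold classifiers, chosen here purely for illustration. The function names are hypothetical, and this is not the procedure used with human participants, where a person rather than an optimizer fits the random labels.

```python
import random
from typing import Sequence


def best_threshold_correlation(xs: Sequence[float], sigma: Sequence[int]) -> float:
    """sup over 1D threshold classifiers f of (1/n) * sum_i sigma_i * f(x_i).

    Assumes the xs are distinct. A threshold labels the k smallest points +1 and
    the rest -1 (or the negation); taking abs() covers both orientations.
    """
    n = len(xs)
    order = sorted(range(n), key=lambda i: xs[i])
    total = -sum(sigma)  # threshold left of every point: all labeled -1
    best = abs(total)
    for i in order:  # sweep the threshold rightward past one point at a time
        total += 2 * sigma[i]  # the passed point flips from -1 to +1
        best = max(best, abs(total))
    return best / n


def empirical_rademacher(xs: Sequence[float], num_draws: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of E_sigma[ sup_f (1/n) sum_i sigma_i f(x_i) ]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_draws):
        sigma = [rng.choice((-1, 1)) for _ in xs]  # random +/-1 "noise" labels
        total += best_threshold_correlation(xs, sigma)
    return total / num_draws


for n in (2, 5, 20, 80):
    print(n, empirical_rademacher([i / n for i in range(n)]))
```

A threshold can only split the sorted items into a prefix and a suffix, so it fits every random labeling of two items, but its estimate falls toward 0 as n grows. A richer class would stay closer to 1, and that is the sense in which this measure captures capacity.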

Read more:

- Xiaojin Zhu, Timothy Rogers, and Bryan Gibson. Human Rademacher Complexity. In Advances in Neural Information Processing Systems (NIPS) 23, 2009.

Optimal teaching

Given a task and a learner, can a teacher design an optimal teaching strategy so that the learner "gets" the true concept quickly? Recent work in the machine learning community on teaching dimension and curriculum learning has begun to address this question. We are developing new computational theory and performing human behavioral experiments to advance our understanding of optimal teaching.


Human semi-supervised learning

Human category learning is traditionally thought of as supervised learning.

We demonstrated that it is in fact greatly influenced by unlabeled data, and should be modeled as semi-supervised learning.

For example, after learning, simply categorizing unlabeled test items (without any feedback) can shift a person's decision boundary.

Read more:

- Xiaojin Zhu, Timothy Rogers, Ruichen Qian, and Chuck Kalish. Humans perform semi-supervised classification too. In Twenty-Second AAAI Conference on Artificial Intelligence (AAAI-07), 2007.

- Xiaojin Zhu, Bryan R. Gibson, Kwang-Sung Jun, Timothy T. Rogers, Joseph Harrison, and Chuck Kalish. Cognitive models of test-item effects in human category learning. In The 27th International Conference on Machine Learning (ICML), 2010.

- Bryan Gibson, Xiaojin Zhu, Tim Rogers, Chuck Kalish, and Joseph Harrison. Humans learn using manifolds, reluctantly. In Advances in Neural Information Processing Systems (NIPS) 24, 2010.

- Charles W. Kalish, Timothy T. Rogers, Jonathan Lang, and Xiaojin Zhu. Can semi-supervised learning explain incorrect beliefs about categories? Cognition, 2011.

- Xiaojin Zhu, Bryan Gibson, and Timothy Rogers. Co-training as a human collaboration policy. In The Twenty-Fifth Conference on Artificial Intelligence (AAAI-11), 2011.

Human active learning

Under certain conditions, an active machine learner provably outperforms a passive learner.

If we allow a human learner to submit queries and obtain oracle labels, can they do better than their peers who passively receive i.i.d. training samples?

We showed that the answer is yes.
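To see why active learning can help, consider a noise-free 1D threshold concept on [0, 1], which is an assumption made for this sketch. Suppose the learner always queries the midpoint of the interval still consistent with the labels. Each query then halves that interval, so about log2(1/ε) queries pin the threshold down to width ε. The function and parameter names below are hypothetical.

```python
from typing import Callable, Tuple


def active_learn_threshold(oracle: Callable[[float], bool], eps: float) -> Tuple[float, int]:
    """Binary-search active learner for a noise-free threshold theta in (0, 1].

    oracle(x) is assumed to return True iff x >= theta.
    Returns (estimated threshold, number of queries used).
    """
    lo, hi = 0.0, 1.0  # invariant: lo < theta <= hi
    queries = 0
    while hi - lo > eps:
        x = (lo + hi) / 2  # query the midpoint of the consistent interval
        if oracle(x):      # positive label: theta <= x
            hi = x
        else:              # negative label: theta > x
            lo = x
        queries += 1
    return (lo + hi) / 2, queries


estimate, used = active_learn_threshold(lambda x: x >= 0.3, eps=0.01)
print(estimate, used)
```

With threshold 0.3 and ε = 0.01, the loop stops after 7 queries. That is because 2^-7 ≈ 0.0078 is the first power of two at or below 0.01.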

Read more:

- Rui Castro, Charles Kalish, Robert Nowak, Ruichen Qian, Timothy Rogers, and Xiaojin Zhu. Human active learning. In Advances in Neural Information Processing Systems (NIPS) 22, 2008.

Publications from this project

-

Ji Liu and Xiaojin Zhu.

The teaching dimension of linear learners.

Journal of Machine Learning Research, 17(162):1-25, 2016.

This is the journal version of the ICML'16 paper, with a discussion on teacher-learner collusion.

[link]

-

Xiaojin Zhu, Ji Liu, and Manuel Lopes.

No learner left behind: On the complexity of teaching multiple learners simultaneously.

In The 26th International Joint Conference on Artificial Intelligence (IJCAI), 2017.

Minimax teaching dimension to make the worst learner in a class learn.

Partitioning the class into sections improves teaching dimension.

[pdf]

-

Scott Alfeld, Xiaojin Zhu, and Paul Barford.

Explicit defense actions against test-set attacks.

In The Thirty-First AAAI Conference on Artificial Intelligence (AAAI), 2017.

[pdf]

-

Tzu-Kuo Huang, Lihong Li, Ara Vartanian, Saleema Amershi, and Xiaojin Zhu.

Active learning with oracle epiphany.

In Advances in Neural Information Processing Systems (NIPS), 2016.

This paper brings active learning theory and practice closer. We analyze active learning query complexity when the oracle initially may not know how to answer queries from a certain region of the input space. After seeing multiple queries from that region, the oracle can have an "epiphany", i.e., realize how to answer any query from that region.

[pdf]

-

Ji Liu, Xiaojin Zhu, and H. Gorune Ohannessian.

The Teaching Dimension of Linear Learners.

In The 33rd International Conference on Machine Learning (ICML), 2016.

We provide lower bounds on the training-set size needed to perfectly teach a linear learner.

We also provide the corresponding upper bounds (and thus teaching dimension) by exhibiting teaching sets for SVM, logistic regression, and ridge regression.

[pdf | supplementary | arXiv preprint]
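Here is a quick numerical check of why very few items can suffice. Assume the homogeneous ridge objective ½(w·x − y)² + (λ/2)‖w‖²; this formulation is our assumption. A single item with x = w* and y = ‖w*‖² + λ makes the gradient vanish exactly at w*, and since the objective is strictly convex, w* is its unique minimizer. The sketch verifies this and then trains the learner on that one item by gradient descent. The helper names and the choice of w* are hypothetical, and the paper's general constructions may differ.

```python
from typing import List, Tuple


def ridge_gradient(w: List[float], x: List[float], y: float, lam: float) -> List[float]:
    """Gradient of 0.5*(w.x - y)^2 + 0.5*lam*||w||^2 with respect to w."""
    r = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [r * xi + lam * wi for wi, xi in zip(w, x)]


def one_item_teaching_set(w_star: List[float], lam: float) -> Tuple[List[float], float]:
    """Hypothetical one-item construction: x = w*, y = ||w*||^2 + lam."""
    x = list(w_star)
    y = sum(wi * wi for wi in w_star) + lam
    return x, y


def train_ridge_gd(x: List[float], y: float, lam: float, lr: float = 0.1, steps: int = 500) -> List[float]:
    """Plain gradient descent on the one-item ridge objective, starting from 0."""
    w = [0.0] * len(x)
    for _ in range(steps):
        g = ridge_gradient(w, x, y, lam)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w


w_star = [1.0, -2.0, 0.5]  # hypothetical target model
lam = 0.1
x, y = one_item_teaching_set(w_star, lam)
w_learned = train_ridge_gd(x, y, lam)
print(ridge_gradient(w_star, x, y, lam), w_learned)
```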

-

Xiaojin Zhu, Ara Vartanian, Manish Bansal, Duy Nguyen, and Luke Brandl.

Stochastic multiresolution persistent homology kernel.

In The 25th International Joint Conference on Artificial Intelligence (IJCAI), 2016.

A kernel built on persistent homology at multiple resolutions, with Monte Carlo sampling for speed.

Ready to use as topological features for machine learning.

[pdf]

-

Kwang-Sung Jun, Kevin Jamieson, Rob Nowak, and Xiaojin Zhu.

Top arm identification in multi-armed bandits with batch arm pulls.

In The 19th International Conference on Artificial Intelligence and Statistics (AISTATS), 2016.

[pdf]

-

Scott Alfeld, Xiaojin Zhu, and Paul Barford.

Data Poisoning Attacks against Autoregressive Models.

In The Thirtieth AAAI Conference on Artificial Intelligence (AAAI), 2016.

Machine teaching for autoregression, applied to computer security.

[pdf]

-

Kwang-Sung Jun, Xiaojin Zhu, Timothy Rogers, Zhuoran Yang, and Ming Yuan.

Human memory search as initial-visit emitting random walk.

In Advances in Neural Information Processing Systems (NIPS), 2015.

A random walk that only emits an output when it visits a state for the first time.

[pdf | supplemental | poster]
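The censoring mechanism is easy to simulate. Run an ordinary Markov chain, but record a state only the first time it is visited. The output is then a list with no repeats, much like a person listing animals from memory. The sketch below assumes a small hypothetical transition table and hypothetical function names. It simulates only the generative process and does not implement the paper's parameter estimation.

```python
import random
from typing import Dict, List


def invite_walk(transitions: Dict[str, Dict[str, float]], start: str,
                steps: int, rng: random.Random) -> List[str]:
    """Random walk that emits a state only on its first visit."""
    state = start
    emitted = [start]
    seen = {start}
    for _ in range(steps):
        neighbors = list(transitions[state])
        weights = [transitions[state][s] for s in neighbors]
        state = rng.choices(neighbors, weights=weights)[0]
        if state not in seen:  # repeat visits are silent
            seen.add(state)
            emitted.append(state)
    return emitted


# Hypothetical transition probabilities between animal concepts
animals = {
    "dog": {"cat": 0.6, "wolf": 0.4},
    "cat": {"dog": 0.5, "lion": 0.5},
    "wolf": {"dog": 0.7, "lion": 0.3},
    "lion": {"cat": 0.5, "wolf": 0.5},
}
out = invite_walk(animals, "dog", steps=1000, rng=random.Random(0))
print(out)
```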

-

Gautam Dasarathy, Robert Nowak, and Xiaojin Zhu.

S2: An efficient graph based active learning algorithm with application to nonparametric classification.

In Conference on Learning Theory (COLT), 2015.

[pdf]

-

Shike Mei and Xiaojin Zhu.

The security of latent Dirichlet allocation.

In The Eighteenth International Conference on Artificial Intelligence and Statistics (AISTATS), 2015.

[pdf | slides]

-

Xiaojin Zhu.

Machine Teaching: an Inverse Problem to Machine Learning and an Approach Toward Optimal Education.

In The Twenty-Ninth AAAI Conference on Artificial Intelligence (Senior Member Track, AAAI), 2015.

[pdf | talk slides | project link]

-

Shike Mei and Xiaojin Zhu.

Using Machine Teaching to Identify Optimal Training-Set Attacks on Machine Learners.

In The Twenty-Ninth AAAI Conference on Artificial Intelligence (AAAI), 2015.

[pdf | poster ad | poster | Mendota ice data | Tech Report 1813]

-

Bryan Gibson, Timothy Rogers, Charles Kalish, and Xiaojin Zhu.

What causes category-shifting in human semi-supervised learning?

In The 32nd Annual Conference of the Cognitive Science Society (CogSci), 2015.

[pdf]

-

Kaustubh Patil, Xiaojin Zhu, Lukasz Kopec, and Bradley Love.

Optimal Teaching for Limited-Capacity Human Learners.

In Advances in Neural Information Processing Systems (NIPS), 2014.

[pdf | poster | spotlight | data]

-

Charles Kalish, Xiaojin Zhu, and Timothy Rogers.

Drift in children's categories: When experienced distributions conflict with prior learning.

In Developmental Science, 2014.

-

Xiaojin Zhu.

Machine teaching for Bayesian learners in the exponential family.

In Advances in Neural Information Processing Systems (NIPS), 2013.

[pdf | poster]

-

Kwang-Sung Jun, Xiaojin Zhu, Burr Settles, and Timothy Rogers.

Learning from Human-Generated Lists.

In The 30th International Conference on Machine Learning (ICML), 2013.

[pdf | slides | SWIRL v1.0 code | video]

-

Bryan R. Gibson, Timothy T. Rogers, and Xiaojin Zhu.

Human semi-supervised learning.

Topics in Cognitive Science, 5(1):132-172, 2013.

[link]

-

Xiaojin Zhu.

Persistent homology: An introduction and a new text representation for natural language processing.

In The 23rd International Joint Conference on Artificial Intelligence (IJCAI), 2013.

[pdf | slides | data and code]

-

Burr Settles and Xiaojin Zhu.

Behavioral factors in interactive training of text classifiers.

In North American Chapter of the Association for Computational Linguistics - Human Language Technologies (NAACL HLT). Short paper. 2012.

[pdf]

-

Faisal Khan, Xiaojin Zhu, and Bilge Mutlu.

How do humans teach: On curriculum learning and teaching dimension.

In Advances in Neural Information Processing Systems (NIPS) 25, 2011.

[pdf | data | slides]

-

Shilin Ding, Grace Wahba, and Xiaojin Zhu.

Learning higher-order graph structure with features by structure penalty.

In Advances in Neural Information Processing Systems (NIPS) 25, 2011.

[pdf]

-

Jun-Ming Xu, Xiaojin Zhu, and Timothy T. Rogers.

Metric learning for estimating psychological similarities.

ACM Transactions on Intelligent Systems and Technology (ACM TIST), 2011.

[journal link | unofficial version | data | code]

-

David Andrzejewski, Xiaojin Zhu, Mark Craven, and Ben Recht.

A framework for incorporating general domain knowledge into Latent Dirichlet Allocation using First-Order Logic.

In The Twenty-Second International Joint Conference on Artificial Intelligence (IJCAI-11), 2011.

[pdf | slides | poster | code]

-

Xiaojin Zhu, Bryan Gibson, and Timothy Rogers.

Co-training as a human collaboration policy.

In The Twenty-Fifth Conference on Artificial Intelligence (AAAI-11), 2011.

[pdf]

-

Andrew Goldberg, Xiaojin Zhu, Alex Furger, and Jun-Ming Xu.

OASIS: Online active semisupervised learning.

In The Twenty-Fifth Conference on Artificial Intelligence (AAAI-11), 2011.

[pdf]

-

Chen Yu, Jun-Ming Xu, and Xiaojin Zhu.

Word learning through sensorimotor child-parent interaction: A feature selection approach.

In The 33rd Annual Conference of the Cognitive Science Society (CogSci 2011), 2011.

[pdf]

-

Charles W. Kalish, Timothy T. Rogers, Jonathan Lang, and Xiaojin Zhu.

Can semi-supervised learning explain incorrect beliefs about categories?

Cognition, 2011.

[link]

-

Bryan Gibson, Xiaojin Zhu, Tim Rogers, Chuck Kalish, and Joseph Harrison.

Humans learn using manifolds, reluctantly.

In Advances in Neural Information Processing Systems (NIPS) 24, 2010.

[pdf | NIPS talk slides]

-

Andrew Goldberg, Xiaojin Zhu, Benjamin Recht, Jun-Ming Xu, and Robert Nowak.

Transduction with matrix completion: Three birds with one stone.

In Advances in Neural Information Processing Systems (NIPS) 24, 2010.

[pdf]

-

Xiaojin Zhu, Bryan R. Gibson, Kwang-Sung Jun, Timothy T. Rogers, Joseph Harrison, and Chuck Kalish.

Cognitive models of test-item effects in human category learning.

In The 27th International Conference on Machine Learning (ICML), 2010.

[paper pdf]

-

Bryan R Gibson, Kwang-Sung Jun, and Xiaojin Zhu.

With a little help from the computer: Hybrid human-machine systems on bandit problems.

In NIPS 2010 Workshop on Computational Social Science and the Wisdom of Crowds, 2010.

[pdf]

Selected highlights from publications

In terms of understanding learning, we have made a number of discoveries:

-

Human teaching strategies:

We discovered interesting discrepancies between theoretically optimal teaching strategies and actual human teaching behavior. We considered the concept class of 1D threshold classifiers. To learn a target concept to within error ε from i.i.d. training items, a learner needs a number of items polynomial in 1/ε (i.e., a lot). If the learner can do active learning, it needs a number of items logarithmic in 1/ε (i.e., a few); note that here the oracle waits for the learner to pick the query items. However, if the oracle picks the training items for the learner, two items suffice: one positive and one negative, right around the threshold. This optimal teaching strategy is captured by a branch of computational learning theory known as teaching dimension. Our behavioral experiments, in which humans play the role of the oracle teacher in this setting, revealed that human teaching behavior differs dramatically from the optimal strategy predicted by teaching dimension. In particular, a significant number of human teachers seem to follow the so-called "curriculum learning" strategy: they first teach extreme items at both ends of the 1D range and then zigzag toward the threshold. We developed a theoretical explanation which suggests that such teaching behavior might be optimal after all; the explanation is based on risk minimization under certain assumptions. [NIPS 2011a]
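The gap between teaching and passive learning can be sketched directly. Assume the concept "label 1 iff x ≥ θ" on [0, 1], and a learner that keeps the interval of thresholds consistent with its labeled items; all names are hypothetical. The two-item teaching set shrinks that interval to width ε immediately. A passive learner drawing uniform i.i.d. items typically needs many more draws to get there.

```python
import random
from typing import List, Tuple

Item = Tuple[float, int]  # (x, label); label is 1 iff x >= theta


def version_space(labeled: List[Item]) -> Tuple[float, float]:
    """Interval (lo, hi] of thresholds on [0, 1] consistent with the labeled items."""
    lo, hi = 0.0, 1.0
    for x, y in labeled:
        if y == 1:
            hi = min(hi, x)  # a positive at x means theta <= x
        else:
            lo = max(lo, x)  # a negative at x means theta > x
    return lo, hi


def teaching_set(theta: float, eps: float) -> List[Item]:
    """The teacher's two items: one negative and one positive straddling theta."""
    return [(theta - eps / 2, 0), (theta + eps / 2, 1)]


def passive_sample_size(theta: float, eps: float, rng: random.Random) -> int:
    """Number of uniform i.i.d. items drawn until the version space has width <= eps."""
    labeled: List[Item] = []
    while True:
        x = rng.random()
        labeled.append((x, 1 if x >= theta else 0))
        lo, hi = version_space(labeled)
        if hi - lo <= eps:
            return len(labeled)


lo, hi = version_space(teaching_set(0.3, 0.01))
n_passive = passive_sample_size(0.3, 0.01, random.Random(0))
print(hi - lo, n_passive)
```

Averaged over seeds, the passive count in this setup grows roughly in proportion to 1/ε. The teaching set stays at two items for every ε.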


-

Yada:

An important problem in cognitive psychology is to quantify the perceived similarities between stimuli. Previous work attempted to address this problem with multidimensional scaling (MDS) and its variants. However, the input those algorithms require is not always easy to obtain in practice. We proposed Yada, a novel general metric learning procedure based on two-alternative forced-choice behavioral experiments. Our method learns forward and backward nonlinear mappings between an objective space, in which the stimuli are defined by the standard feature vector representation, and a subjective space, in which the distance between a pair of stimuli corresponds to their perceived similarity. We conducted experiments on both synthetic and real human behavioral datasets to assess the effectiveness of Yada. The results show that Yada outperforms several standard embedding and metric learning algorithms, both in terms of likelihood and recovery error. [TIST 2011]
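To make the two-alternative forced-choice (2AFC) setup concrete, the sketch below swaps in something much simpler than Yada. Yada learns nonlinear forward and backward mappings. Here we instead fit only a diagonal, per-feature weighted squared distance, by gradient ascent on a logistic likelihood of the choices. The logistic choice model, the synthetic "participant" who attends only to feature 0, and all names are assumptions made for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

Trial = Tuple[Sequence[float], Sequence[float], Sequence[float]]  # (x, a, b): a judged closer to x


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def weighted_sq_dist(w: Sequence[float], a: Sequence[float], b: Sequence[float]) -> float:
    return sum(wk * (ak - bk) ** 2 for wk, ak, bk in zip(w, a, b))


def fit_diagonal_metric(trials: List[Trial], dim: int, lr: float = 0.5, epochs: int = 300) -> List[float]:
    """Maximize sum log P(choice) with P(choose a) = sigmoid(d(x, b) - d(x, a))."""
    u = [0.0] * dim  # log-weights, so w = exp(u) stays positive
    for _ in range(epochs):
        w = [math.exp(uk) for uk in u]
        grad = [0.0] * dim
        for x, a, b in trials:
            p = sigmoid(weighted_sq_dist(w, x, b) - weighted_sq_dist(w, x, a))
            for k in range(dim):
                dmargin_dwk = (x[k] - b[k]) ** 2 - (x[k] - a[k]) ** 2
                grad[k] += (1.0 - p) * dmargin_dwk * w[k]  # chain rule through w = exp(u)
        u = [uk + lr * gk / len(trials) for uk, gk in zip(u, grad)]
    return [math.exp(uk) for uk in u]


# Hypothetical synthetic participant: perceived similarity depends only on feature 0
rng = random.Random(0)
true_w = [1.0, 0.0]
trials: List[Trial] = []
for _ in range(200):
    x, c1, c2 = ([rng.random(), rng.random()] for _ in range(3))
    if weighted_sq_dist(true_w, x, c1) <= weighted_sq_dist(true_w, x, c2):
        trials.append((x, c1, c2))
    else:
        trials.append((x, c2, c1))

w_hat = fit_diagonal_metric(trials, dim=2)
print(w_hat)  # feature 0 should receive the larger weight
```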


-

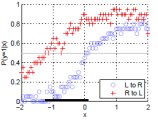

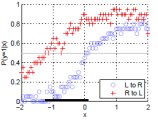

Test-Item Effect:

We have found that human categorization is strongly influenced by unlabeled test items. Here is an analogy. Imagine two luggage screening officers who received exactly the same training (i.e., the same labeled data) and thus had the same idea of which bags are dangerous and which are not (i.e., the binary label). Now each of them individually screens different bags (i.e., different unlabeled data), but *without feedback on the true labels of those bags*. Interestingly, this is enough to shift their internal decision boundaries, to the extent that they may disagree on the label of certain bags. We were able to quantify this "test-item effect" using semi-supervised machine learning models [Cognition 2011, ICML 2010].
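The analogy can be mimicked with a deliberately simple stand-in, which is not one of the models from the papers. It uses a 1D prototype learner that, after training, classifies each unlabeled item by which side of the boundary it falls on. Each item then pulls its class prototype toward it, with no feedback (hard-assignment self-training). The two "officers" share the same two labeled items but see different hypothetical unlabeled items, and all names are illustrative.

```python
from statistics import mean
from typing import List, Tuple


def self_trained_boundary(labeled: List[Tuple[float, int]], unlabeled: List[float],
                          iters: int = 20) -> float:
    """Hard-assignment self-training of two 1D prototypes; returns the final boundary.

    Assumes class 0 lies to the left of class 1.
    """
    base0 = [x for x, y in labeled if y == 0]
    base1 = [x for x, y in labeled if y == 1]
    m0, m1 = mean(base0), mean(base1)
    for _ in range(iters):
        boundary = (m0 + m1) / 2
        # Classify each unlabeled item without feedback, then let it pull its prototype.
        side0 = [x for x in unlabeled if x < boundary]
        side1 = [x for x in unlabeled if x >= boundary]
        m0, m1 = mean(base0 + side0), mean(base1 + side1)
    return (m0 + m1) / 2


training = [(-1.0, 0), (1.0, 1)]  # identical training for both officers
officer_a = self_trained_boundary(training, [-1.8, -1.5, -1.2, 0.2, 0.5, 0.8])
officer_b = self_trained_boundary(training, [-0.8, -0.5, -0.2, 1.2, 1.5, 1.8])
bag = 0.0
print(officer_a, officer_b)
print(int(bag >= officer_a), int(bag >= officer_b))
```

Both officers start with the boundary at 0. Officer A's boundary settles at -0.375 and officer B's at 0.375, so they disagree on a bag at x = 0.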


Fold.all:

Want to add all kinds of domain knowledge (e.g., word-must-in-this-topic, word-must-not-in-that-topic, if-this-then-that, etc.) to LDA? As long as you can write it in first-order logic (FOL), our fold.all model can combine your logic knowledge base with latent Dirichlet allocation for you via stochastic gradient descent. The resulting topics will be guided by both logic and data statistics. You don't have to derive customized LDA variants ever again (disclaimer: read the paper). In other words, fold.all is to LDA what constrained clustering is to clustering.

[IJCAI 2011]
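As we understand it, the fold.all objective adds to the LDA log-likelihood a weighted count of the grounded FOL clauses that the topic assignments satisfy. The sketch below computes only that logic term, for a toy corpus. Encoding clauses as Python predicates over (document, word, topic) positions, along with every name here, is our assumption for illustration and not the paper's implementation.

```python
from typing import Callable, List, Tuple

Position = Tuple[int, str, int]  # (doc_id, word, assigned topic)
Clause = Callable[[Position], bool]


def logic_score(positions: List[Position], weighted_clauses: List[Tuple[float, Clause]]) -> float:
    """Sum over clauses of weight * number of groundings (positions) that satisfy the clause."""
    return sum(w * sum(1 for p in positions if clause(p)) for w, clause in weighted_clauses)


def must_link_apple(p: Position) -> bool:
    """Hypothetical rule W(i, apple) => Z(i, 0): 'apple' must be in topic 0."""
    _, word, topic = p
    return word != "apple" or topic == 0


def cannot_link_bank(p: Position) -> bool:
    """Hypothetical rule W(i, bank) => not Z(i, 1): 'bank' must not be in topic 1."""
    _, word, topic = p
    return word != "bank" or topic != 1


positions = [(0, "apple", 0), (0, "bank", 1), (1, "apple", 1), (1, "tree", 0)]
score = logic_score(positions, [(2.0, must_link_apple), (1.0, cannot_link_bank)])
print(score)
```

Each rule here is violated at exactly one position, so moving either offending word to a permitted topic would raise the logic term. That is the pressure the logic term adds on top of the usual LDA statistics.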


OASIS and matrix completion: We have proposed two novel semi-supervised learning models that can learn incrementally from an unbounded data stream and from data with missing entries, abilities that are important for explaining human learning. [AAAI 2011, NIPS 2010]


Parent-child word learning:

We have had some initial success in identifying the important factors in early child word learning using a sparse regression model. [CogSci 2011]
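The sketch below illustrates how a sparse regression can single out important factors. We do not know the exact model used in the CogSci paper, so this assumes an L1-penalized least-squares (lasso) fit by cyclic coordinate descent, minimizing (1/2n)‖y − Xβ‖² + α‖β‖₁. The three "factors" and the orthogonal toy design, in which only factor 0 drives the outcome, are hypothetical.

```python
from typing import List, Sequence


def soft_threshold(z: float, t: float) -> float:
    """Shrink z toward zero by t, returning exactly 0 inside [-t, t]."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_coordinate_descent(X: Sequence[Sequence[float]], y: Sequence[float],
                             alpha: float, sweeps: int = 100) -> List[float]:
    """Minimize (1/(2n)) * ||y - X beta||^2 + alpha * ||beta||_1 by cyclic coordinate descent."""
    n, d = len(X), len(X[0])
    beta = [0.0] * d
    for _ in range(sweeps):
        for j in range(d):
            # Correlate feature j with the partial residual (the fit from every other feature removed)
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * beta[k] for k in range(d) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            beta[j] = soft_threshold(rho, alpha) / z
    return beta


# Hypothetical factors (columns); only factor 0 drives the outcome
X = [[1, 1, 1], [-1, 1, -1], [1, -1, -1], [-1, -1, 1]]
y = [2.0 * row[0] for row in X]
beta = lasso_coordinate_descent(X, y, alpha=0.1)
print(beta)
```

The L1 penalty sets the irrelevant coefficients exactly to zero and shrinks the relevant one slightly, from 2 to 1.9. This is what makes the fitted model readable as a short list of important factors.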


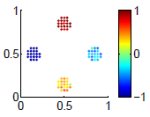

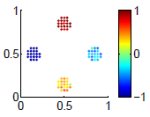

Human manifold learning:

Humans can learn the two-moon dataset, if we give them 4 (but not 2) labeled points and clue them in on the graph.

[NIPS 2010]
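For comparison, a machine learner given a graph can spread labels along its edges. This sketch uses harmonic-function label propagation: each unlabeled node's score is repeatedly replaced by the average of its neighbors' scores, while labeled nodes stay fixed. The toy graph is hypothetical, with two chains standing in for the two moons, joined by a single edge and carrying one labeled node each. It does not model the human experiment.

```python
from typing import Dict, List


def harmonic_labels(adj: Dict[str, List[str]], labeled: Dict[str, float],
                    sweeps: int = 500) -> Dict[str, float]:
    """Gauss-Seidel iteration toward the harmonic solution with labeled nodes clamped."""
    f = {v: labeled.get(v, 0.5) for v in adj}
    for _ in range(sweeps):
        for v in adj:
            if v not in labeled:
                f[v] = sum(f[u] for u in adj[v]) / len(adj[v])
    return f


adj: Dict[str, List[str]] = {}


def add_edge(u: str, v: str) -> None:
    adj.setdefault(u, []).append(v)
    adj.setdefault(v, []).append(u)


for i in range(4):
    add_edge(f"a{i}", f"a{i + 1}")  # hypothetical "moon" A
    add_edge(f"b{i}", f"b{i + 1}")  # hypothetical "moon" B
add_edge("a4", "b4")  # a single bridge between the two moons

scores = harmonic_labels(adj, {"a0": 1.0, "b0": 0.0})
print({v: round(s, 3) for v, s in sorted(scores.items())})
```

The scores fall off linearly along the path, so every node in chain A stays above 0.5 and every node in chain B stays below it.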


In terms of enhancing learning, we have made the following progress:

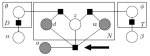

Human Co-Training:

We turned the co-training algorithm into a human collaboration policy in which two people learn a concept together. Importantly, each person sees only half of the features, and the two communicate by exchanging labels. We show that this policy leads to unique nonlinear decision boundaries that would have been difficult to learn had the two people fully collaborated or not collaborated at all. [AAAI 2011]
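A toy version of the policy looks like this: two learners, each seeing one feature (view), take turns labeling the unlabeled item they are most confident about, and add it to a shared labeled pool. This is a sketch under stated assumptions, not the protocol of the AAAI 2011 experiments. The nearest-centroid learner per person, the alternating turns, the toy items, and all names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def centroid_boundary(pool: List[Tuple[Point, int]], view: int) -> Tuple[float, bool]:
    """Midpoint between class centroids on one view, and whether class 1 lies on the high side."""
    c0 = mean(x[view] for x, y in pool if y == 0)
    c1 = mean(x[view] for x, y in pool if y == 1)
    return (c0 + c1) / 2, c1 > c0


def co_train(labeled: List[Tuple[Point, int]], unlabeled: List[Point]) -> Dict[Point, int]:
    """Alternate between the two views; each turn labels the most confident remaining item."""
    pool = list(labeled)
    remaining = list(unlabeled)
    assigned: Dict[Point, int] = {}
    view = 0  # person 1 sees feature 0, person 2 sees feature 1
    while remaining:
        b, pos_high = centroid_boundary(pool, view)
        x = max(remaining, key=lambda item: abs(item[view] - b))  # farthest from own boundary
        y = 1 if (x[view] > b) == pos_high else 0
        remaining.remove(x)
        pool.append((x, y))  # the label is shared with the other person
        assigned[x] = y
        view = 1 - view  # hand the turn to the other person
    return assigned


labels = co_train([((0.0, 0.0), 0), ((1.0, 1.0), 1)],
                  [(0.05, 0.6), (0.7, 0.95), (0.3, 0.2), (0.9, 0.55)])
print(labels)
```

Person 2 sees only feature 1, with an initial boundary of 0.65. On its own, that view would put (0.9, 0.55) in class 0, but person 1 labels that item 1 from feature 0 and passes the label along.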


Computer Reverse Psychology:

We programmed a computer to apply reverse psychology to humans, in the hope of correcting irrational human behavior in a multi-armed bandit setting. We found that humans are not strongly influenced by this naive application of reverse psychology [NIPS 2010 workshop]. This result constrains future persuasive strategies and deserves further research.


Data sets for download

Code for download

Research Group

Faculty

Graduate Students

Undergraduate Students

Staff

- Lang Chen

- Joseph Harrison

Collaborators

- Professor Mark Craven, Department of Biostatistics and Medical Informatics, University of Wisconsin-Madison.

Combining human knowledge with data-driven approaches.

- Professor Charles W. Kalish, Department of Educational Psychology, University of Wisconsin-Madison.

Machine learning models applied to cognitive psychology.

- Professor Bilge Mutlu, Department of Computer Sciences, University of Wisconsin-Madison.

Machine learning models applied to human teaching behaviors.

- Professor Robert Nowak, Department of Electrical and Computer Engineering, University of Wisconsin-Madison.

Optimization techniques for machine learning.

- Professor Benjamin Recht, Department of Computer Sciences, University of Wisconsin-Madison.

Optimization techniques for machine learning.

- Professor Timothy T. Rogers, Department of Psychology, University of Wisconsin-Madison.

Machine learning models applied to cognitive psychology.

- Professor Grace Wahba, Department of Statistics, University of Wisconsin-Madison.

Graphical model structure learning.

- Professor Chen Yu, Cognitive Science, Indiana University.

Parent-child word learning modeling.

Professor Xiaojin Zhu in Computer Sciences at the University of Wisconsin-Madison is the recipient of a 2010 Faculty Early Career Development Award (CAREER) from the National Science Foundation, a five-year grant designed to boost young faculty in establishing integrated research and educational activities while helping to address areas of important need. Zhu's CAREER project is titled "Using Machine Learning to Understand and Enhance Human Learning Capacity." His project aims to discover the common mathematical principles that govern learning in both humans and computers. Examples include rigorous generalization error bounds (how well can a student or a robot generalize what the teacher taught to new problems?), sparsity (how well can the student or robot identify a few salient features of a problem, out of a haystack of irrelevant features?), and active learning (can the student or robot ask good questions to speed up its own learning?). He expects the project will lead to novel computational approaches to enhance human learning in and out of classrooms, and advance machine learning by incorporating insights on tasks where humans excel.

This project is based upon work supported by the National Science Foundation under Grant No. IIS-0953219. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Professor Xiaojin Zhu in Computer Sciences at the University of Wisconsin-Madison is the recipient of a 2010 Faculty Early Career Development Award (CAREER) from the National Science Foundation, a five-year grant designed to boost young faculty in establishing integrated research and educational activities while helping to address areas of important need. Zhu's CAREER project is titled "Using Machine Learning to Understand and Enhance Human Learning Capacity." His project aims to discover the common mathematical principles that govern learning in both humans and computers. Examples include rigorous generalization error bounds (how well can a student or a robot generalize what the teacher taught to new problems?), sparsity (how well can the student or robot identify a few salient features of a problem, out of a haystack of irrelevant features?), and active learning (can the student or robot ask good questions to speed up its own learning?). He expects the project will lead to novel computational approaches to enhance human learning in and out of classrooms, and advance machine learning by incorporating insights on tasks where humans excel.

This project is based upon work supported by the National Science

Foundation under Grant No. IIS-0953219. Any opinions, findings, and conclusions or

recommendations expressed in this material are those of the authors and do not

necessarily reflect the views of the National Science Foundation.

Human teaching strategies:

We discovered interesting discrepancies between computational-theoretically optimal teaching strategies and actual human teaching behaviors. We considered a concept class of 1D threshold classifiers. To learn a target concept to a given accuracy from i.i.d. training items, a learner needs a polynomial number of items (i.e., a lot). If the learner can do active learning, it needs a logarithmic number of items (i.e., a few) -- Note the oracle waits for the learner to pick query items. However, if the oracle picks the training items for the learner, two items suffice: one positive and one negative right around the threshold. Such optimal teaching strategy is captured by a branch of computational learning theory known as teaching dimensions. However, our behavioral experiments where humans play the role of the oracle teacher in this setting revealed that human teaching behaviors differ dramatically from the optimal strategy predicted by teaching dimensions. In particular, a significant number of human teachers seem to follow the so-called "curriculum learning" strategy by first teaching extreme items at both ends of the 1D range, and then zigzagging toward the threshold. We developed a theoretical explanation which suggests that such teaching behaviors might be optimal after all. The explanation is based on risk minimization under certain assumptions. [NIPS 2011a]

Human teaching strategies:

We discovered interesting discrepancies between computational-theoretically optimal teaching strategies and actual human teaching behaviors. We considered a concept class of 1D threshold classifiers. To learn a target concept to a given accuracy from i.i.d. training items, a learner needs a polynomial number of items (i.e., a lot). If the learner can do active learning, it needs a logarithmic number of items (i.e., a few) -- Note the oracle waits for the learner to pick query items. However, if the oracle picks the training items for the learner, two items suffice: one positive and one negative right around the threshold. Such optimal teaching strategy is captured by a branch of computational learning theory known as teaching dimensions. However, our behavioral experiments where humans play the role of the oracle teacher in this setting revealed that human teaching behaviors differ dramatically from the optimal strategy predicted by teaching dimensions. In particular, a significant number of human teachers seem to follow the so-called "curriculum learning" strategy by first teaching extreme items at both ends of the 1D range, and then zigzagging toward the threshold. We developed a theoretical explanation which suggests that such teaching behaviors might be optimal after all. The explanation is based on risk minimization under certain assumptions. [NIPS 2011a]

Yada:

An important problem in cognitive psychology is to quantify the perceived similarities between stimuli. Previous work attempted to address this problem with multi-dimensional scaling (MDS) and its variants. However, the required input to those algorithms is not always easy to obtain in reality. We proposed Yada, a novel general metric learning procedure based on two-alternative forced-choice behavioral experiments. Our method learns forward and backward nonlinear mappings between an objective space in which the stimuli are defined by the standard feature vector representation, and a subjective space in which the distance between a pair of stimuli corresponds to their perceived similarity. We conduct experiments on both synthetic and real human behavioral datasets to assess the effectiveness of Yada. The results show that Yada outperforms several standard embedding and metric learning algorithms, both in terms of likelihood and recovery error. [TIST 2011]

Yada:

An important problem in cognitive psychology is to quantify the perceived similarities between stimuli. Previous work attempted to address this problem with multi-dimensional scaling (MDS) and its variants. However, the required input to those algorithms is not always easy to obtain in reality. We proposed Yada, a novel general metric learning procedure based on two-alternative forced-choice behavioral experiments. Our method learns forward and backward nonlinear mappings between an objective space in which the stimuli are defined by the standard feature vector representation, and a subjective space in which the distance between a pair of stimuli corresponds to their perceived similarity. We conduct experiments on both synthetic and real human behavioral datasets to assess the effectiveness of Yada. The results show that Yada outperforms several standard embedding and metric learning algorithms, both in terms of likelihood and recovery error. [TIST 2011]

Test-Item Effect:

We have found that human categorization is strongly influenced by unlabeled test items. Here is an analogy. Imagine two luggage screening officers who received exactly the same training (i.e., same labeled data), and thus had the same idea as to what luggage are dangerous and what are not (i.e., the binary label). Now they each individually screen different luggage (i.e., different unlabeled data), but *without feedback of the true labels on those luggage*. Interestingly, this is sufficient to influence their internal decision boundary, to the extent that they may disagree on the label of certain luggage. We were able to quantify this "test item effect" using semi-supervised machine learning models [Cognition 2011, ICML 2010].

Fold.all:

Want to add all kinds of domain knowledge (e.g., word-must-in-this-topic, word-must-not-in-that-topic, if-this-then-that, etc.) to latent Dirichlet allocation (LDA)? As long as you can write it in first-order logic (FOL), our fold.all model can combine your logical knowledge base with LDA for you via stochastic gradient descent. The resulting topics are guided by both the logic and the data statistics. You never have to derive a customized LDA variant again (disclaimer: read the paper). In other words, fold.all is to LDA what constrained clustering is to clustering.

[IJCAI 2011]

OASIS and matrix completion: We have proposed two novel semi-supervised learning models that can learn incrementally from an unlimited data stream and from data with missing entries, abilities that are important for explaining human learning. [AAAI 2011, NIPS 2010]

Parent-child word learning:

We have had some initial success in identifying the important factors in children's early word learning using a sparse regression model. [CogSci 2011]
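The features and exact formulation of the CogSci 2011 model are not given here. As a generic illustration, the Python sketch below fits the lasso, a standard sparse regression model, by cyclic coordinate descent. The L1 penalty `lam` drives the weights of uninformative predictors to exactly zero, which is what lets the few remaining nonzero weights be read as the "important factors". The toy data are hypothetical.

```python
from typing import List

def soft_threshold(z: float, t: float) -> float:
    """Proximal operator of the L1 norm: shrink z toward 0 by t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def lasso_cd(X: List[List[float]], y: List[float], lam: float, n_iters: int = 200) -> List[float]:
    """Minimize (1/2n)||y - Xw||^2 + lam * ||w||_1 by cyclic coordinate descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    residual = list(y)  # y - Xw, with w = 0 initially
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(d)]
    for _ in range(n_iters):
        for j in range(d):
            if col_sq[j] == 0.0:
                continue  # an all-zero feature keeps weight 0
            # Correlation of feature j with the partial residual (w_j's contribution added back).
            rho = sum(X[i][j] * (residual[i] + X[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= X[i][j] * delta
                w[j] = new_wj
    return w
```

On toy data where `y = 2 * x0` and the second feature is orthogonal to both, `lam=0.1` gives weights of about `[1.9, 0.0]`: the irrelevant feature is zeroed out, and the relevant weight is shrunk by `lam`. A large enough `lam` (here, above 2) zeroes every weight.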

Human manifold learning:

Humans can learn the two-moon dataset, if we give them 4 (but not 2) labeled points and clue them in on the graph.

[NIPS 2010]
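Graph-based semi-supervised learning is the machine-learning counterpart of this experiment. The Python sketch below computes the harmonic-function solution: labeled nodes are clamped, and each unlabeled node is repeatedly set to the weighted average of its neighbors until the values settle. It illustrates the general idea only and is not the model or stimuli from the NIPS 2010 paper; the toy graph of two chains joined by one weak edge is a hypothetical stand-in for the two moons.

```python
from typing import Dict, List

def harmonic_labels(W: List[List[float]], labels: Dict[int, float], n_iters: int = 2000) -> List[float]:
    """Harmonic-function label propagation by iterative (Gauss-Seidel) relaxation.

    W is a symmetric weight matrix with zero diagonal; `labels` maps clamped
    node indices to their label values (e.g., 1.0 and 0.0).
    """
    n = len(W)
    f = [labels.get(i, 0.5) for i in range(n)]  # unlabeled nodes start undecided
    for _ in range(n_iters):
        for i in range(n):
            if i in labels:
                continue  # labeled nodes stay clamped
            deg = sum(W[i])
            if deg > 0:
                f[i] = sum(W[i][j] * f[j] for j in range(n)) / deg
    return f

# Two chains, 0-1-2 and 3-4-5 (edge weight 1), joined by a weak bridge 2-3 (weight 0.01).
W = [[0.0] * 6 for _ in range(6)]
for a, b, wt in [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 0.01), (3, 4, 1.0), (4, 5, 1.0)]:
    W[a][b] = W[b][a] = wt
f = harmonic_labels(W, {0: 1.0, 5: 0.0})
```

Here `f` comes out at roughly `[1.0, 0.99, 0.98, 0.02, 0.01, 0.0]`: each label spreads along its own chain but barely crosses the weak bridge, which is the behavior a learner who follows the graph, rather than straight-line distance, should show.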

Human Co-Training:

We turned the co-training algorithm into a human collaboration policy in which two people learn a concept together. Importantly, each person sees only half of the features, and the two communicate by exchanging labels. We show that this policy leads to unique nonlinear decision boundaries that would have been difficult to learn had the two people either fully collaborated or not collaborated at all. [AAAI 2011]
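A Python sketch of the co-training idea behind this policy: two learners, each seeing one feature (view) of 2-D items, take turns labeling the unlabeled item they are most confident about and adding it to a shared labeled set, so each learner trains on labels produced by the other. The midpoint-of-class-means threshold learner, the "farthest from my threshold" confidence rule, and the data are illustrative assumptions, not the AAAI 2011 experimental procedure.

```python
from typing import List, Tuple

Item = Tuple[float, float]  # (feature seen by learner 0, feature seen by learner 1)

def threshold(points: List[Tuple[float, int]]) -> float:
    """1-D learner: midpoint between class means (class 1 assumed on the high side)."""
    xs0 = [x for x, y in points if y == 0]
    xs1 = [x for x, y in points if y == 1]
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2

def co_train(labeled: List[Tuple[Item, int]], unlabeled: List[Item], rounds: int) -> Tuple[float, float]:
    """Alternate between the two views; each view labels one item per turn for the shared pool."""
    pool = list(unlabeled)
    lab = list(labeled)
    for _ in range(rounds):
        for view in (0, 1):
            if not pool:
                break
            t = threshold([(item[view], y) for item, y in lab])
            # Most confident item for this learner: farthest from its own threshold.
            best = max(pool, key=lambda item: abs(item[view] - t))
            pool.remove(best)
            lab.append((best, 1 if best[view] > t else 0))  # label shared with the other learner
    return (threshold([(item[0], y) for item, y in lab]),
            threshold([(item[1], y) for item, y in lab]))
```

With labeled items `((0, 0), 0)` and `((1, 1), 1)` and unlabeled items `[(2.0, 0.4), (0.4, -1.0)]`, one round yields thresholds of 0.85 for the first view and 0.1 for the second. The first learner labels `(2.0, 0.4)` as class 1 even though the second learner, on its own, would have put 0.4 below its initial threshold of 0.5; that exchanged label is what moves the second learner's boundary.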

Computer Reverse Psychology:

We programmed a computer to apply reverse psychology to humans, in the hope of correcting irrational human behaviors in a multi-armed bandit setting. We found that humans are not strongly influenced by this naive application of reverse psychology [NIPS 2010 workshop]. This finding constrains future persuasive strategies and deserves further research.

Professor Xiaojin Zhu in Computer Sciences at the University of Wisconsin-Madison is the recipient of a 2010 Faculty Early Career Development Award (CAREER) from the National Science Foundation, a five-year grant designed to boost young faculty in establishing integrated research and educational activities while helping to address areas of important need. Zhu's CAREER project is titled "Using Machine Learning to Understand and Enhance Human Learning Capacity." His project aims to discover the common mathematical principles that govern learning in both humans and computers. Examples include rigorous generalization error bounds (how well can a student or a robot generalize what the teacher taught to new problems?), sparsity (how well can the student or robot identify a few salient features of a problem, out of a haystack of irrelevant features?), and active learning (can the student or robot ask good questions to speed up its own learning?). He expects the project will lead to novel computational approaches to enhance human learning in and out of classrooms, and advance machine learning by incorporating insights on tasks where humans excel.

This project is based upon work supported by the National Science Foundation under Grant No. IIS-0953219. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.
