
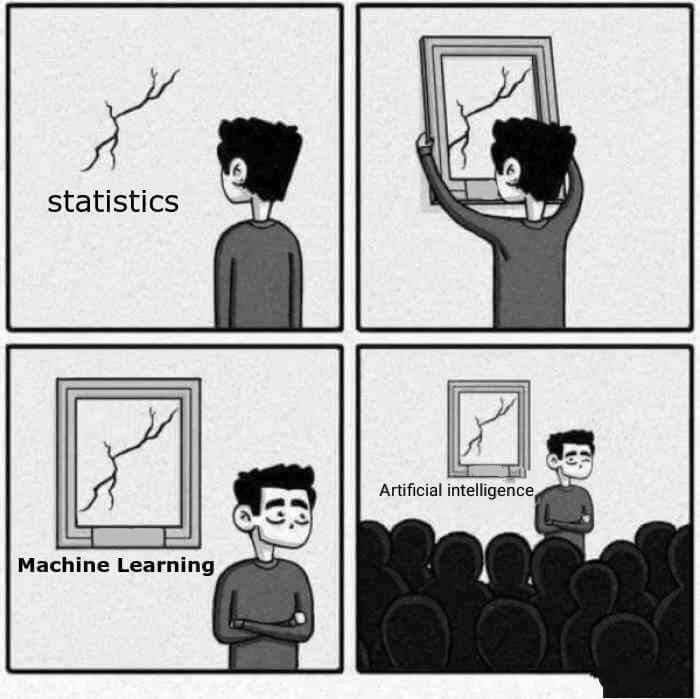

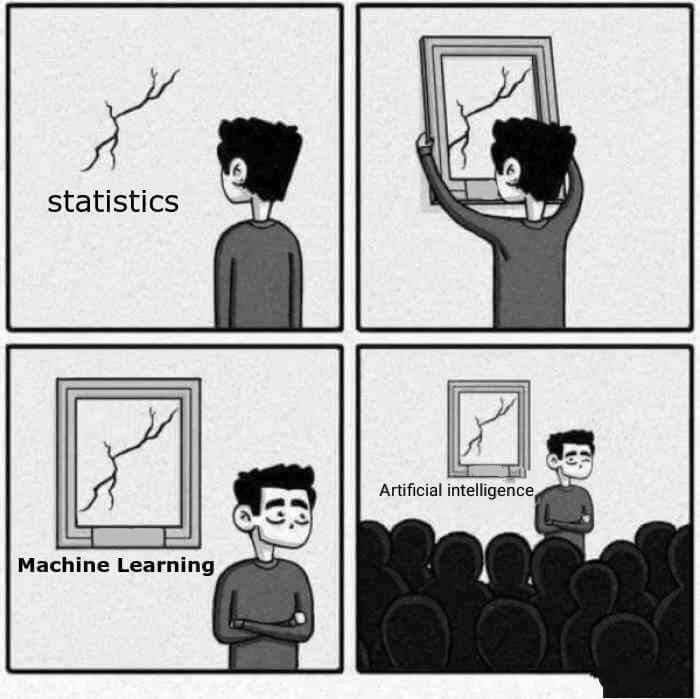

Image by sandserifcomics via towards data science


# Summary

📗 Monday lecture: 5:30 to 8:30, Zoom Link

📗 Office hours: 5:30 to 8:30 Wednesdays (Dune) and Thursdays (Zoom Link)

📗 Personal meeting room: always open, Zoom Link

📗 Quiz (log in with your wisc ID, without "@wisc.edu"): Socrative Link. Regrade request form: Google Form (select Q1).

📗 Math Homework:

M1, M2

📗 Programming Homework:

P1

📗 Examples, Quizzes, Discussions:

Q1

# Lectures

📗 Slides (before lecture, usually updated on Saturday):

Blank Slides: Part 1, Part 2

Blank Slides (with blank pages for quiz questions): Part 1, Part 2

📗 Slides (after lecture, usually updated on Tuesday):

Blank Slides with Quiz Questions: Part 1, Part 2

Annotated Slides: Part 1, Part 2

📗 My handwriting is really bad, so copy down your own notes from the lecture videos instead of relying on these.

📗 Notes

Image by sandserifcomics via towards data science

N/A

# Other Materials

📗 Pre-recorded Videos from 2020

Part 1 (Supervised learning): Link

Part 2 (Perceptron learning): Link

Part 3 (Loss functions): Link

Part 4 (Logistic regression): Link

Part 5 (Convexity): Link

📗 Relevant websites

Which face is real? Link

This X does not exist: Link

Turtle or Rifle: Link

Art or garbage game: Link

Guess two-thirds of the average? Link

Gradient Descent: Link

Optimization: Link

Neural Network: Link

Generative Adversarial Net: Link

📗 YouTube videos from 2019 to 2021

Why does the (batch) perceptron algorithm work? Link

Why can't we use linear regression for binary classification? Link

Why does gradient descent work? Link

How to derive the logistic regression gradient descent step formula? Link

Example (Quiz): Perceptron update formula Link

Example (Quiz): Gradient descent for logistic activation with squared error Link

Example (Quiz): Computation of Hessian of quadratic form Link

Example (Quiz): Computation of eigenvalues Link

Example (Homework): Gradient descent for linear regression Link

Video going through M1: Link

📗 Math and Statistics Review

Checklist: Link, "math crib sheet": Link

Multivariate Calculus: Textbook, Chapter 16 and/or (Economics) Tutorials, Chapters 2 and 3.

Linear Algebra: Textbook, Chapters on Determinant and Eigenvalue.

Probability and Statistics: Textbook, Chapters 3, 4, 5.

# Keywords and Notations

📗 Supervised Learning:

Training item: \(\left(x_{i}, y_{i}\right)\), where \(i \in \left\{1, 2, ..., n\right\}\) is the instance index, \(x_{ij}\) is the feature \(j\) of instance \(i\), \(j \in \left\{1, 2, ..., m\right\}\) is the feature index, \(x_{i} = \left(x_{i1}, x_{i2}, ..., x_{im}\right)\) is the feature vector of instance \(i\), and \(y_{i}\) is the true label of instance \(i\).

Test item: \(\left(x', y'\right)\), where \(x' = \left(x'_{1}, x'_{2}, ..., x'_{m}\right)\) is the feature vector of the test item, \(j \in \left\{1, 2, ..., m\right\}\) is the feature index, and \(y'\) is the true label of the test item.

📗 Linear Threshold Unit, Linear Perceptron:

LTU Classifier: \(\hat{y}_{i} = 1_{\left\{w^\top x_{i} + b \geq 0\right\}}\), where \(w = \left(w_{1}, w_{2}, ..., w_{m}\right)\) is the weight vector, \(b\) is the bias, \(x_{i} = \left(x_{i1}, x_{i2}, ..., x_{im}\right)\) is the feature vector of instance \(i\), and \(\hat{y}_{i}\) is the predicted label of instance \(i\).

Perceptron algorithm update step: \(w = w - \alpha \left(a_{i} - y_{i}\right) x_{i}\), \(b = b - \alpha \left(a_{i} - y_{i}\right)\), \(a_{i} = 1_{\left\{w^\top x_{i} + b \geq 0\right\}}\), where \(a_{i}\) is the activation value of instance \(i\) and \(\alpha\) is the learning rate.
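The perceptron update step can be sketched in a few lines of NumPy. This is a minimal illustration, not the course's reference implementation: the function names `perceptron_train` and `ltu_predict`, the zero initialization, the fixed number of passes `epochs`, and the toy AND data set are all assumptions made for the example.

```python
import numpy as np

def perceptron_train(X, y, alpha=1.0, epochs=20):
    """Hypothetical helper: repeatedly apply the perceptron update step."""
    n, m = X.shape
    w = np.zeros(m)  # assumption: weights start at zero
    b = 0.0          # assumption: bias starts at zero
    for _ in range(epochs):
        for i in range(n):
            # a_i = 1 if w^T x_i + b >= 0, else 0
            a_i = 1.0 if w @ X[i] + b >= 0 else 0.0
            # w = w - alpha (a_i - y_i) x_i,  b = b - alpha (a_i - y_i)
            w = w - alpha * (a_i - y[i]) * X[i]
            b = b - alpha * (a_i - y[i])
    return w, b

def ltu_predict(X, w, b):
    """LTU classifier: predict 1 where w^T x + b >= 0, else 0."""
    return (X @ w + b >= 0).astype(int)

# Toy data (assumption): the logical AND of two binary features.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)

w, b = perceptron_train(X, y)
predictions = ltu_predict(X, w, b)
print(predictions)
```

Because the AND data is linearly separable, the updates stop changing `w` and `b` once every instance is classified correctly, and the predictions then match `y`.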

📗 Loss Function:

Zero-one loss minimization: \(\hat{f} = \mathop{\mathrm{argmin}}_{f \in \mathcal{H}} \displaystyle\sum_{i=1}^{n} 1_{\left\{f\left(x_{i}\right) \neq y_{i}\right\}}\), where \(\hat{f}\) is the optimal classifier and \(\mathcal{H}\) is the hypothesis space (the set of functions to choose from).

Squared loss minimization of perceptrons: \(\left(\hat{w}, \hat{b}\right) = \mathop{\mathrm{argmin}}_{w, b} \dfrac{1}{2} \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2}\), \(a_{i} = g\left(w^\top x_{i} + b\right)\), where \(\hat{w}\) is the vector of optimal weights, \(\hat{b}\) is the optimal bias, and \(g\) is the activation function.
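To make the two objectives concrete, the sketch below evaluates both losses on a handful of made-up activation values. The helper names, the example numbers, and the 0.5 threshold used to turn activations into labels are assumptions for illustration only.

```python
import numpy as np

def zero_one_loss(y_pred, y):
    """Number of instances where the predicted label differs from the true label."""
    return int(np.sum(y_pred != y))

def squared_loss(a, y):
    """Half the sum of squared differences between activations and labels."""
    return 0.5 * float(np.sum((a - y) ** 2))

# Hypothetical true labels and activation values for four instances.
y = np.array([1, 0, 1, 1])
a = np.array([0.9, 0.4, 0.3, 0.6])

# Assumption: convert activations to labels by thresholding at 0.5.
y_pred = (a >= 0.5).astype(int)

zero_one = zero_one_loss(y_pred, y)  # only the third instance is misclassified
squared = squared_loss(a, y)         # 0.5 * (0.01 + 0.16 + 0.49 + 0.16)
print(zero_one, squared)
```

The zero-one loss only counts mistakes, so it is flat almost everywhere in \(w\) and \(b\), while the squared loss changes smoothly with the activations whenever \(g\) is differentiable.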

📗 Logistic Regression:

Logistic regression classifier: \(\hat{y}_{i} = 1_{\left\{a_{i} \geq 0.5\right\}}\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\).

Loss minimization problem: \(\left(\hat{w}, \hat{b}\right) = \mathop{\mathrm{argmin}}_{w, b} -\displaystyle\sum_{i=1}^{n} \left(y_{i} \log\left(a_{i}\right) + \left(1 - y_{i}\right) \log\left(1 - a_{i}\right)\right)\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\).
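The gradient of this loss can be worked out with the chain rule. The symbols \(C\) (the loss value) and \(z_{i} = w^\top x_{i} + b\) are introduced here only for the derivation and are not part of the notation above.

\[
C = -\sum_{i=1}^{n} \left(y_{i} \log\left(a_{i}\right) + \left(1 - y_{i}\right) \log\left(1 - a_{i}\right)\right), \qquad a_{i} = \dfrac{1}{1 + \exp\left(-z_{i}\right)}, \qquad z_{i} = w^\top x_{i} + b
\]

\[
\dfrac{\partial C}{\partial a_{i}} = -\dfrac{y_{i}}{a_{i}} + \dfrac{1 - y_{i}}{1 - a_{i}} = \dfrac{a_{i} - y_{i}}{a_{i} \left(1 - a_{i}\right)}, \qquad \dfrac{\partial a_{i}}{\partial z_{i}} = a_{i} \left(1 - a_{i}\right)
\]

\[
\dfrac{\partial C}{\partial z_{i}} = \dfrac{\partial C}{\partial a_{i}} \cdot \dfrac{\partial a_{i}}{\partial z_{i}} = a_{i} - y_{i}
\]

\[
\dfrac{\partial C}{\partial w} = \sum_{i=1}^{n} \left(a_{i} - y_{i}\right) x_{i}, \qquad \dfrac{\partial C}{\partial b} = \sum_{i=1}^{n} \left(a_{i} - y_{i}\right)
\]

Moving \(w\) and \(b\) against these gradients, scaled by a learning rate \(\alpha\), gives a gradient descent step for logistic regression.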

Batch gradient descent step: \(w = w - \alpha \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right) x_{i}\), \(b = b - \alpha \displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)\), \(a_{i} = \dfrac{1}{1 + \exp\left(- \left(w^\top x_{i} + b\right)\right)}\), where \(\alpha\) is the learning rate.
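A minimal NumPy sketch of this step is given here, assuming zero initialization, a fixed number of steps, and a tiny one-feature data set invented for the example. The names `sigmoid`, `cross_entropy`, and `batch_gradient_descent` are hypothetical helpers, and the clipping constant `eps` is only there to avoid taking the log of zero.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def cross_entropy(a, y, eps=1e-12):
    """Logistic regression loss; activations are clipped to avoid log(0)."""
    a = np.clip(a, eps, 1.0 - eps)
    return float(-np.sum(y * np.log(a) + (1.0 - y) * np.log(1.0 - a)))

def batch_gradient_descent(X, y, alpha=0.1, steps=2000):
    """Hypothetical helper: repeat the batch gradient descent step."""
    n, m = X.shape
    w = np.zeros(m)  # assumption: weights start at zero
    b = 0.0          # assumption: bias starts at zero
    losses = []
    for _ in range(steps):
        a = sigmoid(X @ w + b)              # a_i for every instance
        losses.append(cross_entropy(a, y))  # loss before this step
        w = w - alpha * (X.T @ (a - y))     # w = w - alpha sum (a_i - y_i) x_i
        b = b - alpha * np.sum(a - y)       # b = b - alpha sum (a_i - y_i)
    return w, b, losses

# Toy data (assumption): one feature, label 1 for the larger values.
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])

w, b, losses = batch_gradient_descent(X, y)
y_hat = (sigmoid(X @ w + b) >= 0.5).astype(int)
print(y_hat, losses[0], losses[-1])
```

With a sufficiently small learning rate the recorded loss decreases from one step to the next, and on this separable toy data the thresholded predictions end up matching the labels. On other data sets `alpha` and `steps` would need to be tuned.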

Last Updated: January 19, 2026 at 9:18 PM