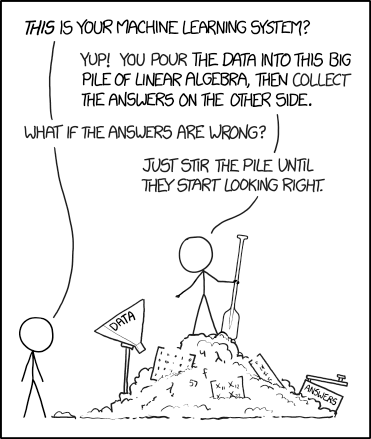

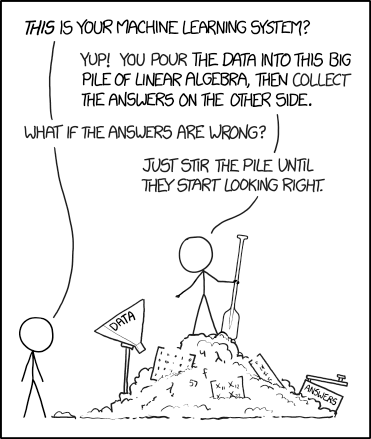

Image by xkcd via towards data science


# Summary

📗 Monday lecture: 5:30 to 8:30, Zoom Link

📗 Office hours: 5:30 to 8:30 Wednesdays (Dune) and Thursdays (Zoom Link)

📗 Personal meeting room: always open, Zoom Link

📗 Quiz (use your wisc ID to log in (without "@wisc.edu")): Socrative Link, Regrade request form: Google Form (select Q3).

📗 Math Homework: M3

📗 Programming Homework: P1

📗 Examples, Quizzes, Discussions: Q3

# Lectures

📗 Slides (before lecture, usually updated on Saturday):

Blank Slides: Part 1, Part 2

Blank Slides (with blank pages for quiz questions): Part 1, Part 2

📗 Slides (after lecture, usually updated on Tuesday):

Blank Slides with Quiz Questions: Part 1, Part 2

Annotated Slides: Part 1, Part 2

📗 My handwriting is really bad, so you should take your own notes from the lecture videos instead of relying on these.

📗 Notes

Image by xkcd via towards data science

N/A

# Other Materials

📗 Pre-recorded Videos from 2020

Part 1 (Support Vector Machines): Link

Part 2 (Subgradient Descent): Link

Part 3 (Kernel Trick): Link

Part 4 (Decision Tree): Link

Part 5 (Random Forest): Link

Part 6 (Nearest Neighbor): Link

📗 Relevant websites

Support Vector Machine: Link

RBF Kernel SVM Demo: Link

Decision Tree: Link

Random Forest Demo: Link

K Nearest Neighbor: Link

Map of Manhattan: Link

Voronoi Diagram: Link

KD Tree: Link

📗 YouTube videos from 2019 to 2021

How to find the margin expression for SVM? Link

Why does the kernel trick work? Link

Example (Quiz): Compute SVM classifier Link

Example (Quiz): Kernel SVM for XOR operator Link

Example (Quiz): Kernel matrix to feature vector Link

Example (Quiz): Entropy computation Link

Example (Quiz): Decision tree for implication operator Link

Example (Quiz): Three nearest neighbor Link

# Keywords and Notations

📗 Support Vector Machine

SVM classifier: \(\hat{y}_{i} = 1_{\left\{w^\top x_{i} + b \geq 0\right\}}\).

Hard margin, original max-margin formulation: \(\displaystyle\max_{w} \dfrac{2}{\sqrt{w^\top w}}\) such that \(w^\top x_{i} + b \leq -1\) if \(y_{i} = 0\) and \(w^\top x_{i} + b \geq 1\) if \(y_{i} = 1\).

Hard margin, simplified formulation: \(\displaystyle\min_{w} \dfrac{1}{2} w^\top w\) such that \(\left(2 y_{i} - 1\right)\left(w^\top x_{i} + b\right) \geq 1\).
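As a small illustration of the hard-margin constraint and the margin width \(2 / \sqrt{w^\top w}\), here is a minimal sketch in plain Python. The toy data set and the candidate \(w\), \(b\) are made up for illustration and are not from the lecture.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def margin_width(w):
    """Width of the margin, 2 / sqrt(w^T w)."""
    return 2.0 / math.sqrt(dot(w, w))

def satisfies_hard_margin(w, b, xs, ys):
    """True if (2 y_i - 1)(w^T x_i + b) >= 1 for every training point."""
    return all((2 * y - 1) * (dot(w, x) + b) >= 1 for x, y in zip(xs, ys))

# Hypothetical toy data: label 0 on the left, label 1 on the right.
xs = [(0.0, 0.0), (0.0, 1.0), (2.0, 0.0), (2.0, 1.0)]
ys = [0, 0, 1, 1]

w, b = (1.0, 0.0), -1.0      # candidate separator x_1 = 1
feasible = satisfies_hard_margin(w, b, xs, ys)
width = margin_width(w)
print(feasible, width)
```

Scaling \(w\) and \(b\) down (for example to \((0.5, 0)\) and \(-0.5\)) describes the same line but violates the constraint, which is why the constraint fixes the scale of \(w\).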

Soft margin, original max-margin formulation: \(\displaystyle\min_{w} \dfrac{1}{2} w^\top w + \dfrac{1}{\lambda} \dfrac{1}{n} \displaystyle\sum_{i=1}^{n} \xi_{i}\) such that \(\left(2 y_{i} - 1\right)\left(w^\top x_{i} + b\right) \geq 1 - \xi_{i}, \xi_{i} \geq 0\), where \(\xi_{i}\) is the slack variable for instance \(i\) and \(\lambda\) is the regularization parameter.

Soft margin, simplified formulation: \(\displaystyle\min_{w} \dfrac{\lambda}{2} w^\top w + \dfrac{1}{n} \displaystyle\sum_{i=1}^{n} \displaystyle\max\left\{0, 1 - \left(2 y_{i} - 1\right) \left(w^\top x_{i} + b\right)\right\}\)

Subgradient descent formula (one step on instance \(i\) with learning rate \(\alpha\)): \(w = \left(1 - \alpha \lambda\right) w + \alpha \left(2 y_{i} - 1\right) 1_{\left\{\left(2 y_{i} - 1\right) \left(w^\top x_{i} + b\right) < 1\right\}} x_{i}\).
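The following is a minimal sketch of subgradient descent on the simplified soft-margin objective, under stated assumptions. It uses the usual hinge-loss subgradient step: shrink \(w\) by \(\left(1 - \alpha \lambda\right)\), and when the margin \(\left(2 y_{i} - 1\right)\left(w^\top x_{i} + b\right)\) is below 1, add \(\alpha \left(2 y_{i} - 1\right) x_{i}\). The bias update, the toy data, and the values of `lam`, `alpha`, and `epochs` are assumptions chosen for illustration, not taken from the lecture.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def objective(w, b, xs, ys, lam):
    """(lam / 2) w^T w plus the average hinge loss, with labels y in {0, 1}."""
    hinge = sum(max(0.0, 1.0 - (2 * y - 1) * (dot(w, x) + b))
                for x, y in zip(xs, ys))
    return 0.5 * lam * dot(w, w) + hinge / len(xs)

def subgradient_step(w, b, x, y, lam, alpha):
    """One subgradient step on a single instance (x, y)."""
    z = 2 * y - 1                               # map label {0, 1} to {-1, +1}
    violated = z * (dot(w, x) + b) < 1          # hinge loss is active
    push = alpha * z if violated else 0.0
    w_new = [(1 - alpha * lam) * wj + push * xj for wj, xj in zip(w, x)]
    b_new = b + push                            # assumption: unregularized bias
    return w_new, b_new

def train(xs, ys, lam=0.01, alpha=0.1, epochs=200):
    """Cycle through the data in a fixed order for a fixed number of epochs."""
    w, b = [0.0] * len(xs[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            w, b = subgradient_step(w, b, x, y, lam, alpha)
    return w, b

# Hypothetical toy data: label 0 on the left, label 1 on the right.
xs = [(0.0, 0.0), (0.0, 1.0), (2.0, 0.0), (2.0, 1.0)]
ys = [0, 0, 1, 1]

start = objective([0.0, 0.0], 0.0, xs, ys, lam=0.01)
w, b = train(xs, ys)
final = objective(w, b, xs, ys, lam=0.01)
predictions = [int(dot(w, x) + b >= 0) for x in xs]
print(start, final, predictions)
```

With a constant learning rate the iterates keep moving around the minimizer rather than settling exactly on it; a decreasing learning rate is a common alternative.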

📗 Kernel Trick

Kernel SVM classifier: \(\hat{y}_{i} = 1_{\left\{w^\top \varphi\left(x_{i}\right) + b \geq 0\right\}}\), where \(\varphi\) is the feature map.

Kernel (Gram) matrix: \(K_{i i'} = \varphi\left(x_{i}\right)^\top \varphi\left(x_{i'}\right)\).

Quadratic Kernel: \(K_{i i'} = \left(x_{i}^\top x_{i'} + 1\right)^{2}\) has, for two-dimensional \(x_{i} = \left(x_{i1}, x_{i2}\right)\), the feature representation \(\varphi\left(x_{i}\right) = \left(x_{i1}^{2}, x_{i2}^{2}, \sqrt{2} x_{i1} x_{i2}, \sqrt{2} x_{i1}, \sqrt{2} x_{i2}, 1\right)\).
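A quick numerical check can make the kernel trick concrete: for two-dimensional inputs, the quadratic kernel computed directly should equal the inner product of the six-dimensional feature vectors. This is a minimal sketch; the two test points are arbitrary.

```python
import math

def quadratic_kernel(x, xp):
    """(x^T x' + 1)^2 for two-dimensional x and x'."""
    return (x[0] * xp[0] + x[1] * xp[1] + 1.0) ** 2

def phi(x):
    """Explicit feature map of the quadratic kernel in two dimensions."""
    r2 = math.sqrt(2.0)
    return (x[0] ** 2, x[1] ** 2, r2 * x[0] * x[1], r2 * x[0], r2 * x[1], 1.0)

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

x, xp = (1.0, 2.0), (3.0, -1.0)        # arbitrary example points
k_direct = quadratic_kernel(x, xp)     # kernel evaluated on the inputs
k_features = dot(phi(x), phi(xp))      # inner product in feature space
print(k_direct, k_features)
```

The direct evaluation never builds \(\varphi\), which is the point of the kernel trick: the cost depends on the input dimension, not the feature dimension.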

Gaussian RBF Kernel: \(K_{i i'} = \exp\left(- \dfrac{1}{2 \sigma^{2}} \left(x_{i} - x_{i'}\right)^\top \left(x_{i} - x_{i'}\right)\right)\) has an infinite-dimensional feature representation, where \(\sigma^{2}\) is the variance parameter.
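Because the RBF feature map is infinite-dimensional, the kernel matrix is computed directly from the inputs. The sketch below builds the Gram matrix for a few made-up points with an assumed \(\sigma = 1\).

```python
import math

def rbf_kernel(x, xp, sigma):
    """exp(-||x - x'||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, xp))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def gram_matrix(xs, sigma):
    """Kernel matrix K with K[i][j] = rbf_kernel(xs[i], xs[j])."""
    return [[rbf_kernel(a, b, sigma) for b in xs] for a in xs]

xs = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]   # hypothetical points
K = gram_matrix(xs, sigma=1.0)
for row in K:
    print(row)
```

The diagonal is always 1, and entries shrink toward 0 as points move apart; a smaller \(\sigma\) makes that decay faster.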

📗 Information Theory:

Entropy: \(H\left(Y\right) = -\displaystyle\sum_{y=1}^{K} p_{y} \log_{2} \left(p_{y}\right)\), where \(K\) is the number of classes (number of possible labels), \(p_{y}\) is the fraction of data points with label \(y\).

Conditional entropy: \(H\left(Y | X\right) = -\displaystyle\sum_{x=1}^{K_{X}} p_{x} \displaystyle\sum_{y=1}^{K} p_{y|x} \log_{2} \left(p_{y|x}\right)\), where \(K_{X}\) is the number of possible values of the feature \(X\), \(p_{x}\) is the fraction of data points with feature value \(x\), and \(p_{y|x}\) is the fraction of data points with label \(y\) among those with feature value \(x\).

Information gain, for feature \(j\): \(I\left(Y | X_{j}\right) = H\left(Y\right) - H\left(Y | X_{j}\right)\).
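The three quantities above can be computed from counts. As a minimal sketch, the example below uses the truth table of the implication operator (label 1 unless \(x_{1} = 1\) and \(x_{2} = 0\)) as a four-point data set; the function names are illustrative.

```python
import math
from collections import Counter

def entropy(labels):
    """H(Y) in bits, using empirical label fractions."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def conditional_entropy(values, labels):
    """H(Y | X): entropy of the labels within each feature value, weighted by p_x."""
    groups = {}
    for x, y in zip(values, labels):
        groups.setdefault(x, []).append(y)
    n = len(labels)
    return sum(len(g) / n * entropy(g) for g in groups.values())

def information_gain(values, labels):
    """I(Y | X) = H(Y) - H(Y | X)."""
    return entropy(labels) - conditional_entropy(values, labels)

# Truth table of x1 -> x2.
x1 = [0, 0, 1, 1]
x2 = [0, 1, 0, 1]
y = [1, 1, 0, 1]

h_y = entropy(y)
h_y_given_x1 = conditional_entropy(x1, y)
gain_x1 = information_gain(x1, y)
gain_x2 = information_gain(x2, y)
print(h_y, h_y_given_x1, gain_x1, gain_x2)
```

Here both features give the same information gain, so either could be chosen for the first split.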

📗 Decision Tree:

Decision stump classifier: \(\hat{y}_{i} = 1_{\left\{x_{ij} \geq t_{j}\right\}}\), where \(t_{j}\) is the threshold for feature \(j\).

Feature selection: \(j^\star = \mathop{\mathrm{argmax}}_{j} I\left(Y | X_{j}\right)\).
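Feature selection for a decision stump can be sketched as follows: binarize each feature with its threshold, compute the information gain of each split, and keep the feature with the largest gain. The data set and the thresholds are made up for illustration, and thresholds are assumed to be given rather than searched over.

```python
import math
from collections import Counter

def entropy(labels):
    """H(Y) in bits, using empirical label fractions."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(values, labels):
    """H(Y) - H(Y | X) for a discrete feature."""
    groups = {}
    for x, y in zip(values, labels):
        groups.setdefault(x, []).append(y)
    n = len(labels)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional

def stump_predict(x, j, t):
    """Decision stump: predict 1 if feature j is at least the threshold t."""
    return int(x[j] >= t)

def select_feature(xs, ys, thresholds):
    """Return (j_star, gains), where j_star maximizes the information gain."""
    gains = []
    for j, t in enumerate(thresholds):
        split = [stump_predict(x, j, t) for x in xs]
        gains.append(information_gain(split, ys))
    j_star = max(range(len(gains)), key=gains.__getitem__)
    return j_star, gains

# Hypothetical data: feature 0 separates the labels, feature 1 does not.
xs = [(1.0, 5.0), (2.0, 1.0), (6.0, 4.0), (7.0, 2.0)]
ys = [0, 0, 1, 1]
thresholds = (4.0, 3.0)            # assumed threshold t_j for each feature

j_star, gains = select_feature(xs, ys, thresholds)
predictions = [stump_predict(x, j_star, thresholds[j_star]) for x in xs]
print(j_star, gains, predictions)
```

A full decision tree repeats this selection recursively on the subsets of data produced by each split.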

Last Updated: January 19, 2026 at 9:18 PM