Prev: W1 Next: W3

Blank Slides (with blank pages for quiz questions): Part 1, Part 2,

Annotated Slides: Part 1, Part 2,

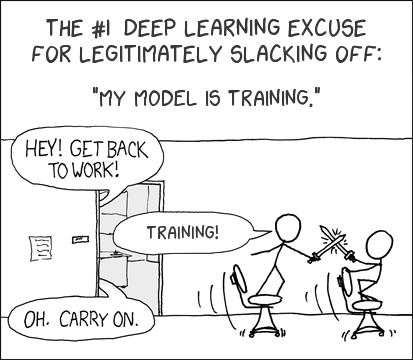

Image by Vishal Arora via medium

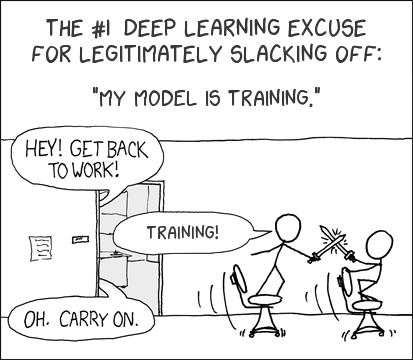

N/A

Part 2 (Backpropogation): Link

Part 3 (Multi-Layer Network): Link

Part 4 (Stochastic Gradient): Link

Part 5 (Multi-Class Classification): Link

Part 6 (Regularization): Link

Another Neural Network Demo: Link

Neural Network Videos by Grant Sanderson: Playlist

MNIST Neural Network Visualization: Link

Neural Network Simulator: Link

Overfitting: Link

Neural Network Snake: Video

Neural Network Car: Video

Neural Network Flappy Bird: Video

Neural Network Mario: Video

MyScript: algorithm Link demo Link

Maple Calculator: Link

How derive 2-layer neural network gradient descent step? Link

How derive multi-layer neural network gradient descent induction step? Link

Comparison between L1 and L2 regularization. Link

Example (Quiz): Cross validation accuracy Link

\(a^{\left(1\right)}_{ij} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j'=1}^{m} x_{ij'} w^{\left(1\right)}_{j'j}\right) + b^{\left(1\right)}_{j}\right)\right)}\), where \(m\) is the number of features (or input units), \(w^{\left(1\right)}_{j' j}\) is the layer \(1\) weight from input unit \(j'\) to hidden layer unit \(j\), \(b^{\left(1\right)}_{j}\) is the bias for hidden layer unit \(j\), \(a_{ij}^{\left(1\right)}\) is the layer \(1\) activation of instance \(i\) hidden unit \(j\).

\(a^{\left(2\right)}_{i} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j=1}^{h} a^{\left(1\right)}_{ij} w^{\left(2\right)}_{j}\right) + b^{\left(2\right)}\right)\right)}\), where \(h\) is the number of hidden units, \(w^{\left(2\right)}_{j}\) is the layer \(2\) weight from hidden layer unit \(j\), \(b^{\left(2\right)}\) is the bias for the output unit, \(a^{\left(2\right)}_{i}\) is the layer \(2\) activation of instance \(i\).

Stochastic gradient descent step for two layer network with squared loss and logistic activation:

\(w^{\left(1\right)}_{j' j} = w^{\left(1\right)}_{j' j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right) x_{ij'}\).

\(b^{\left(1\right)}_{j} \leftarrow b^{\left(1\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right)\).

\(w^{\left(2\right)}_{j} \leftarrow w^{\left(2\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) a_{ij}^{\left(1\right)}\).

\(b^{\left(2\right)} \leftarrow b^{\left(2\right)} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right)\).

L2 regularization (sqaured loss): \(\displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2} + \lambda \left(\displaystyle\sum_{j=1}^{m} \left(w_{j}\right)^{2} + b^{2}\right)\).

# Summary

📗 Monday lecture: 5:30 to 8:30, Zoom Link

📗 Office hours: 5:30 to 8:30 Wednesdays (Dune) and Thursdays (Zoom Link)

📗 Personal meeting room: always open, Zoom Link

📗 Quiz (use your wisc ID to log in (without "@wisc.edu")): Socrative Link, Regrade request form: Google Form (select Q2).

📗 Math Homework:

M1,

M2,

📗 Programming Homework:

P1,

📗 Examples, Quizzes, Discussions:

Q2,

# Lectures

📗 Slides (before lecture, usually updated on Saturday):

Blank Slides:

Part 1,

Part 2,

Blank Slides (with blank pages for quiz questions): Part 1, Part 2,

📗 Slides (after lecture, usually updated on Tuesday):

Blank Slides with Quiz Questions:

Part 1,

Part 2,

Annotated Slides: Part 1, Part 2,

📗 My handwriting is really bad, you should copy down your notes from the lecture videos instead of using these.

📗 Notes

Image by Vishal Arora via medium

N/A

# Other Materials

📗 Pre-recorded Videos from 2020

Part 1 (Neural Network): Link Part 2 (Backpropogation): Link

Part 3 (Multi-Layer Network): Link

Part 4 (Stochastic Gradient): Link

Part 5 (Multi-Class Classification): Link

Part 6 (Regularization): Link

📗 Relevant websites

Neural Network: Link Another Neural Network Demo: Link

Neural Network Videos by Grant Sanderson: Playlist

MNIST Neural Network Visualization: Link

Neural Network Simulator: Link

Overfitting: Link

Neural Network Snake: Video

Neural Network Car: Video

Neural Network Flappy Bird: Video

Neural Network Mario: Video

MyScript: algorithm Link demo Link

Maple Calculator: Link

📗 YouTube videos from 2019 to 2021

How to construct XOR network? Link How derive 2-layer neural network gradient descent step? Link

How derive multi-layer neural network gradient descent induction step? Link

Comparison between L1 and L2 regularization. Link

Example (Quiz): Cross validation accuracy Link

# Keywords and Notations

📗 Neural Network:

Neural network classifier for two layer network with logistic activation: \(\hat{y}_{i} = 1_{\left\{a^{\left(2\right)}_{i} \geq 0.5\right\}}\) \(a^{\left(1\right)}_{ij} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j'=1}^{m} x_{ij'} w^{\left(1\right)}_{j'j}\right) + b^{\left(1\right)}_{j}\right)\right)}\), where \(m\) is the number of features (or input units), \(w^{\left(1\right)}_{j' j}\) is the layer \(1\) weight from input unit \(j'\) to hidden layer unit \(j\), \(b^{\left(1\right)}_{j}\) is the bias for hidden layer unit \(j\), \(a_{ij}^{\left(1\right)}\) is the layer \(1\) activation of instance \(i\) hidden unit \(j\).

\(a^{\left(2\right)}_{i} = \dfrac{1}{1 + \exp\left(- \left(\left(\displaystyle\sum_{j=1}^{h} a^{\left(1\right)}_{ij} w^{\left(2\right)}_{j}\right) + b^{\left(2\right)}\right)\right)}\), where \(h\) is the number of hidden units, \(w^{\left(2\right)}_{j}\) is the layer \(2\) weight from hidden layer unit \(j\), \(b^{\left(2\right)}\) is the bias for the output unit, \(a^{\left(2\right)}_{i}\) is the layer \(2\) activation of instance \(i\).

Stochastic gradient descent step for two layer network with squared loss and logistic activation:

\(w^{\left(1\right)}_{j' j} = w^{\left(1\right)}_{j' j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right) x_{ij'}\).

\(b^{\left(1\right)}_{j} \leftarrow b^{\left(1\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) w_{j}^{\left(2\right)} a_{ij}^{\left(1\right)} \left(1 - a_{ij}^{\left(1\right)}\right)\).

\(w^{\left(2\right)}_{j} \leftarrow w^{\left(2\right)}_{j} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right) a_{ij}^{\left(1\right)}\).

\(b^{\left(2\right)} \leftarrow b^{\left(2\right)} - \alpha \left(a^{\left(2\right)}_{i} - y_{i}\right) a^{\left(2\right)}_{i} \left(1 - a^{\left(2\right)}_{i}\right)\).

📗 Multiple Classes:

Softmax activation for one layer networks: \(a_{ij} = \dfrac{\exp\left(- \left(w_{k^\top} x_{i} + b_{k}\right)\right)}{\displaystyle\sum_{k' = 1}^{K} \exp\left(- \left(w_{k'}^\top x_{i} + b_{k'}\right)\right)}\), where \(K\) is the number of classes (number of possible labels), \(a_{i k}\) is the activation of the output unit \(k\) for instance \(i\), \(y_{i k}\) is component \(k\) of the one-hot encoding of the label for instance \(i\). 📗 Regularization:

L1 regularization (squared loss): \(\displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2} + \lambda \left(\displaystyle\sum_{j=1}^{m} \left| w_{j} \right| + \left| b \right|\right)\), where \(\lambda\) is the regularization parameter. L2 regularization (sqaured loss): \(\displaystyle\sum_{i=1}^{n} \left(a_{i} - y_{i}\right)^{2} + \lambda \left(\displaystyle\sum_{j=1}^{m} \left(w_{j}\right)^{2} + b^{2}\right)\).

Last Updated: January 19, 2026 at 9:18 PM